[Docker] Nextcloud with Caddy as a Reverse Proxy (2024)

Featuring PHP-FPM, PostgreSQL, notify_push, cron, Imaginary and Caddy

Table of contents

- Overview

- Introduction

- Difference to Nextcloud docker-compose setup with notify_push (2024)

- Alternatives and credits for AiO and other appliances

- Disclaimer

- The use of Docker and the preparation of the environment

- Requirements

- Set up a directory for our project

- The Nextcloud Stack: Creation of the required files

- .env

- nextcloud.env

- cron.sh

- Webserver

- Nextcloud Stack (docker-compose.yml)

- secrets

- Generate secure random password on command line

- Create required mount points

- Overview of container mounts

- Launch the Nextcloud Stacks

- First start of Nextcloud

- Status of the Nextcloud Stack

- The Reverse Proxy Stack: Creation of the required files

- Reverse Proxy

- Proxy Stack (docker-compose.yml)

- File structure of the Reverse Proxy Stack

- Launch the Reverse Proxy Stack

- First start of the proxy

- Status of the Caddy Stack

- Initial setup of Nextcloud via CLI

- Setup notify_push

- Setup imaginary

- Side notes

- Install apps

- Logging and troubleshooting

- Creating, Starting and Updating Containers

- Nextcloud Office (Collabora integration)

- Backup / Restore / Remove

- Start from scratch

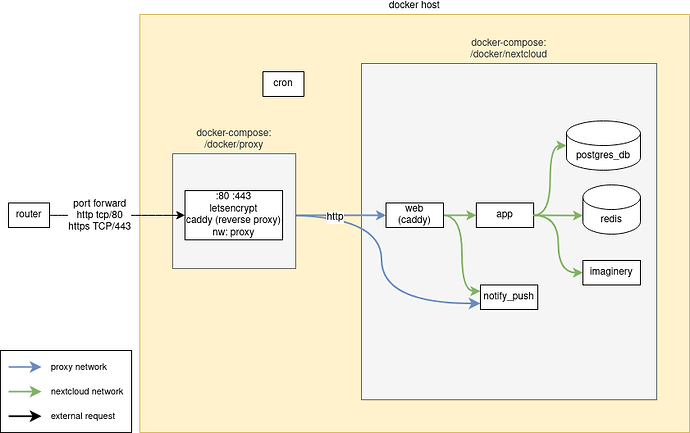

Overview

Introduction

This tutorial is intended to help you set up Nextcloud using Docker.

The special feature is the use of the fpm image from Nextcloud. It is based on the php-fpm image and runs a FastCGI process that serves your Nextcloud page. To use this image, it must be combined with a web server that can proxy the HTTP requests to the FastCGI port of the container. Caddy takes over this task as the web server, and you will learn how to configure it in this tutorial.

Another feature of this tutorial is the use of notify_push. It attempts to solve the issue where Nextcloud clients have to periodically ask the server whether any files have changed. To keep sync snappy, clients want to check for updates often, which increases the load on the server.

With many clients all checking for updates, a large portion of the server load can consist of just these update checks. By providing a way for the server to send update notifications to the clients, the need for the clients to make these checks can be greatly reduced.

Last but not least, Imaginary should ensure that preview images for a wide variety of file formats are created quickly and unobtrusively.

For quite a few people, Nextcloud and Docker are the first step into self-hosting, and in this context the question of accessibility also arises: How can I make my locally hosted service available on the Internet? A reverse proxy is a common choice for tackling this task.

NGINX, Caddy or Traefik, to name just a few, can all accomplish this task. The tutorial takes up this point and helps you get started, again using the versatile Caddy.

However, note that the tutorial cannot provide in-depth background knowledge on cybersecurity, network technology or similar topics. So read up on these topics thoroughly before you open your doors.

One more thing: The tutorial assumes that no reverse proxy is in use so far. Nevertheless, this part is completely optional: if a reverse proxy is already in use, it can be omitted and the port of the Nextcloud web server can be published directly on the Docker host. Of course, the Docker network proxy is then also obsolete. For this situation, please make your own changes to the docker-compose.yml!

I would like to take this opportunity to thank wwe AKA Willi Weikum for his tutorial Nextcloud docker-compose setup with notify_push (2024). It served as the basis for my personal project and as a guardrail among the numerous tutorials out there that never really wanted to give me the whole answer to my question.

So if the wording sounds familiar to you, I probably didn’t reinvent the wheel. Thanks, wwe!

Difference to Nextcloud docker-compose setup with notify_push (2024)

This brings us to the differences between my tutorial and wwe’s tutorial. The changes are based on my personal needs and taste. They don’t have to match yours or the common opinion on how Nextcloud should best be run.

- Use of the fpm image and thus the need to provide a web server for Nextcloud

- Operation of redis as non-root under its own user

- A password is used for redis and provided via Docker secrets

- cron runs as a crontab on the Docker host, as the failure rate of the Docker solution was too high for me and it behaved erratically

- Caddy as both an internal web server and a reverse proxy. I intentionally separated the two tasks. The reverse proxy service may not be necessary if a reverse proxy is already in use

- Nextcloud files are not located in www-root (i.e. not under /var/www/html/data), but separately on an additional mounted drive.

Alternatives and credits for AiO and other appliances

If you are a beginner, lack IT knowledge or simply want an easy-to-set-up Nextcloud without any desire to learn about and customize the system, other options might be better suited for you. Take a look at the #All-in-One project, which provides more functionality out of the box at the cost of lower flexibility. There are also other projects like ncp and the Hansson IT VM, multiple hardware devices pre-configured with Nextcloud, and many managed Nextcloud offers out there. If you are looking for an easy-to-use Nextcloud, you should probably stop reading here.

Other tutorials which might be easier to start as a beginner:

- Nextcloud AiO

- Your Guide to the Nextcloud All-in-One on Windows 10 & 11!

- Full Nextcloud Docker Container for Raspberry Pi 4 & 5 with Collabora Online server (Nextcloud Office) behind Nginx Reverse Proxy Manager + GoAccess Charts. Supported with Redis Cache + Cron Jobs

- Nextcloud docker-compose setup with notify_push (2024) [Advanced Users]

Disclaimer

This article explains a somewhat advanced setup using “manual” docker compose. By their nature, Docker and Docker Compose already do a lot of the work for you, and you can easily set up complex applications like Nextcloud within minutes. You will find dozens of good tutorials on how to set up Nextcloud in minutes. Most of them focus on a fast setup and skip the complexity required for a stable, secure and fast system. If you are willing to follow the more complex path and learn the docker compose way to set up and maintain Nextcloud, follow the steps below.

Tough stuff follows, and I recommend that you understand all aspects of your system when you choose to run it self-hosted.

The use of Docker and the preparation of the environment

Docker Compose offers the option of using stacks to combine a bundle of services that are intended to interact with each other into one package. We will create such a stack for Nextcloud, for example: all the services required to operate the cloud are combined in it.

Another stack will be created for the reverse proxy. Even if it is noticeably smaller, it makes sense to divide your Docker projects into such stacks. Perhaps you will add a stack for streaming with Kodi at some point? Stacks let you organize your projects, and that’s exactly why we use them.

Requirements

Before that, however, we need to take care of a few things and ensure that the prerequisites are met. Start with the following:

- system user without login (adapt the user ID):

sudo useradd --no-create-home --shell /usr/sbin/nologin --uid 1004 test-nc

I did not customize the username when I followed the tutorial. Long live test-nc. The name can of course be customized.

- working docker-compose

- docker network proxy, used to connect the proxy container with the Nextcloud application (adapt in case your setup differs):

docker network create --subnet=172.16.0.0/24 proxy

You can define a subnet yourself. Remember, however, that this subnet is used in many subsequent files and must be adapted if you deviate from it.

- directory hosting all configs and data. The location on the Docker host can be chosen at will. However, it should not be under the /home directory. I personally prefer a location under /.
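
Before going further, it can help to verify these prerequisites in one go. The following is a minimal sketch, assuming the names and values used in this tutorial (user test-nc, UID 1004, network proxy with subnet 172.16.0.0/24); the helper names are hypothetical, and the Docker checks of course only work on the Docker host itself.

```shell
# Hypothetical helper: succeed if user $1 exists and has UID $2.
user_has_uid() {
  local uid
  uid=$(id -u "$1" 2>/dev/null) || return 1
  [ "$uid" = "$2" ]
}

# Hypothetical helper: print the subnet(s) configured for Docker network $1.
network_subnet() {
  docker network inspect --format '{{range .IPAM.Config}}{{.Subnet}}{{end}}' "$1"
}

# Hypothetical helper: check the prerequisites of this section and report each result.
# Assumes the tutorial's values: user test-nc, UID 1004, network proxy, subnet 172.16.0.0/24.
check_prerequisites() {
  local failed=0
  if user_has_uid test-nc 1004; then
    echo "user test-nc: ok"
  else
    echo "user test-nc: missing or wrong UID"; failed=1
  fi
  if docker compose version >/dev/null 2>&1; then
    echo "docker compose: ok"
  else
    echo "docker compose: not available"; failed=1
  fi
  if [ "$(network_subnet proxy 2>/dev/null)" = "172.16.0.0/24" ]; then
    echo "network proxy: ok"
  else
    echo "network proxy: missing or different subnet"; failed=1
  fi
  return "$failed"
}
```

Run check_prerequisites and fix whatever is reported before continuing. If you chose a different user name, UID or subnet, adjust the values inside the function accordingly.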

Set up a directory for our project

We need a place where we can store the aforementioned stacks. If you are getting started on a PC, a server or a VM, a location under the root directory / could be an idea. If you search the Internet for this topic, you will find several other locations that you could use as well. Let’s start by creating the directory. To avoid typos and save typing, we store the path to this directory in a variable, so that from now on we only have to use this variable. This is really helpful if your path is completely different from the one in this tutorial.

Let's get started:

# Adjust the directory for this variable `DOCKER_PROJECT_DIR`!

DOCKER_PROJECT_DIR=/docker

sudo mkdir -p ${DOCKER_PROJECT_DIR}/{nextcloud,proxy}

sudo chown -R $USER:$USER ${DOCKER_PROJECT_DIR}

cd ${DOCKER_PROJECT_DIR}/nextcloud

Explanation: We want to create our stacks under /docker. Each stack gets its own folder. With {nextcloud,proxy} we create both folders under /docker, and with chown we make sure that the directory belongs to us. This is necessary because you probably needed root rights (sudo) to create the folder with mkdir. Now that the folder belongs to you, you no longer need these special rights (for the time being).

The structure should now look as follows:

$ tree -L 1 ${DOCKER_PROJECT_DIR}

/docker

├── nextcloud

└── proxy

Easy to overlook: we change to the location of our first stack!

cd ${DOCKER_PROJECT_DIR}/nextcloud

The Nextcloud Stack: Creation of the required files

.env

Global environment variables required for the whole project. In general, this is likely the only file you need to edit.

Use nano or vim to create the required files. Your favorite may need to be installed first. Beginners usually feel more comfortable with nano, but once you get the hang of it, vim is just great!

Create a file .env using your favorite editor and paste the data (don’t forget to adapt the domain and the values from the requirements): nano ${DOCKER_PROJECT_DIR}/nextcloud/.env

.env

# project name is used as container name prefix (folder name if not set)

COMPOSE_PROJECT_NAME=nextcloud

# this is the global ENV file for docker-compose

# nextcloud FQDN - public DNS

DOMAIN=nextcloud.mydomain.tld

NEXTCLOUD_VERSION=29.0.7-fpm

DH_DATA_DIR=/HDD/nextcloud/data

NC_DATA_DIR=/nextcloud-data

UID=1004

GID=1004

DOCKER_LOGGING_MAX_SIZE=5m

DOCKER_LOGGING_MAX_FILE=3

Explanation: My Docker host is a virtual machine on my Proxmox host. The VM has two hard disks:

- sda is set up on the SSD of the Proxmox host.
  - The operating system is installed on this disk.
  - Size: 32 GB

- sdb is set up on a RAID array of HDDs.
  - Added as a second volume of the VM and initialized as a ZFS pool.
  - Size: 2 TB

If you also want to store the Nextcloud data in a separate place, simply adjust the path in DH_DATA_DIR.

Useless knowledge:

- DH_: Docker host

- NC_: Nextcloud container

If you do not care about the storage of the Nextcloud data, then remove the two variables DH_DATA_DIR and NC_DATA_DIR from the .env.

If you only notice it here: yes, you need a domain. Adjust DOMAIN accordingly.
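
Since the later files rely on these variables, a quick check for missing values can save some debugging. A minimal sketch, assuming the .env only contains simple KEY=value lines as above (no quotes or multi-line values); check_env is a hypothetical helper name.

```shell
# Hypothetical helper: report required keys that are missing or empty in an .env file.
# Usage: check_env FILE KEY...; assumes plain KEY=value lines as in the .env above.
# The file is parsed with grep instead of being sourced, because UID is a read-only variable in bash.
check_env() {
  local env_file=$1 key value missing=0
  shift
  for key in "$@"; do
    # If a key occurs more than once, this sketch looks at the last occurrence.
    value=$(grep -E "^${key}=" "$env_file" | tail -n 1 | cut -d= -f2-)
    if [ -z "$value" ]; then
      echo "missing or empty: $key"
      missing=1
    fi
  done
  return "$missing"
}
```

Run it from ${DOCKER_PROJECT_DIR}/nextcloud, for example: check_env .env COMPOSE_PROJECT_NAME DOMAIN NEXTCLOUD_VERSION UID GID DOCKER_LOGGING_MAX_SIZE DOCKER_LOGGING_MAX_FILE. DH_DATA_DIR and NC_DATA_DIR are left out here because they are optional.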

nextcloud.env

This file is used on the first start of the Nextcloud app container to create the config.php file. Changes are generally not applied once the container has been started for the first time!

This file should work for most cases. If you are very limited in RAM, you may want to reduce PHP_MEMORY_LIMIT (or increase it if you can afford to). The ${DOMAIN} variable is populated from the top-level .env file in all the places where it is required, so you only need to edit it there.

Create a file nextcloud.env using your favorite editor and paste the data:

nano ${DOCKER_PROJECT_DIR}/nextcloud/nextcloud.env

nextcloud.env

# nextcloud FQDN - public DNS

NEXTCLOUD_TRUSTED_DOMAINS=${DOMAIN}

# reverse proxy config

OVERWRITEHOST=${DOMAIN}

OVERWRITECLIURL=https://${DOMAIN}

OVERWRITEPROTOCOL=https

# all private IPs - excluded for now: 192.168.0.0/16 10.0.0.0/8 fc00::/7 fe80::/10 2001:db8::/32

TRUSTED_PROXIES=172.16.0.0/24

# nextcloud data directory

NEXTCLOUD_DATA_DIR=${NC_DATA_DIR}

# php

PHP_MEMORY_LIMIT=1G

PHP_UPLOAD_LIMIT=10G

# db

POSTGRES_HOST=db

POSTGRES_DB_FILE=/run/secrets/postgres_db

POSTGRES_USER_FILE=/run/secrets/postgres_user

POSTGRES_PASSWORD_FILE=/run/secrets/postgres_password

# admin user

NEXTCLOUD_ADMIN_PASSWORD_FILE=/run/secrets/nextcloud_admin_password

NEXTCLOUD_ADMIN_USER_FILE=/run/secrets/nextcloud_admin_user

# redis

REDIS_HOST=redis

REDIS_HOST_PASSWORD_FILE=/run/secrets/redis_password

Explanation: If you have decided in .env not to store the Nextcloud data elsewhere, you must remove NEXTCLOUD_DATA_DIR.

If you go with the default setup, where Caddy is used both as the reverse proxy and as the web server, only the subnet of the proxy network is required for TRUSTED_PROXIES. If you only use the Caddy web server and provide the reverse proxy in a different way, change the IP address/range accordingly. Remember to use CIDR notation for IP addresses.
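
If you are unsure whether an address is covered by the range in TRUSTED_PROXIES (or trusted_proxies in the Caddyfile), the CIDR arithmetic is easy to check by hand. A minimal IPv4-only sketch in plain shell arithmetic; ip_to_int and ip_in_cidr are hypothetical helper names, and IPv6 ranges are not handled.

```shell
# Hypothetical helper: convert a dotted IPv4 address (a.b.c.d) to a 32-bit integer.
ip_to_int() {
  local IFS=.
  # Unquoted expansion splits the address on dots into $1..$4.
  set -- $1
  echo $(( ($1 << 24) | ($2 << 16) | ($3 << 8) | $4 ))
}

# Hypothetical helper: succeed if IPv4 address $1 lies inside the CIDR range $2 (e.g. 172.16.0.0/24).
ip_in_cidr() {
  local net=${2%/*} bits=${2#*/} mask
  # Netmask with the upper $bits bits set, e.g. /24 -> 0xFFFFFF00.
  mask=$(( (0xFFFFFFFF << (32 - bits)) & 0xFFFFFFFF ))
  [ $(( $(ip_to_int "$1") & mask )) -eq $(( $(ip_to_int "$net") & mask )) ]
}
```

For example, ip_in_cidr 172.16.0.3 172.16.0.0/24 && echo trusted. The addresses actually assigned to containers on the proxy network can be looked up with docker network inspect proxy.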

cron.sh

Source: Nextcloud Docker Container - best way to run cron job

Unfortunately, I don’t see any way to run the cron container as non-root.

Personally, I have given up on running cron.sh in Docker. Therefore, I have moved this task to the Docker host.

I found this advice to be the most helpful of them all, as anything else means meddling with Dockerfiles and the like.

What is perhaps not mentioned very clearly is that this command needs to be added to the crontab of root on the host system. Open it with:

sudo crontab -e

Add this line to the file:

*/5 * * * * docker compose -f /docker/nextcloud/docker-compose.yml exec -u 1004 app php /var/www/html/cron.php

Close and save.

Tip: In our example, the docker-compose.yml to be created is located under /docker/nextcloud. Adjust the path according to your setup.
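
If you prefer not to edit the crontab interactively, the entry can also be built and appended from the shell. A minimal sketch: nc_cron_line and install_nc_cron are hypothetical helper names. Unlike the line above, the sketch adds -T, which tells docker compose exec not to allocate a pseudo-TTY; cron does not provide a terminal, so this is a defensive choice on my part rather than something the setup above was shown to need.

```shell
# Hypothetical helper: print the crontab entry that runs Nextcloud's cron.php every 5 minutes.
# $1 = path to the Nextcloud docker-compose.yml, $2 = UID the app container runs as.
nc_cron_line() {
  printf '*/5 * * * * docker compose -f %s exec -T -u %s app php /var/www/html/cron.php\n' "$1" "$2"
}

# Hypothetical helper: append the entry to root's crontab unless an identical line already exists.
install_nc_cron() {
  local line
  line=$(nc_cron_line "$1" "$2")
  if sudo crontab -l 2>/dev/null | grep -qxF "$line"; then
    echo "cron entry already present"
  else
    # crontab - installs the combined old and new entries from stdin.
    { sudo crontab -l 2>/dev/null; echo "$line"; } | sudo crontab -
  fi
}
```

For this tutorial's layout: install_nc_cron /docker/nextcloud/docker-compose.yml 1004. Afterwards, sudo crontab -l should list the new line.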

If you want to use the original Docker-based cron instead, you have to adapt the docker-compose.yml and follow the description in the linked tutorial.

Webserver

With the fpm image, we have to provide our own web server. Caddy can be used for this as well as for the reverse proxy. Create the main config file Caddyfile in the corresponding directory:

mkdir web

nano web/Caddyfile

web/Caddyfile

{

servers {

trusted_proxies static 172.16.0.0/24

}

log DEBUG

}

:80 {

request_body {

max_size 10G

}

# Enable gzip but do not remove ETag headers

encode {

zstd

gzip 4

minimum_length 256

match {

header Content-Type application/atom+xml

header Content-Type application/javascript

header Content-Type application/json

header Content-Type application/ld+json

header Content-Type application/manifest+json

header Content-Type application/rss+xml

header Content-Type application/vnd.geo+json

header Content-Type application/vnd.ms-fontobject

header Content-Type application/wasm

header Content-Type application/x-font-ttf

header Content-Type application/x-web-app-manifest+json

header Content-Type application/xhtml+xml

header Content-Type application/xml

header Content-Type font/opentype

header Content-Type image/bmp

header Content-Type image/svg+xml

header Content-Type image/x-icon

header Content-Type text/cache-manifest

header Content-Type text/css

header Content-Type text/plain

header Content-Type text/vcard

header Content-Type text/vnd.rim.location.xloc

header Content-Type text/vtt

header Content-Type text/x-component

header Content-Type text/x-cross-domain-policy

}

}

header {

# Based on following source:

# https://raw.githubusercontent.com/nextcloud/docker/refs/heads/master/.examples/docker-compose/insecure/mariadb/fpm/web/nginx.conf

#

# HSTS settings

# WARNING: Only add the preload option once you read about

# the consequences in https://hstspreload.org/. This option

# will add the domain to a hardcoded list that is shipped

# in all major browsers and getting removed from this list

# could take several months.

# Strict-Transport-Security "max-age=15768000; includeSubDomains; preload;"

Strict-Transport-Security "max-age=31536000; includeSubDomains;"

# HTTP response headers borrowed from Nextcloud `.htaccess`

Referrer-Policy no-referrer

X-Content-Type-Options nosniff

X-Download-Options noopen

X-Frame-Options SAMEORIGIN

X-Permitted-Cross-Domain-Policies none

X-Robots-Tag "noindex,nofollow"

X-XSS-Protection "1; mode=block"

Permissions-Policy "accelerometer=(), ambient-light-sensor=(), autoplay=(), battery=(), camera=(), cross-origin-isolated=(), display-capture=(), document-domain=(), encrypted-media=(), execution-while-not-rendered=(), execution-while-out-of-viewport=(), fullscreen=(), geolocation=(), gyroscope=(), keyboard-map=(), magnetometer=(), microphone=(), midi=(), navigation-override=(), payment=(), picture-in-picture=(), publickey-credentials-get=(), screen-wake-lock=(), sync-xhr=(), usb=(), web-share=(), xr-spatial-tracking=()"

}

# Path to the root of your installation

root * /var/www/html

handle_path /push/* {

reverse_proxy http://notify_push:7867

}

route {

# Rule borrowed from `.htaccess` to handle Microsoft DAV clients

@msftdavclient {

header User-Agent DavClnt*

path /

}

redir @msftdavclient /remote.php/webdav/ temporary

route /robots.txt {

log_skip

file_server

}

# Add exception for `/.well-known` so that clients can still access it

# despite the existence of the `error @internal 404` rule which would

# otherwise handle requests for `/.well-known` below

route /.well-known/* {

redir /.well-known/carddav /remote.php/dav/ permanent

redir /.well-known/caldav /remote.php/dav/ permanent

@well-known-static path \

/.well-known/acme-challenge /.well-known/acme-challenge/* \

/.well-known/pki-validation /.well-known/pki-validation/*

route @well-known-static {

try_files {path} {path}/ =404

file_server

}

redir * /index.php{path} permanent

}

@internal path \

/build /build/* \

/tests /tests/* \

/config /config/* \

/lib /lib/* \

/3rdparty /3rdparty/* \

/templates /templates/* \

/data /data/* \

\

/.* \

/autotest* \

/occ* \

/issue* \

/indie* \

/db_* \

/console*

error @internal 404

@assets {

path *.css *.js *.svg *.gif *.png *.jpg *.jpeg *.ico *.wasm *.tflite *.map *.woff2

file {path} # Only if requested file exists on disk, otherwise /index.php will take care of it

}

route @assets {

header /* Cache-Control "max-age=15552000" # Cache-Control policy borrowed from `.htaccess`

header /*.woff2 Cache-Control "max-age=604800" # Cache-Control policy borrowed from `.htaccess`

log_skip # Optional: Don't log access to assets

file_server {

precompressed gzip

}

}

# Rule borrowed from `.htaccess`

redir /remote/* /remote.php{path} permanent

# Serve found static files, continuing to the PHP default handler below if not found

try_files {path} {path}/

@notphpordir not path /*.php /*.php/* / /*/

file_server @notphpordir {

pass_thru

}

# Required for legacy support

#

# Rewrites all other requests to be prepended by “/index.php” unless they match a known-valid PHP file path.

@notlegacy {

path *.php *.php/

not path /index*

not path /remote*

not path /public*

not path /cron*

not path /core/ajax/update*

not path /status*

not path /ocs/v1*

not path /ocs/v2*

not path /ocs-provider/*

not path /updater/*

not path */richdocumentscode/proxy*

}

rewrite @notlegacy /index.php{uri}

# Let everything else be handled by the PHP-FPM component

php_fastcgi app:9000 {

env modHeadersAvailable true # Avoid sending the security headers twice

env front_controller_active true # Enable pretty urls

}

}

}

Explanation:

The IP range from which the web server receives its requests is specified in trusted_proxies static 172.16.0.0/24. The reverse proxy forwards the requests it receives to the Nextcloud web server via the proxy network. Since we specified the subnet when creating that network, we enter it here accordingly. It is the same address range as TRUSTED_PROXIES in nextcloud.env. If another reverse proxy is already in use, the IP range may need to be adjusted.
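
The @internal matcher in the Caddyfile above decides which paths are answered with a 404 instead of being passed on to PHP. To get a feeling for which requests it catches, its path list can be approximated with shell case patterns. This is only a rough sketch with a hypothetical function name, not how Caddy evaluates matchers. Also keep in mind that inside the route block, /robots.txt and /.well-known/* are handled before the error @internal 404 line is reached, which is why /.well-known is not blocked even though it matches /.*.

```shell
# Hypothetical helper: rough approximation of the @internal path matcher from web/Caddyfile.
# Returns 0 if the path would be answered with 404, 1 if it is passed on to the next handlers.
is_internal_path() {
  case $1 in
    /build|/build/*|/tests|/tests/*|/config|/config/*|/lib|/lib/*) return 0 ;;
    /3rdparty|/3rdparty/*|/templates|/templates/*|/data|/data/*) return 0 ;;
    /.*|/autotest*|/occ*|/issue*|/indie*|/db_*|/console*) return 0 ;;
    *) return 1 ;;
  esac
}
```

For example, is_internal_path /config/config.php succeeds (blocked), while is_internal_path /index.php fails (passed on).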

Nextcloud Stack (docker-compose.yml)

This compose file creates a pretty complete Nextcloud installation, with PostgreSQL running as the limited user you defined in the global .env.

- For PostgreSQL and Redis I intentionally chose Debian-based images, as the popular Alpine flavors turned out to be slower.

- All mounts are stored inside the project directory, except the Nextcloud data (see DH_DATA_DIR). In case you want to store all or some files in a different place, please adapt docker-compose.yml.

One Docker feature I didn’t understand and underestimated for a long time is “silently included”: Docker Compose names the containers of its services using the ${COMPOSE_PROJECT_NAME} variable and falls back to the directory name of the compose file if this variable is not set. This allows you to run multiple compose installations from the same file just by duplicating the folder/compose file. With the .env from above, the containers start with the nextcloud prefix and a -1 suffix, so you will later find containers like nextcloud-app-1, nextcloud-db-1 etc. You can still use service names to manage containers: running docker compose exec app {command} always addresses the service app of the stack in your current directory. Whether that is a test, dev or prod instance, the command remains the same.

Create a file docker-compose.yml using your favorite editor and paste the data: nano docker-compose.yml

Nextcloud docker-compose.yml

networks:

proxy:

external: true

secrets:

nextcloud_admin_password:

file: ./secrets/nextcloud_admin_password # put admin password in this file

nextcloud_admin_user:

file: ./secrets/nextcloud_admin_user # put admin username in this file

postgres_db:

file: ./secrets/postgres_db # put postgresql db name in this file

postgres_password:

file: ./secrets/postgres_password # put postgresql password in this file

postgres_user:

file: ./secrets/postgres_user # put postgresql username in this file

redis_password:

file: ./secrets/redis_password # put redis password in this file

services:

web:

image: caddy:2.8.4

pull_policy: always

restart: unless-stopped

user: ${UID}:${GID}

depends_on:

- app

volumes:

- ./web/Caddyfile:/etc/caddy/Caddyfile

- ./web/data:/data

- ./web/config:/config

- ./nextcloud:/var/www/html:ro

- ./apps:/var/www/html/custom_apps:ro

- /etc/localtime:/etc/localtime:ro

- /etc/timezone:/etc/timezone:ro

logging:

options:

max-size: ${DOCKER_LOGGING_MAX_SIZE:?DOCKER_LOGGING_MAX_SIZE not set}

max-file: ${DOCKER_LOGGING_MAX_FILE:?DOCKER_LOGGING_MAX_FILE not set}

healthcheck:

test: ["CMD", "wget", "--no-verbose", "--tries=1", "--spider", "127.0.0.1:2019/metrics"]

interval: 10s

retries: 3

start_period: 5s

timeout: 5s

networks:

- default

- proxy

app:

image: nextcloud:${NEXTCLOUD_VERSION}

restart: unless-stopped

user: ${UID}:${GID}

depends_on:

db:

condition: service_healthy

redis:

condition: service_healthy

env_file:

- ./nextcloud.env

secrets:

- postgres_db

- postgres_password

- postgres_user

- nextcloud_admin_user

- nextcloud_admin_password

- redis_password

volumes:

- ./nextcloud:/var/www/html

- ./apps:/var/www/html/custom_apps

# if another location is not desired for data:

# - ./data:/var/www/html/data

- ${DH_DATA_DIR}:${NC_DATA_DIR}

- ./config:/var/www/html/config

# https://github.com/nextcloud/docker/issues/182

- ./redis-session.ini:/usr/local/etc/php/conf.d/redis-session.ini

- /etc/localtime:/etc/localtime:ro

- /etc/timezone:/etc/timezone:ro

db:

# https://hub.docker.com/_/postgres

image: postgres:15

restart: unless-stopped

user: ${UID}:${GID}

environment:

- POSTGRES_DB_FILE=/run/secrets/postgres_db

- POSTGRES_USER_FILE=/run/secrets/postgres_user

- POSTGRES_PASSWORD_FILE=/run/secrets/postgres_password

volumes:

- ./db:/var/lib/postgresql/data

- /etc/passwd:/etc/passwd:ro

- /etc/localtime:/etc/localtime:ro

- /etc/timezone:/etc/timezone:ro

# psql performance tuning: https://pgtune.leopard.in.ua/

# DB Version: 15

# OS Type: linux

# DB Type: web

# Total Memory (RAM): 5 GB

# CPUs num: 4

# Data Storage: ssd

# command:

# postgres

# -c max_connections=200

# -c shared_buffers=1280MB

# -c effective_cache_size=3840MB

# -c maintenance_work_mem=320MB

# -c checkpoint_completion_target=0.9

# -c wal_buffers=16MB

# -c default_statistics_target=100

# -c random_page_cost=1.1

# -c effective_io_concurrency=200

# -c work_mem=3276kB

# -c min_wal_size=1GB

# -c max_wal_size=4GB

# -c max_worker_processes=4

# -c max_parallel_workers_per_gather=2

# -c max_parallel_workers=4

# -c max_parallel_maintenance_workers=2

healthcheck:

test: ["CMD-SHELL", "pg_isready -d `cat $$POSTGRES_DB_FILE` -U `cat $$POSTGRES_USER_FILE`"]

start_period: 15s

interval: 30s

retries: 3

timeout: 5s

secrets:

- postgres_db

- postgres_password

- postgres_user

redis:

image: redis:bookworm

restart: unless-stopped

user: ${UID}:${GID}

command: bash -c 'redis-server --requirepass "$$(cat /run/secrets/redis_password)"'

secrets:

- redis_password

volumes:

- ./redis:/data

healthcheck:

test: ["CMD-SHELL", "redis-cli --no-auth-warning -a \"$$(cat /run/secrets/redis_password)\" ping | grep PONG"]

start_period: 10s

interval: 30s

retries: 3

timeout: 3s

notify_push:

image: nextcloud:${NEXTCLOUD_VERSION}

restart: unless-stopped

user: ${UID}:${GID}

depends_on:

- web

environment:

- PORT=7867

- NEXTCLOUD_URL=http://web

volumes:

- ./nextcloud:/var/www/html:ro

- ./apps:/var/www/html/custom_apps:ro

- ./config:/var/www/html/config:ro

- /etc/localtime:/etc/localtime:ro

- /etc/timezone:/etc/timezone:ro

entrypoint: /var/www/html/custom_apps/notify_push/bin/x86_64/notify_push /var/www/html/config/config.php

networks:

- default

- proxy

imaginary:

image: nextcloud/aio-imaginary:latest

restart: unless-stopped

user: ${UID}:${GID}

expose:

- "9000"

depends_on:

- app

#environment:

# - TZ=${TIMEZONE} # e.g. Europe/Berlin

cap_add:

- SYS_NICE

tmpfs:

- /tmp

Explanation:

- If you do not want to change the location of the Nextcloud data, you must adjust the volumes under app:

  - remove - ${DH_DATA_DIR}:${NC_DATA_DIR}

  - uncomment ./data:/var/www/html/data

  - remember that in this case NEXTCLOUD_DATA_DIR must be removed from nextcloud.env!!!

- Parameters are commented out under db, which can ensure that the database is optimally adapted to your situation and hardware. If you want to activate the parameters, visit the page https://pgtune.leopard.in.ua/, enter your parameters and transfer the result to docker-compose.yml. The section must then be uncommented. To do this, remove the hash marks # from command: down to the last command (-c).

secrets

Docker secrets is a concept for storing the credentials of Docker applications more securely. Traditionally, one would expose credentials like the DB password using environment variables, which can result in these variables being leaked while troubleshooting, e.g. when an application dumps its environment into log files. Docker Compose didn’t support secrets earlier, and the current implementation is not really sophisticated (the secret is stored as a plain file), but it is still better hidden than a simple environment variable.

The app is built around the following secrets, stored in a separate “secrets” directory.

Create a “secret” file for the Nextcloud admin user and for the PostgreSQL user and database, as the Nextcloud Docker container requires the full set of _FILE variables (you can use random strings for these as well, but it makes things harder):

mkdir secrets

echo -n "admin" > ./secrets/nextcloud_admin_user

echo -n "nextcloud" > ./secrets/postgres_db

echo -n "nextcloud" > ./secrets/postgres_user

tr -dc 'A-Za-z0-9#$%&+_' < /dev/urandom | head -c 32 | tee ./secrets/postgres_password; echo

tr -dc 'A-Za-z0-9#$%&+_' < /dev/urandom | head -c 32 | tee ./secrets/nextcloud_admin_password; echo

tr -dc 'A-Za-z0-9#$%&+_' < /dev/urandom | head -c 32 | tee ./secrets/redis_password; echo

Generate secure random password on command line

This procedure might not be perfectly safe cryptographically, but it should be good enough for most cases: 32 characters consisting of letters, digits and some special characters (without problematic ones like braces; feel free to use the [:print:] or [:graph:] classes for more special characters).

tr -dc 'A-Za-z0-9#$%&+_' </dev/urandom | head -c 32; echo

Using this, we generate the “secret” files for postgres_password, nextcloud_admin_password and redis_password.

Check the contents of the folder:

$ ls secrets -w 1

nextcloud_admin_password

nextcloud_admin_user

postgres_db

postgres_password

postgres_user

redis_password
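
The password one-liner used above can be wrapped in small functions so that the length and character set are adjustable and an existing secret is not overwritten by accident. A minimal sketch; gen_secret and write_secret are hypothetical names, and the default character set is the one from this tutorial.

```shell
# Hypothetical helper: print a random string of length $1 (default 32),
# built from the tr character set $2 (default: the set used in this tutorial).
gen_secret() {
  local length=${1:-32} charset='A-Za-z0-9#$%&+_'
  [ -n "$2" ] && charset=$2
  # LC_ALL=C makes tr process the random input byte by byte, independent of the current locale.
  LC_ALL=C tr -dc "$charset" < /dev/urandom | head -c "$length"
}

# Hypothetical helper: write a new secret of length $2 (default 32) to file $1,
# unless the file already exists, so existing credentials are never overwritten.
write_secret() {
  if [ -e "$1" ]; then
    echo "$1 already exists, not overwriting" >&2
    return 1
  fi
  gen_secret "${2:-32}" > "$1"
}
```

For example, from ${DOCKER_PROJECT_DIR}/nextcloud: for s in postgres_password nextcloud_admin_password redis_password; do write_secret "./secrets/$s"; done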

Create required mount points

Just run the commands and adapt the UID of the user you created in “Requirements”:

DH_DATA_DIR=/HDD/nextcloud/data

mkdir -p apps config data db nextcloud redis web/data web/config

sudo mkdir -p $DH_DATA_DIR

touch redis-session.ini

sudo chown 1004:1004 apps config data db nextcloud redis redis-session.ini web/data web/config web/Caddyfile $DH_DATA_DIR

Explanation: The mount point of the storage location DH_DATA_DIR needs to be adjusted. It should be the same path as in .env. The -p flag creates all necessary parent directories of the given paths.
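
For repeatability, the same steps can be collected in one function that takes the data directory and the UID as arguments. A minimal sketch; create_mount_points is a hypothetical name, it must be run from ${DOCKER_PROJECT_DIR}/nextcloud after web/Caddyfile has been created, and it skips the chown step when the UID argument is empty (only useful for a dry run).

```shell
# Hypothetical helper: create the bind-mount directories of the Nextcloud stack in the
# current directory and hand them over to the container user.
# $1 = data directory on the Docker host (DH_DATA_DIR), $2 = UID/GID of the container user.
# If $2 is empty, ownership is left unchanged.
create_mount_points() {
  local data_dir=$1 owner=$2
  mkdir -p apps config data db nextcloud redis web/data web/config || return 1
  # The data directory may live on a root-owned drive, so fall back to sudo if needed.
  mkdir -p "$data_dir" 2>/dev/null || sudo mkdir -p "$data_dir" || return 1
  touch redis-session.ini || return 1
  if [ -n "$owner" ]; then
    sudo chown "$owner:$owner" apps config data db nextcloud redis redis-session.ini \
      web/data web/config web/Caddyfile "$data_dir"
  fi
}
```

With the values of this tutorial: create_mount_points /HDD/nextcloud/data 1004.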

The file and folder structure should look like this. If you have missed something, catch up on it now:

$ tree -a ${DOCKER_PROJECT_DIR}/nextcloud

├── apps

├── config

├── data

├── db

├── docker-compose.yml

├── .env

├── nextcloud

├── nextcloud.env

├── redis

├── redis-session.ini

├── secrets

│ ├── nextcloud_admin_password

│ ├── nextcloud_admin_user

│ ├── postgres_db

│ ├── postgres_password

│ ├── postgres_user

│ └── redis_password

└── web

├── Caddyfile

├── config

└── data

Overview of container mounts

Even if the picture no longer quite corresponds to our status, I would still like to use it. Many thanks to wwe!

Now run docker compose config to check whether there are any errors and whether the rendered configuration contains any obvious mistakes in the variables.

Launch the Nextcloud Stacks

First start of Nextcloud

Now is the time to start the stack: docker compose up -d

If you access your system via ssh, you can follow the logs in parallel in another session. To do this, enter the following: docker compose logs -f

-f keeps following the log output in this session. Stop it with CTRL + C when you are done.

Tip: By default, docker compose looks for the compose file in the directory where the command is executed. If you are in a different location, you must specify the file with docker compose -f /path/docker-compose.yml.
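
If you often work from other directories, a small shell function saves typing the -f path every time. A minimal sketch, assuming the directory layout of this tutorial; the name dcn is hypothetical, and the function could go into your ~/.bashrc. Compose uses the directory of the file passed with -f as the project directory, so the .env next to it should still be picked up.

```shell
# Hypothetical wrapper: run docker compose against the Nextcloud stack from any directory.
# Assumes DOCKER_PROJECT_DIR is set as at the beginning of this tutorial (falls back to /docker).
dcn() {
  docker compose -f "${DOCKER_PROJECT_DIR:-/docker}/nextcloud/docker-compose.yml" "$@"
}
```

Usage: dcn ps or dcn logs -f app.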

I’m using the more modern docker compose syntax. Older implementations need docker-compose with a dash instead; adapt the commands if required.

An error message related to notify_push is expected at this point, because the app is not installed yet:

Error response from daemon: failed to create task for container: failed to create shim task: OCI runtime create failed: runc create failed: unable to start container process: exec: "/var/www/html/custom_apps/notify_push/bin/x86_64/notify_push": stat /var/www/html/custom_apps/notify_push/bin/x86_64/notify_push: no such file or directory: unknown

It takes a while until all the containers are downloaded and started. Don’t be impatient: even after the app container has entered the “started” state, some initialization tasks are still running…

Status of the Nextcloud Stack

Check the status of Nextcloud by verifying that redis is working on the one hand and that the Nextcloud database accepts requests on the other. Because redis is protected by a password, the redis command differs only marginally from the usual one:

# show containers

$ docker compose ps

# redis healthcheck

$ docker compose exec redis sh -c 'redis-cli --no-auth-warning -a "$(cat /run/secrets/redis_password)" ping'

# postgres healthcheck

$ docker compose exec db pg_isready
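
Instead of repeating these commands until everything is up, you can poll the health status that the healthchecks in docker-compose.yml report. A minimal sketch using docker compose ps -q and docker inspect; wait_healthy is a hypothetical name, it must be run from the stack directory, and only services that define a healthcheck (db, redis and web here) will ever report healthy.

```shell
# Hypothetical helper: wait until service $1 of the current compose project reports "healthy".
# $2 = number of attempts (default 30), $3 = seconds between attempts (default 5).
wait_healthy() {
  local service=$1 tries=${2:-30} delay=${3:-5} id status
  id=$(docker compose ps -q "$service") || return 1
  [ -n "$id" ] || { echo "$service: no container found" >&2; return 1; }
  while [ "$tries" -gt 0 ]; do
    # Empty if the container has no healthcheck (the template error is discarded).
    status=$(docker inspect --format '{{.State.Health.Status}}' "$id" 2>/dev/null)
    if [ "$status" = "healthy" ]; then
      echo "$service: healthy"
      return 0
    fi
    tries=$((tries - 1))
    sleep "$delay"
  done
  echo "$service: not healthy (last status: ${status:-unknown})" >&2
  return 1
}
```

For example: for s in db redis web; do wait_healthy "$s"; done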

The Reverse Proxy Stack: Creation of the required files

Now we've had so much fun on the way to our own cloud and have already seen the Nextcloud spaceship take off. Before we can reach it, though, we need to take care of accessibility. The tutorial assumes that you have rented or purchased a domain and created a DNS record for it (via API or manually), so that nextcloud.mydomain.tld resolves to your public IP address. There are numerous other ways to run a site locally (with Pi-hole etc.), but they are not covered here.

Reverse Proxy

Our newly created web server from above will provide us with the content of our Nextcloud. However, experienced users will have noticed that the web server does not expose any port on the Docker host. This means that port 80 of the web server is not accessible via the IP address of the Docker host.

To solve this, we use Caddy again, this time as a reverse proxy that listens for requests on ports 80 (HTTP) and 443 (HTTPS) on the IP address of the Docker host and forwards them to the Caddy web server of the Nextcloud stack.

Do you remember our initial concept? Each stack gets its own directory under /docker. So let’s change the directory first, because from here on we’ll continue with the proxy stack!

cd ${DOCKER_PROJECT_DIR}/proxy

Caddyfile

The configuration as a webserver or as a reverse proxy is carried out via the file Caddyfile.

nano Caddyfile

proxy/Caddyfile

{
    email info@mydomain.tld
    # enable DEBUG level for the default logger
    debug
}

# Nextcloud
nextcloud.mydomain.tld {
    # redir /.well-known/carddav /remote.php/dav/ 301
    # redir /.well-known/caldav /remote.php/dav/ 301

    handle_path /push/* {
        reverse_proxy notify_push:7867
    }

    reverse_proxy web:80
}

Explanation: Two places need to be adjusted in this Caddyfile:

- the e-mail address

- the domain

For example, if your domain is example.com, change the e-mail address to info@example.com or similar, and, because you are probably setting up a subdomain for Nextcloud, change the domain to nextcloud.example.com.

Caddy uses the e-mail address for the account with the certificate authority (Let's Encrypt), mainly in case there are problems with your certificates, so use an address you actually read. If you don't have an address on your domain yet, you can usually set one up in your domain provider's account.
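Because both places use the same placeholder mydomain.tld, a single substitution adjusts the e-mail address and the site address at once. A sketch under that assumption; set_caddy_domain is a hypothetical helper, and it writes to a temporary file instead of using sed -i, whose syntax differs between GNU and BSD sed.

```shell
# set_caddy_domain (hypothetical helper): replace the placeholder domain
# "mydomain.tld" everywhere in a Caddyfile.
# Arguments: <your domain> [file, default ./Caddyfile]
set_caddy_domain() {
  domain=$1
  file=${2:-Caddyfile}
  sed "s/mydomain\.tld/${domain}/g" "$file" > "$file.tmp" && mv "$file.tmp" "$file"
}
```

Usage: set_caddy_domain example.com turns info@mydomain.tld into info@example.com and nextcloud.mydomain.tld into nextcloud.example.com. Run it before the first start: the mv replaces the file, and a container that already has the single file bind-mounted keeps seeing the old version until it is recreated.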

.env

Global environment variables used by the whole proxy stack.

Use nano or vim to create the required files. Your favorite may need to be installed first. Beginners usually feel more comfortable with nano, but once you get the hang of it, vim is just great!

Create a file .env with your favorite editor and paste the following content: nano ${DOCKER_PROJECT_DIR}/proxy/.env

.env

# project name is used as container name prefix (folder name if not set)

COMPOSE_PROJECT_NAME=reverse-proxy

DOCKER_LOGGING_MAX_SIZE=5m

DOCKER_LOGGING_MAX_FILE=3

Proxy Stack (docker-compose.yml)

In contrast to the docker compose file of Nextcloud, the compose file for the reverse proxy is very compact.

Create it accordingly. Remember which directory you are in and where you should create the compose file. You are probably still under ${DOCKER_PROJECT_DIR}/proxy? Good!

nano docker-compose.yml

proxy/docker-compose.yml

---
networks:
  proxy:
    external: true

services:
  caddy:
    image: caddy:alpine
    pull_policy: always
    container_name: caddy
    hostname: caddy
    restart: unless-stopped
    ports:
      - "80:80"
      - "443:443"
    volumes:
      - ./Caddyfile:/etc/caddy/Caddyfile:ro
      - ./data:/data
      - ./logs:/logs
      - /etc/localtime:/etc/localtime:ro
      - /etc/timezone:/etc/timezone:ro
    logging:
      options:
        max-size: ${DOCKER_LOGGING_MAX_SIZE:?DOCKER_LOGGING_MAX_SIZE not set}
        max-file: ${DOCKER_LOGGING_MAX_FILE:?DOCKER_LOGGING_MAX_FILE not set}
    healthcheck:
      test: ["CMD", "wget", "--no-verbose", "--tries=1", "--spider", "127.0.0.1:2019/metrics"]
      interval: 10s
      retries: 3
      start_period: 5s
      timeout: 5s
    networks:
      proxy: {}
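The network proxy is declared with external: true, so Docker Compose will not create it, and docker compose up fails if it does not exist. If you have not created it during the preparation, the following sketch creates it only when it is missing; the helper name ensure_network is hypothetical.

```shell
# ensure_network (hypothetical helper): create an external Docker network
# once, if it does not exist yet. Argument: [network name, default "proxy"]
ensure_network() {
  name=${1:-proxy}
  if docker network inspect "$name" >/dev/null 2>&1; then
    echo "network $name already exists"
  else
    docker network create "$name" >/dev/null && echo "network $name created"
  fi
}
```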

File structure of the Reverse Proxy Stack

The file and folder structure should look like this. If you have forgotten something, add it now:

$ tree -a ${DOCKER_PROJECT_DIR}/proxy
├── Caddyfile
├── docker-compose.yml
└── .env
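If tree is not installed, a small check can report which of the three files is missing instead. A minimal sketch; the helper name check_proxy_files is hypothetical. The data and logs folders are not checked because Docker creates them on the first start.

```shell
# check_proxy_files (hypothetical helper): report missing files of the proxy
# stack. Argument: [stack directory, default current directory]
check_proxy_files() {
  dir=${1:-.}
  missing=0
  for f in Caddyfile docker-compose.yml .env; do
    if [ ! -f "$dir/$f" ]; then
      echo "missing: $dir/$f"
      missing=1
    fi
  done
  [ "$missing" -eq 0 ] && echo "all files present"
  return "$missing"
}
```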

Launch the Reverse Proxy Stack

First start of the proxy

Now is the time to start the stack of the reverse proxy: docker compose up -d

Tip 1: Before executing the command, make sure that ports 80 and 443 are forwarded on your router to your Docker host and that the host is reachable from the Internet. There must also be a DNS record for your domain that points to the IP of your Docker host. Otherwise, Caddy cannot obtain the Let's Encrypt certificate and the request fails. If Caddy retries too often, the certificate authority throttles the requests, and it can then take a very long time before you receive your certificate, even if you have fixed the error in the meantime.

Tip 2: The Internet is evil. Carelessly opening a port on your router without being aware of the dangers and the correct settings is like using public transport naked. This tutorial does not cover this area and assumes the necessary knowledge.
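To avoid burning certificate requests on a wrong DNS record, you can compare what your domain resolves to with the public IP you expect before starting Caddy. This is a sketch, assuming a host with getent (glibc-based distributions ship it); you have to supply the expected IP yourself, for example from your router. It only checks IPv4 DNS, not the port forwarding; an AAAA record pointing elsewhere can still break the certificate request. The helper name check_dns is hypothetical.

```shell
# check_dns (hypothetical helper): compare the IPv4 address(es) a domain
# resolves to with the IP you expect. Arguments: <domain> <expected IPv4>
check_dns() {
  domain=$1
  expected_ip=$2
  resolved=$(getent ahostsv4 "$domain" | awk '{print $1}' | sort -u)
  if [ -z "$resolved" ]; then
    echo "$domain does not resolve" >&2
    return 1
  fi
  # -x: whole line, -F: fixed string, so the dots are not regex wildcards
  if printf '%s\n' "$resolved" | grep -qxF "$expected_ip"; then
    echo "$domain -> $expected_ip OK"
  else
    echo "$domain resolves to: $resolved (expected $expected_ip)" >&2
    return 1
  fi
}
```

Usage: check_dns nextcloud.mydomain.tld 203.0.113.10 && docker compose up -d, where 203.0.113.10 stands for your public IP.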

Status of the Caddy Stack

Similar to the Nextcloud stack, you can track the status of your reverse proxy via docker compose logs -f.

Initial setup of Nextcloud via CLI

A few minutes later, you should be able to access your Nextcloud instance via your domain in the browser. Log in as the admin user with the password stored in ./secrets/nextcloud_admin_password.

A few setup steps cannot be automated (I'm not aware of a way to perform them the "Docker way"), so the following configuration steps remain before you can use some of the features.

Setup notify_push

Run the following commands to install and enable notify_push, aka "High Performance Backend for Files", which is definitely recommended:

docker compose exec app php occ app:install notify_push

docker compose up -d notify_push

docker compose exec app sh -c 'php occ notify_push:setup https://${OVERWRITEHOST}/push'

# check stats

docker compose exec app php occ notify_push:metrics

docker compose exec app php occ notify_push:self-test

Setup imaginary

I use the Imaginary container from the AiO project; it is widely used and should work without issues. If there are problems, only preview generation is affected, no important functionality, and you can solve them in your own time. For this reason I use the :latest Docker tag, which is usually not recommended.

# Review config

docker compose exec app php occ config:system:get enabledPreviewProviders

# Enable imaginary

docker compose exec app php occ config:system:set enabledPreviewProviders 0 --value 'OC\Preview\MP3'

docker compose exec app php occ config:system:set enabledPreviewProviders 1 --value 'OC\Preview\TXT'

docker compose exec app php occ config:system:set enabledPreviewProviders 2 --value 'OC\Preview\MarkDown'

docker compose exec app php occ config:system:set enabledPreviewProviders 3 --value 'OC\Preview\OpenDocument'

docker compose exec app php occ config:system:set enabledPreviewProviders 4 --value 'OC\Preview\Krita'

docker compose exec app php occ config:system:set enabledPreviewProviders 5 --value 'OC\Preview\Imaginary'

docker compose exec app php occ config:system:set preview_imaginary_url --value 'http://imaginary:9000'

# limit number of parallel jobs (adapt to your number of CPU cores)

docker compose exec app php occ config:system:set preview_concurrency_all --value 12

docker compose exec app php occ config:system:set preview_concurrency_new --value 8

Explanation: preview_concurrency_new should correspond to the number of cores of the CPU or the number of cores allocated to the VM. preview_concurrency_all should be twice the value of preview_concurrency_new, but can also be set lower.
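The rule above can be derived from the core count instead of typing the numbers by hand. A minimal sketch: nproc runs on the Docker host, so if you limit the CPUs of the app container, pass that number explicitly. The helper name preview_concurrency is hypothetical.

```shell
# preview_concurrency (hypothetical helper): print both settings following
# the rule new = cores, all = 2 x cores. Argument: [cores, default nproc]
preview_concurrency() {
  cores=${1:-$(nproc)}
  echo "preview_concurrency_new=$cores"
  echo "preview_concurrency_all=$((cores * 2))"
}
```

On a 6-core host, preview_concurrency 6 prints 6 for preview_concurrency_new and 12 for preview_concurrency_all; you can then pass these values to the two occ config:system:set commands.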

Side notes

Install apps

Install the apps you need with the occ command (faster and more reliable than through the web interface).

docker compose exec app php occ app:install user_oidc

docker compose exec app php occ app:install groupfolders

A small list of apps I recommend:

- twofactor_webauthn

- polls

- memories

- cfg_share_links
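To install several apps in one go, the occ app:install call can be wrapped in a loop that continues when one app fails, for example because it is already installed or not available for your Nextcloud version. A sketch following the occ calls above; the helper name install_apps is hypothetical.

```shell
# install_apps (hypothetical helper): install each app given as argument with
# occ and continue on failure. Returns non-zero if any installation failed.
install_apps() {
  failed=""
  for app in "$@"; do
    if docker compose exec -T app php occ app:install "$app"; then
      echo "installed: $app"
    else
      echo "failed: $app" >&2
      failed="$failed $app"
    fi
  done
  [ -z "$failed" ]
}
```

Usage: install_apps twofactor_webauthn polls memories cfg_share_links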

Logging and troubleshooting

docker compose logs #show all container logs

docker compose logs -f #show all container logs and follow them real-time

docker compose logs app #show logs of app container

Creating, Starting and Updating Containers

Unleash the Docker magic:

docker compose up -d

Docker Compose will download all of the necessary images, set up the containers, and start everything in the proper order.

A few notes about running these containers:

- You must be in the same folder as docker-compose.yml.

- If you make any changes to docker-compose.yml, run docker compose up -d again to automatically recreate the containers with the new configuration.

- To update your containers, run docker compose pull and then docker compose up -d. To remove the old images afterwards, run docker image prune --force. Remember to adjust the version in .env beforehand. However, Nextcloud can only be updated one major version at a time.

- If you have built an image with a Dockerfile, docker compose pull will fail to pull it. In that case, run docker compose build --pull before running docker compose up -d.

- To check the status of your containers, run docker compose ps.

- Persistent data (your Nextcloud data and database) is stored in the subfolders, so even if you delete your containers, your data is safe.
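The update steps (pull, recreate, prune) can be chained so that each step only runs if the previous one succeeded. A minimal sketch; the helper name update_stack is hypothetical, and it does not change the version in .env for you.

```shell
# update_stack (hypothetical helper): pull new images, recreate the changed
# containers and remove dangling images, stopping at the first failure.
# Bump the version in .env first, one Nextcloud major version at a time.
update_stack() {
  docker compose pull &&
    docker compose up -d &&
    docker image prune --force
}
```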

Nextcloud Office (Collabora integration)

I intentionally designed this system to run with a separate CODE (Collabora Online Development Edition) container. In my view, a separate CODE instance is easier to maintain, and it gives you better control over upgrades and more troubleshooting options. For installation and customization, you can follow the official Collabora documentation.

Backup / Restore / Remove

Very important topic: backup/restore is not covered here.

You should implement a proper backup strategy that also covers a complete outage of your Docker host (offline and off-site backup copies). Back up your persistent data stored in the Docker volume mounts. Database files are not consistent while the database is running, so use proper PostgreSQL backup tools (e.g. pg_dump) instead of copying the files.
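As a starting point for the database part, the following sketch dumps the database with pg_dump while Nextcloud is in maintenance mode. It assumes that the db container defines POSTGRES_USER and POSTGRES_DB (the variables of the official postgres image); adjust the command if your compose file differs. The helper name backup_db and the file name pattern are hypothetical. It does not replace a full backup of the data directory and the other mounts.

```shell
# backup_db (hypothetical helper): dump the Nextcloud database to
# <dir>/nextcloud-db-<timestamp>.sql with maintenance mode switched on.
# Argument: [target directory, default ./backup]
backup_db() {
  target_dir=${1:-./backup}
  file="$target_dir/nextcloud-db-$(date +%Y%m%d-%H%M%S).sql"
  mkdir -p "$target_dir" || return 1
  docker compose exec -T app php occ maintenance:mode --on || return 1
  # single quotes: the variables expand inside the db container
  if docker compose exec -T db sh -c 'pg_dump -U "$POSTGRES_USER" "$POSTGRES_DB"' > "$file"; then
    rc=0
  else
    rc=1
    rm -f "$file"
  fi
  # always leave maintenance mode again, even if the dump failed
  docker compose exec -T app php occ maintenance:mode --off
  [ "$rc" -eq 0 ] && echo "$file"
  return "$rc"
}
```

A plain SQL dump like this can be restored into an empty database with psql, e.g. docker compose exec -T db sh -c 'psql -U "$POSTGRES_USER" "$POSTGRES_DB"' < dumpfile.sql; test this on a scratch installation before you rely on it.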

Start from scratch

The following commands completely wipe out the installation, so you can easily start from scratch or test your restore steps.

Only continue if you really want to start from scratch and have a proper backup!

These commands silently remove all data and database files!

# change into your docker compose directory

cd ${DOCKER_PROJECT_DIR}/nextcloud

sudo rm -rf apps config data nextcloud db redis-session.ini web/data web/config

# change to your data directory!

DH_DATA_DIR=/hdd/nextcloud/data

sudo rm -r "$DH_DATA_DIR"
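Because these commands are destructive, you may prefer to wrap them in a function that stops the stack first and asks for explicit confirmation. A sketch, assuming it is run from ${DOCKER_PROJECT_DIR}/nextcloud and removes the same paths as the commands above; the helper name wipe_stack is hypothetical.

```shell
# wipe_stack (hypothetical helper): stop the stack, ask for confirmation and
# then remove the same files and folders as the commands above.
# Argument: <your Nextcloud data directory>
wipe_stack() {
  data_dir=$1
  if [ -z "$data_dir" ]; then
    echo "usage: wipe_stack /path/to/nextcloud/data" >&2
    return 2
  fi
  printf 'Type YES to delete all Nextcloud data in %s and %s: ' "$PWD" "$data_dir"
  read -r answer
  if [ "$answer" != "YES" ]; then
    echo "aborted"
    return 1
  fi
  # stop the containers before their files disappear
  docker compose down || return 1
  sudo rm -rf apps config data nextcloud db redis-session.ini web/data web/config
  sudo rm -rf "$data_dir"
}
```

Usage: wipe_stack /hdd/nextcloud/data, where the path stands for your own data directory.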

Q/A

Even though I was not affected myself, this information should not be lost. It is only intended as a hint, not to unsettle you.