Hi!

We started running the Nextcloud app as a prebuilt Docker image on Univention Corporate Server.

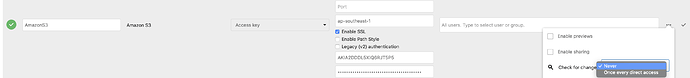

All of our data lives on AWS S3, mounted as external (not primary) storage, and our buckets hold about 180 GB. There are fewer than 10 users, all using either the Windows client or the web interface. Files in the buckets are never changed externally, only by Nextcloud.

We received the AWS bill for last month and it says:

“Amazon Simple Storage Service EUC1-Requests-Tier1” ($0.0054 per 1,000 PUT, COPY, POST, or LIST requests): 48,217,810.000 Requests / 260.38 USD

“Amazon Simple Storage Service EUC1-Requests-Tier2” ($0.0043 per 10,000 GET and all other requests): 46,015,181.000 Requests / 19.79 USD

So we paid about 280 USD for requests alone, and only a few dollars for the storage itself.
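For scale, here is the arithmetic behind those totals. It assumes the second line item is priced per 10,000 requests (the only rate that reproduces the billed 19.79 USD) and, for the average request rate, a 30-day month:

$$
\begin{aligned}
\frac{48{,}217{,}810}{1{,}000} \times 0.0054\ \text{USD} &\approx 260.38\ \text{USD} \\
\frac{46{,}015{,}181}{10{,}000} \times 0.0043\ \text{USD} &\approx 19.79\ \text{USD} \\
260.38\ \text{USD} + 19.79\ \text{USD} &= 280.17\ \text{USD} \\
\frac{48{,}217{,}810 + 46{,}015{,}181}{30 \times 86{,}400\ \text{s}} &\approx 36\ \text{requests/s}
\end{aligned}
$$

That is roughly 36 S3 requests every second, around the clock, for fewer than 10 users.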

I suspect a misconfiguration is causing this huge number of requests.

Any help, or at least an explanation of Nextcloud's behaviour, is appreciated.

Thanks in advance!

Nextcloud version: 16.0.5-0

Operating system and version: Univention Corporate Server (Debian-based) 4.4-3 errata423

Univention currently supports updates up to Nextcloud 16.0.6-0.

config.php:

```php
<?php
// Secrets below are redacted placeholders.
// S3 is attached through the External Storage app, whose mounts are stored
// in the database, so no S3 ('objectstore') settings appear in this file.
$CONFIG = array (
  'passwordsalt' => 'foobarfoobarfoobarfoobarfoobar',
  'secret' => 'foobarfoobarfoobarfoobarfoobarfoobarfoobarfoobar',
  'trusted_domains' =>
  array (
    0 => 'nextcloud.int.example.com',
    1 => '172.16.30.21',
    2 => 'nextcloud.example.com',
  ),
  'datadirectory' => '/var/lib/univention-appcenter/apps/nextcloud/data/nextcloud-data',
  'dbtype' => 'pgsql',
  'version' => '16.0.5.1',
  'overwrite.cli.url' => 'https://nextcloud.int.example.com/nextcloud',
  'dbname' => 'nextcloud',
  'dbhost' => '172.17.42.1',
  'dbport' => '5432',
  'dbtableprefix' => 'oc_',
  'dbuser' => 'nextcloud',
  'dbpassword' => 'foobarfoobarfoobarfoobarfoobarfoobarfoobarfoobarfoobarfoobar',
  'installed' => true,
  'instanceid' => 'abcdefg12345',
  'updatechecker' => 'false',
  'memcache.local' => '\\OC\\Memcache\\APCu',
  'overwriteprotocol' => 'https',
  'overwritewebroot' => '/nextcloud',
  'htaccess.RewriteBase' => '/nextcloud',
  'ldapIgnoreNamingRules' => false,
  'ldapProviderFactory' => 'OCA\\User_LDAP\\LDAPProviderFactory',
  'trusted_proxies' =>
  array (
    0 => '172.17.42.1',
  ),
  'maintenance' => false,
  'loglevel' => 2,
  'mail_smtpmode' => 'smtp',
  'mail_smtpsecure' => 'ssl',
  'mail_sendmailmode' => 'smtp',
  'mail_from_address' => 'nextcloud',
  'mail_domain' => 'example.com',
  'mail_smtpauthtype' => 'LOGIN',
  'mail_smtpauth' => 1,
  'mail_smtphost' => 'mail.example.com',
  'mail_smtpport' => '587',
  'mail_smtpname' => 'mailuser',
  'mail_smtppassword' => 'foobarfoobarfoobarfoobarfoobarfoobarfoobar',
);
```