Hi all,

I’ve been trying to sort this out for well over a week and it is exhausting. I have made over 100 test calls and followed many guides in German and English, plus what I think was one in Russian.

Firstly, my topology: two servers are involved, a VPS and a VM on Proxmox in a segregated VLAN. The VPS uses Nginx Proxy Manager (NPM) and Tailscale to proxy to the Nextcloud AIO Docker VM on the Apache port, and this works perfectly fine. At first, I also used the NPM Stream function to expose port 3478 from the VM to the VPS. Calls would work, but I suspect they were going over the tailnet (giving the illusion of being on the same network), and we had quite a few issues with people dropping off calls or audio not being heard by random participants. This is why I moved to coturn, as it seems to be a better solution.

It should be noted that the VM with Nextcloud is in a VLAN with no access to my main lab or to the MacBook I am using for testing. I would assume calls would simply connect over WAN and ignore any local connections, since most of our users are also outside the network.

Below is my coturn docker-compose:

```yaml
services:
  coturn:
    container_name: coturn
    image: coturn/coturn
    network_mode: host
    tmpfs:
      - "/var/lib/coturn"
    command: "--listening-port 3478 --fingerprint --use-auth-secret --static-auth-secret=***** --realm=***** --total-quota=0 --bps-capacity=0 --stale-nonce --no-multicast-peers --verbose --min-port=49100 --max-port=49200 --prometheus"
    restart: always
```
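
Because the container runs with `--use-auth-secret`, clients are expected to present time-limited credentials derived from `--static-auth-secret` (the TURN REST API scheme coturn implements) rather than a fixed username and password. Nextcloud Talk is expected to derive these from the secret entered in its TURN server settings, so that secret has to match `--static-auth-secret` exactly. Below is a minimal Python sketch of the derivation for testing the TURN server by hand. It assumes coturn's default `:` separator and HMAC-SHA1; the user label `talk-test`, the one-hour TTL and the placeholder secret are arbitrary.

```python
import base64
import hashlib
import hmac
import time
from typing import Optional, Tuple


def turn_rest_credentials(
    secret: str, user: str, ttl_seconds: int = 3600, now: Optional[float] = None
) -> Tuple[str, str]:
    """Derive time-limited TURN credentials for coturn's --use-auth-secret mode.

    username = "<expiry unix timestamp>:<user label>"
    password = base64(HMAC-SHA1(secret, username))
    """
    issued_at = int(time.time() if now is None else now)
    username = f"{issued_at + ttl_seconds}:{user}"
    digest = hmac.new(secret.encode(), username.encode(), hashlib.sha1).digest()
    return username, base64.b64encode(digest).decode("ascii")


if __name__ == "__main__":
    # Placeholder secret (assumption): substitute the value passed as --static-auth-secret.
    name, password = turn_rest_credentials("REPLACE_WITH_STATIC_AUTH_SECRET", "talk-test")
    print(name)
    print(password)
```

The printed pair can then be entered, together with a `turn:` URI for the server, into a WebRTC test page such as the Trickle ICE sample to see whether a `relay` candidate is ever gathered.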

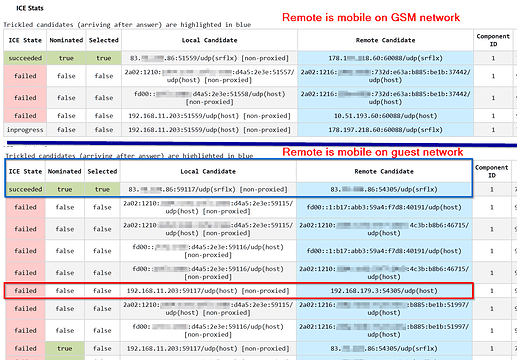

Aside from some strange 401 Unauthorized errors in the log, nothing stands out to me as a problem. The WAN IPs of both users are shown and there are connection attempts, but no connection is ever established. Telnet to port 3478 succeeds, and the UDP range (49100-49200) has been opened.
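
Telnet only exercises TCP, whereas STUN/TURN and WebRTC media normally use UDP, so a successful telnet to 3478 does not by itself show that UDP 3478 reaches coturn. One way to check the UDP path is to send a single STUN Binding request (RFC 5389/8489) and see whether a reply comes back; coturn typically answers Binding requests without credentials unless STUN has been disabled. This is a minimal Python sketch under that assumption: IPv4 only, a single attempt, no retransmission.

```python
import os
import socket
import struct
from typing import Optional, Tuple

MAGIC_COOKIE = 0x2112A442
BINDING_REQUEST = 0x0001
BINDING_SUCCESS = 0x0101
XOR_MAPPED_ADDRESS = 0x0020


def build_binding_request(txn_id: bytes) -> bytes:
    """20-byte STUN header: type, body length (0, no attributes), magic cookie, transaction ID."""
    return struct.pack("!HHI", BINDING_REQUEST, 0, MAGIC_COOKIE) + txn_id


def parse_xor_mapped_address(resp: bytes, txn_id: bytes) -> Optional[Tuple[str, int]]:
    """Return (IPv4, port) from XOR-MAPPED-ADDRESS, or None if the reply does not match."""
    if len(resp) < 20:
        return None
    msg_type, msg_len, cookie = struct.unpack("!HHI", resp[:8])
    if msg_type != BINDING_SUCCESS or cookie != MAGIC_COOKIE or resp[8:20] != txn_id:
        return None
    pos, end = 20, min(len(resp), 20 + msg_len)
    while pos + 4 <= end:
        attr_type, attr_len = struct.unpack("!HH", resp[pos:pos + 4])
        value = resp[pos + 4:pos + 4 + attr_len]
        # value layout: reserved byte, family (0x01 = IPv4), X-Port, X-Address
        if attr_type == XOR_MAPPED_ADDRESS and len(value) >= 8 and value[1] == 0x01:
            port = struct.unpack("!H", value[2:4])[0] ^ (MAGIC_COOKIE >> 16)
            addr = struct.unpack("!I", value[4:8])[0] ^ MAGIC_COOKIE
            return socket.inet_ntoa(struct.pack("!I", addr)), port
        pos += 4 + attr_len + (-attr_len % 4)  # attribute values are padded to 4 bytes
    return None


def stun_probe(host: str, port: int = 3478, timeout: float = 3.0) -> Optional[Tuple[str, int]]:
    """Send one Binding request over UDP; None means no valid reply within the timeout."""
    txn_id = os.urandom(12)
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.settimeout(timeout)
        sock.sendto(build_binding_request(txn_id), (host, port))
        try:
            data, _ = sock.recvfrom(2048)
        except socket.timeout:
            return None
    return parse_xor_mapped_address(data, txn_id)
```

Run from a machine outside the network, e.g. `stun_probe("turn.example.com")` (hypothetical hostname). A returned `(ip, port)` is the public address coturn saw; `None` suggests UDP 3478 is not reaching coturn or the reply is being dropped on the way back.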

Now in Nextcloud Talk (isn’t there a separate Docker container for this?), I have seen some strange log entries like the ones below, including mDNS resolver errors. Unfortunately, Google has not given me anything concrete.

```
[WARN] [210253568013808] ICE failed for component 1 in stream 1, but let's give it some time... (trickle pending, answer received, alert not set)
[WARN] [4705455986961398] Error resolving mDNS address (ab23f4fe-eeef-4187-a25e-be9f6649d9af.local): Error resolving “ab23f4fe-eeef-4187-a25e-be9f6649d9af.local”: Try again
[WARN] [210253568013808] ICE failed for component 1 in stream 1, but we're still waiting for some info so we don't care... (trickle pending, answer received, alert not set)
[WARN] [210253568013808] ICE failed for component 1 in stream 1, but we're still waiting for some info so we don't care... (trickle pending, answer received, alert not set)
[WARN] [210253568013808] ICE failed for component 1 in stream 1, but we're still waiting for some info so we don't care... (trickle pending, answer received, alert not set)
[WARN] [210253568013808] ICE failed for component 1 in stream 1, but we're still waiting for some info so we don't care... (trickle pending, answer received, alert not set)
[WARN] [210253568013808] ICE failed for component 1 in stream 1, but we're still waiting for some info so we don't care... (trickle pending, answer received, alert not set)
[WARN] [210253568013808] ICE failed for component 1 in stream 1, but we're still waiting for some info so we don't care... (trickle pending, answer received, alert not set)
[WARN] [210253568013808] ICE failed for component 1 in stream 1, but we're still waiting for some info so we don't care... (trickle pending, answer received, alert not set)
```
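
The `.local` name in the mDNS warning is how browsers commonly obfuscate host candidates, and the repeated `ICE failed` lines (which look like they come from the Janus gateway behind the Talk high-performance backend) suggest no candidate pair succeeded. One way to narrow this down is to check which candidate types a client actually gathers, for example from `a=candidate` lines copied out of `chrome://webrtc-internals` or the Trickle ICE sample page: if no `typ relay` candidate ever appears, the browser is not getting an allocation from coturn. A small Python sketch that tallies candidate types, assuming the standard ICE candidate attribute layout (RFC 8839); the sample lines in any test are made up.

```python
from collections import Counter
from typing import Iterable


def candidate_types(lines: Iterable[str]) -> Counter:
    """Count ICE candidate types (host, srflx, prflx, relay), plus mDNS-obfuscated host candidates."""
    counts: Counter = Counter()
    for line in lines:
        line = line.strip()
        if line.startswith("a="):
            line = line[2:]
        if not line.startswith("candidate:"):
            continue  # skip non-candidate SDP lines
        fields = line.split()
        # candidate:<foundation> <component> <transport> <priority> <address> <port> typ <type> ...
        if len(fields) < 8 or fields[6] != "typ":
            continue
        cand_type = fields[7]
        counts[cand_type] += 1
        if cand_type == "host" and fields[4].endswith(".local"):
            counts["host (mDNS)"] += 1
    return counts
```

A result with `host` and `srflx` entries but no `relay` would point at the TURN side (credentials, reachability of 3478 or the 49100-49200 relay range) rather than at Talk itself.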

Is there an additional step I may be missing? At this point I am tempted to forward port 3478 on OPNsense straight to the VM, but I would like to avoid this and have everything go via the VPS.

I did notice during testing that if I switch to my local VLAN, the webcam loads during the test call (no loading spinner), but the other user still cannot connect either way. The firewall showed the Nextcloud VM trying to connect to my laptop directly (despite them being on different VLANs). The other test user is entirely external, on another network and therefore connecting over WAN; no connection with them has succeeded.

Happy to explain further and give more details!