I have installed NC with the help of the VM file (sized at 1TB) created by Daniel a while ago.

Since then I have changed a couple of things: I upgraded PHP to a recent version and added an extra 1TB disk. The PHP upgrade didn’t go without problems, but with the help of @eehmke it worked out fine.

This time I would like to move the user data, because I am not 100% happy with how this works at the moment.

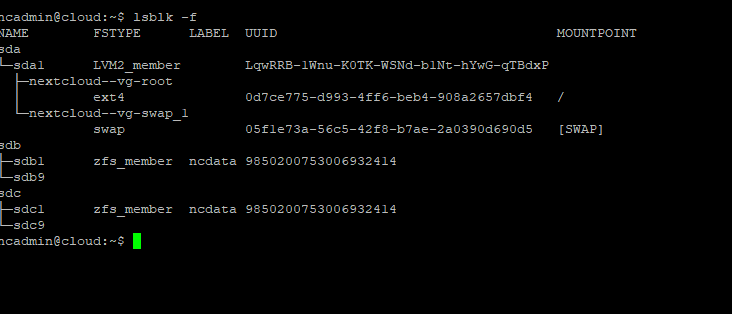

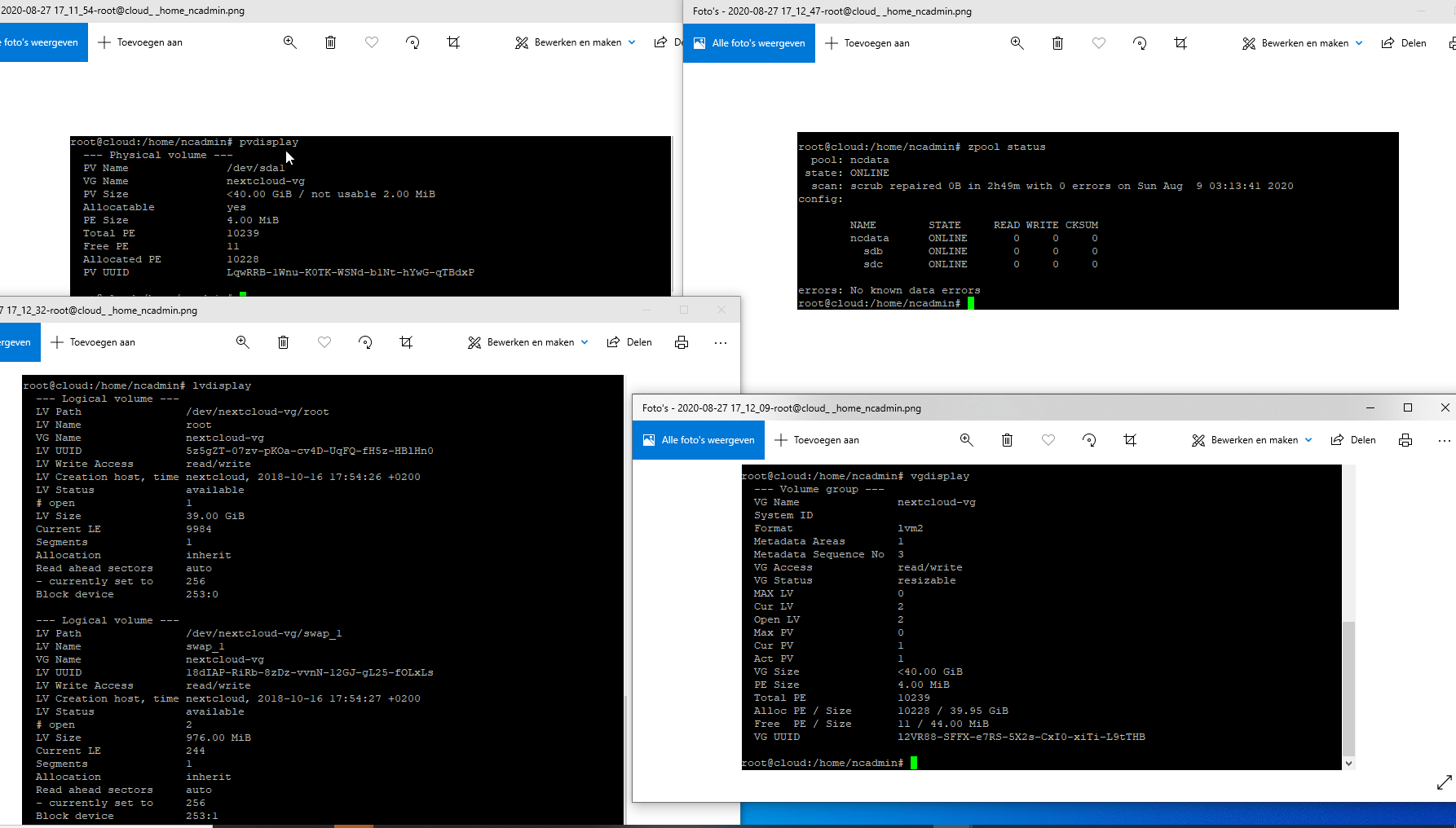

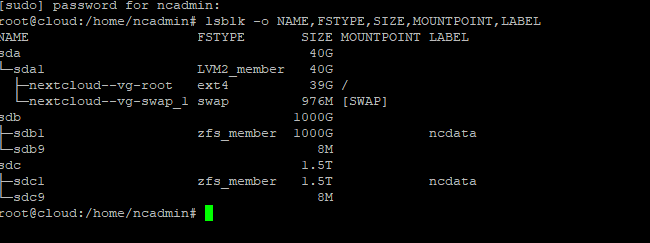

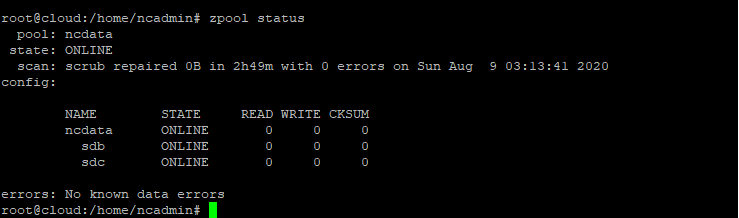

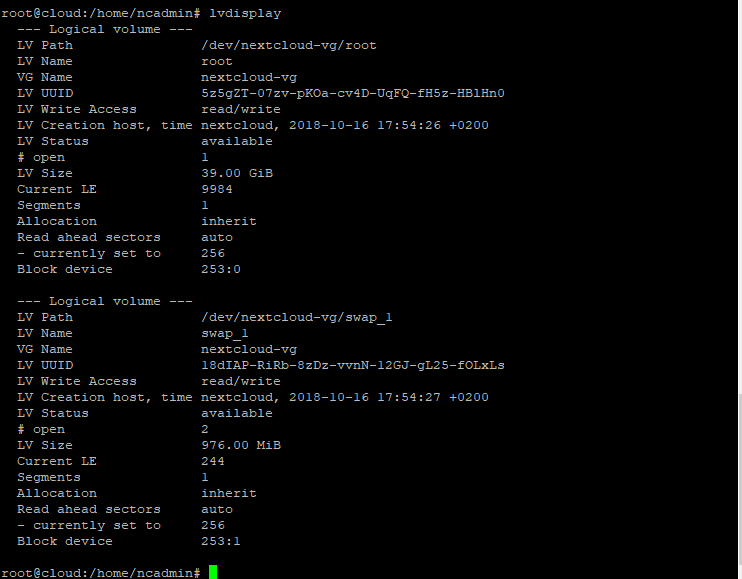

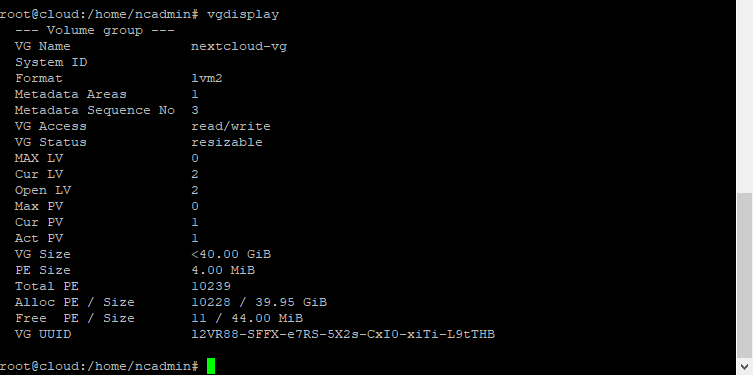

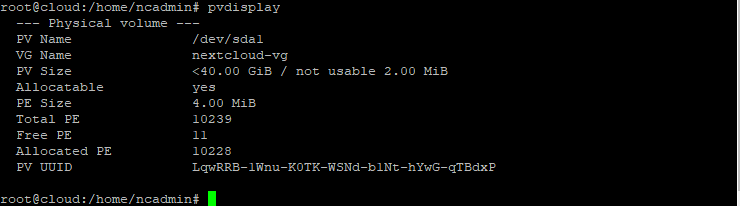

Current situation:

My Nextcloud runs as a VM on an ESX7 server (cluster of 2 with shared storage). It is running on 8x1.92TB Micron enterprise SSDs at 10Gbit.

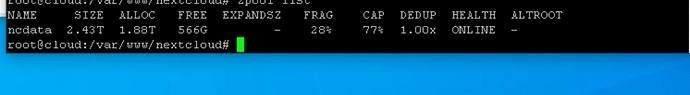

Because of the VM file bought from Daniel I had 2 hard disk files; later I tried to connect a third. These disks are in a pool, which is how Daniel designed it. The problem is that I cannot remove the extra 1TB disk I added myself. This extra storage never worked (the extra space never showed up in NC, even after I made it a member of the pool), but because of the pool I cannot simply remove the disk, as far as I know. After adding the third disk it turned out I didn’t need the extra space, so I left it the way it was (with the third disk).

I would like to simply move all the user data to a new virtual hard disk (thin provisioned).

But when I look at the topics about moving the data, they are often full of issues and problems. Since my PHP upgrade topic worked out great (again mostly thanks to eehmke), I was hoping to do this move in much the same way.

So what is the best way to do this, step by step? @eehmke, it would be much appreciated if you could help out again.

I have learned quite a lot from the PHP upgrade topic; I even upgraded two more Nextcloud installations just for fun to see if I could do it.