
Nextcloud version (eg, 20.0.5): 21.0.2

Operating system and version (eg, Ubuntu 20.04): Raspbian GNU/Linux 10 (buster)

Apache or nginx version (eg, Apache 2.4.25): nginx/1.14.2

PHP version (eg, 7.4): v8.0.5

The issue you are facing:

The cron.php script hangs, causing 100% CPU usage.

Is this the first time you’ve seen this error? (Y/N): Y

Steps to replicate it:

- Run cron.php every 5 minutes from a cron job

On the first run, and then every 12 hours, cron.php hangs and runs forever unless I kill it manually, keeping my CPU at 100%. At all other times it runs fine.

I’ve checked date.timezone in /etc/php/8.0/fpm/php.ini and /etc/php/8.0/cli/php.ini, and it’s set correctly in both. I’ve also set the correct system time zone for logging with timedatectl set-timezone.
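
For anyone who wants to repeat the php.ini check, here is a minimal sketch. The helper name `ini_timezone` is hypothetical, and it only reads the ini files named above; it does not query the running PHP-FPM process.

```shell
#!/bin/sh
# Print the active (uncommented) date.timezone value from a php.ini file.
# Lines starting with ';' are comments in php.ini and are skipped.
# If the setting appears more than once, the last one is printed.
ini_timezone() {
    sed -n 's/^[[:space:]]*date\.timezone[[:space:]]*=[[:space:]]*//p' "$1" | tail -n 1
}

# Paths taken from the post; adjust for other PHP versions.
for ini in /etc/php/8.0/fpm/php.ini /etc/php/8.0/cli/php.ini; do
    if [ -r "$ini" ]; then
        printf '%s: %s\n' "$ini" "$(ini_timezone "$ini")"
    fi
done
```

Both lines should print the same zone, and it should match what `timedatectl` reports for the system.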

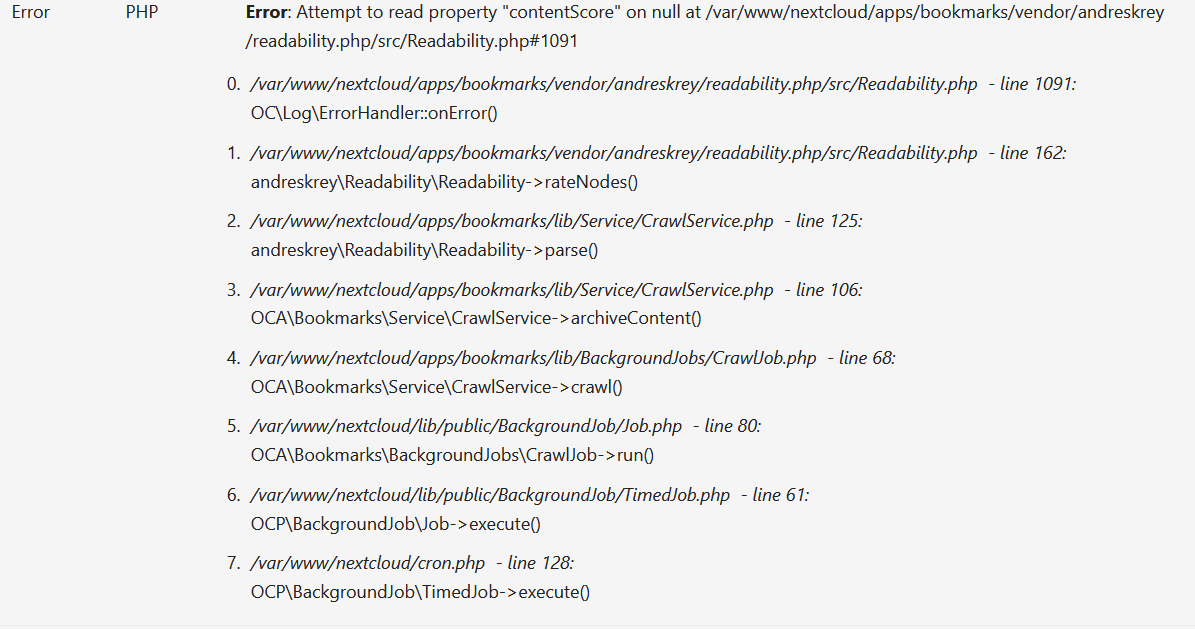

I’ve checked the Nextcloud log and it’s clean.

I attached strace to the process, and the following set of lines keeps repeating indefinitely until I stop it with Ctrl+C:

    strace: Process 7485 attached
    gettimeofday({tv_sec=1621744604, tv_usec=617061}, NULL) = 0
    gettimeofday({tv_sec=1621744604, tv_usec=617257}, NULL) = 0
    gettimeofday({tv_sec=1621744604, tv_usec=617765}, NULL) = 0
    openat(AT_FDCWD, "/var/nc_data/nextcloud.log", O_WRONLY|O_CREAT|O_APPEND|O_LARGEFILE, 0666) = 8
    fstat64(8, {st_mode=S_IFREG|0640, st_size=516978614, ...}) = 0
    _llseek(8, 0, [0], SEEK_CUR) = 0
    _llseek(8, 0, [0], SEEK_CUR) = 0
    write(8, "{"reqId":"u0xEDjzmdegIXMaIQmZz","..., 1768) = 1768
    close(8)
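
The trace shows each loop appending a 1768-byte entry to /var/nc_data/nextcloud.log, and the fstat64 line reports st_size=516978614 (roughly 517 MB), so the newest entries in that file may be worth a second look. Below is a rough sketch for summarizing them. It assumes the log is in the usual one-JSON-object-per-line format with an `"app"` field; the function name `recent_log_apps` is hypothetical.

```shell
#!/bin/sh
# Count which "app" values appear in the last N lines of a Nextcloud log.
# Usage: recent_log_apps LOGFILE [N]   (N defaults to 1000)
# Output: one "COUNT "app":"NAME"" line per app, most frequent first.
recent_log_apps() {
    tail -n "${2:-1000}" "$1" \
        | grep -o '"app":"[^"]*"' \
        | sort \
        | uniq -c \
        | sort -rn \
        | awk '{ print $1, $2 }'
}

# Example, using the log path from the strace output:
#   recent_log_apps /var/nc_data/nextcloud.log 1000
```

If one app dominates the count while cron.php is spinning, printing a few of its entries with `tail -n 5 /var/nc_data/nextcloud.log` would show the message being repeated.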

cron.php runs as a php process. This started with PHP 8, so I suspect it may be an obscure PHP 8 bug. I noticed the issue because my fanshim LED turns red when the server is hot, and it was red with no load, so I investigated. Without the red LED I would have been unaware of the issue, so I’m curious whether others are experiencing it without knowing.

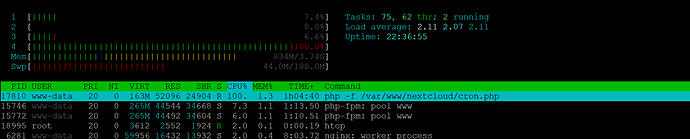

For others running PHP 8, I urge you to run sudo htop once a day and sort by CPU to check whether cron.php is hanging. If it is, you should see something similar to the image above; in that case, please report it in this thread. Thank you.
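
As a non-interactive alternative to watching htop, the check could be sketched like this. The function name `stuck_cron` and the 600-second threshold are assumptions for illustration; `etimes` is the elapsed-seconds column in procps-ng `ps`.

```shell
#!/bin/sh
# Print "PID ELAPSED_SECONDS %CPU" for every cron.php process that has been
# running longer than the given number of seconds (default: 600).
# Reads lines in `ps -eo pid=,etimes=,pcpu=,args=` format on stdin.
stuck_cron() {
    awk -v limit="${1:-600}" '
        index($0, "cron.php") && ($2 + 0) > (limit + 0) { print $1, $2, $3 }
    '
}

# Example: list cron.php runs older than 10 minutes.
#   ps -eo pid=,etimes=,pcpu=,args= | stuck_cron 600
```

With cron.php scheduled every 5 minutes, any run that shows up here has outlived at least one later invocation, which matches the hang described above.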

I’m looking for help troubleshooting this. Thank you!