How can I use `occ` (or something else) to trigger a rescan of external storage whenever a change is made?

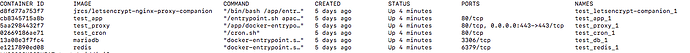

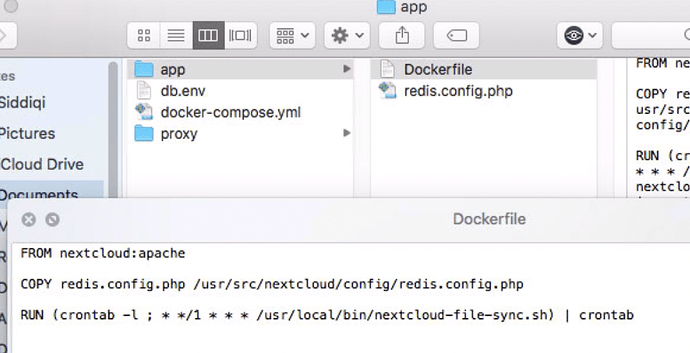

I have Nextcloud running in Docker on my Mac, using this docker-compose file. I made one small tweak to it so that Nextcloud can access my external storage (an external hard drive):

```yaml
app:
  build: ./app
  restart: always
  volumes:
    - nextcloud:/var/www/html
    - /Volumes/LaCie:/Volume   # added: mounts the external hard drive
  environment:
    - VIRTUAL_HOST=email@emaple.com
    - LETSENCRYPT_HOST=email@emaple.com
    - LETSENCRYPT_EMAIL=email@emaple.com
    - MYSQL_HOST=db
  env_file:
    - db.env
  depends_on:
    - db
    - redis
  networks:
    - proxy-tier
    - default
```

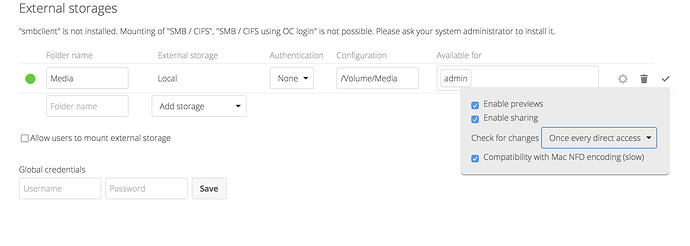

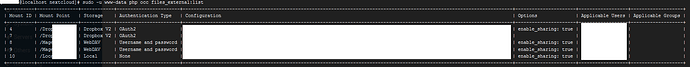

I then successfully added the drive as Local external storage:

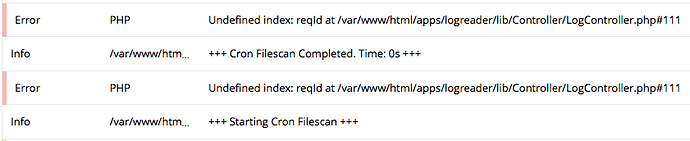

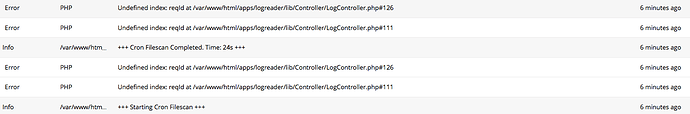

Immediately after setting up the local storage, I can access all of my files, and the folder sizes show as pending. However, after some time only the folders I have opened remain. For example, if I open my TV show folder, click on SpongeBob and select Season 1, all episodes from Season 1 load and the size updates. But if I don't open the other seasons (e.g. Seasons 2, 3 and 4), those folders disappear from Nextcloud.

Also, if I try to create a new folder with the same name (e.g. Season 2), Nextcloud says that Season 2 already exists, and the folder then shows up.

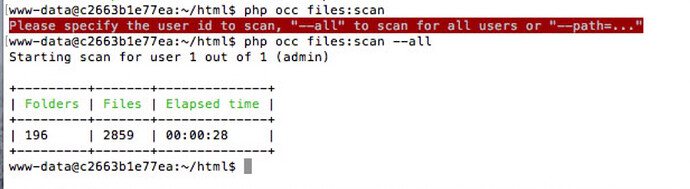

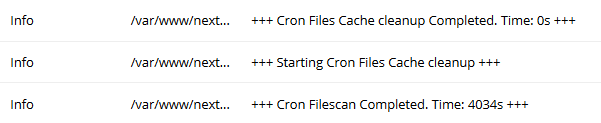

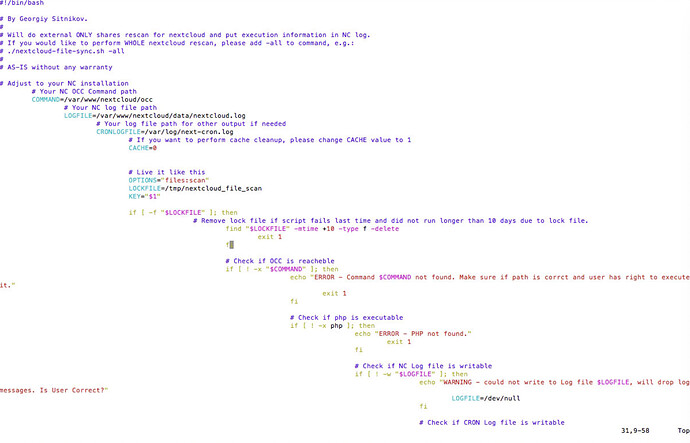

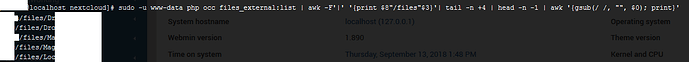

Running `php occ files:scan --all` as the `www-data` user appears to have done the job: all of my external storage files are now showing. However, is there a better way to automate this? I think running a full scan every time would use a lot of resources. Is there an alternative solution?
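One possible way to make the rescan lighter, sketched here under assumptions, is to limit `files:scan` to the folder that changed with its `--path` option instead of `--all`, and to run it from the Mac host through `docker compose exec` as `www-data`. The service name `app` comes from the compose file; the Nextcloud user `admin`, the mount point name `LaCie`, and the helper names `nc_scan_path` and `nc_scan` are hypothetical placeholders (the variables can be overridden from the environment). Older installs may need `docker-compose` instead of `docker compose`.

```shell
# Hypothetical helpers for rescanning part of the external storage from the host.
# Assumptions (placeholders, adjust them): compose service "app", Nextcloud user
# "admin", external storage mount point named "LaCie".
NC_SERVICE="${NC_SERVICE:-app}"
NC_USER="${NC_USER:-admin}"
NC_MOUNT="${NC_MOUNT:-LaCie}"

# Build the Nextcloud-internal path that files:scan --path expects:
# /<user>/files/<mount point>[/<subfolder>]
nc_scan_path() {
  if [ -n "$1" ]; then
    printf '/%s/files/%s/%s\n' "$NC_USER" "$NC_MOUNT" "$1"
  else
    printf '/%s/files/%s\n' "$NC_USER" "$NC_MOUNT"
  fi
}

# Rescan only the given subfolder (or the whole mount if none is given),
# running occ as www-data inside the container. -T disables TTY allocation,
# so the call also works non-interactively (e.g. from cron).
nc_scan() {
  docker compose exec -T -u www-data "$NC_SERVICE" \
    php occ files:scan --path="$(nc_scan_path "$1")"
}
```

Usage sketch: save it as e.g. `nc-scan.sh` next to `docker-compose.yml`, then from that directory run `. ./nc-scan.sh && nc_scan "SpongeBob/Season 2"`. Because only that subtree is walked, this is typically much lighter than `files:scan --all`; the same call could also be scheduled from the host's crontab, although that scheduling is only a suggestion.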