Nextcloud version: 25.0.10

Operating system and version: Gentoo

Apache version: Apache/2.4.54

PHP version: 8.1

The issue you are facing:

We have a fleet of computers running older Nextcloud desktop clients and one computer running the latest version (3.12.0). We’ve held the rest back because of the nasty bug a few years ago that removed file timestamps, so we’ve been cautious about upgrading.

The new client is much faster at uploading. With 3 MB files we are hitting 135 Mbps upload. That traffic goes through a low-end MikroTik router that does a lot of packet mangling, so we suspect this peak figure is limited by the router’s CPU hitting 100%, not by the servers, the client or Nextcloud.

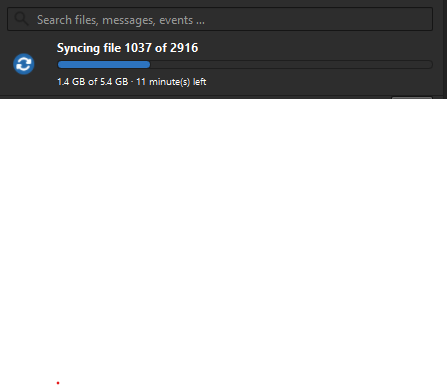

However, with this latest client version we see it upload 100 files in a few seconds, then hang for about a minute without uploading anything, then quickly upload another 100 files, hang again, and repeat this cycle. While the client is hung, there are no load spikes on any of the servers (MySQL, application servers or storage servers).

What makes this stranger is that the older clients don’t do this: they upload continuously with no breaks, just at a lower speed (about 35-40 Mbps). Because of the long pauses, the new client actually ends up slower overall, even though its peak speed is substantially higher.
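To make the "faster peak, slower overall" point concrete, here is a small back-of-the-envelope calculation. It is a sketch under stated assumptions, not a measurement: it assumes decimal units (1 MB = 8 Mbit), that each burst of 100 files of 3 MB runs at the observed 135 Mbps peak, and that each pause lasts about 60 seconds. The function name `effective_mbps` is just an illustrative helper.

```python
def effective_mbps(files_per_burst: int, file_size_mb: float,
                   burst_mbps: float, pause_s: float) -> float:
    """Average upload rate over one burst-plus-pause cycle, in Mbps.

    Assumption: decimal units (1 MB = 8 Mbit) and every burst runs at burst_mbps.
    """
    burst_mbit = files_per_burst * file_size_mb * 8  # data sent per burst, in megabits
    burst_s = burst_mbit / burst_mbps                # time spent actually transferring
    return burst_mbit / (burst_s + pause_s)          # total data / total cycle time


# Assumed values taken from the observations above: 100 x 3 MB files, 135 Mbps peak, ~60 s pause.
new_client = effective_mbps(files_per_burst=100, file_size_mb=3, burst_mbps=135, pause_s=60)
print(f"new client: {new_client:.1f} Mbps")  # ≈ 30.9 Mbps, below the old clients' 35-40 Mbps
```

The pause dominates: with 2400 Mbit per burst and a 60 s pause, even an infinitely fast burst could not average more than 2400 / 60 = 40 Mbps, which is roughly what the old clients manage without any pauses.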

We have Redis configured as the file-locking memcache.

If we run the new client and the old clients uploading at the same time, the old clients keep uploading with no drop in speed while the new client is frozen.

Apache is configured with mod_php. The maximum upload and POST sizes (`upload_max_filesize` / `post_max_size`) are 2GB, and I raised `max_file_uploads` to 1000. It had been set to 100, which I thought might explain the hang every 100 files, but changing it and restarting Apache didn’t fix it.

Is anyone else having this issue? I suspect it’s a client issue, since the older clients don’t show the same behavior, but I’m also wondering whether something is misconfigured on the server side that the new client doesn’t like.

I haven’t pasted any log files because no errors are logged during the freezes.