G’day there,

I’ve been working in business technology for the past 19-20 years, developing and supporting small to mid-scale system deployments on a wide range of platforms, from Novell NetWare and Linux with NFS shares to, much more commonly, Windows in an Active Directory/Domain Controller setup with SMB shares.

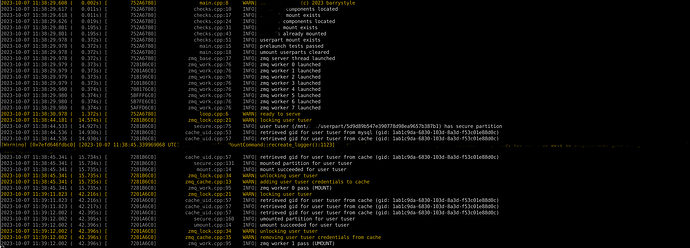

As of this year I’ve been working on a public Nextcloud deployment, and for the most part it has been enjoyable. Currently I use an encrypted partition system: a real-time interface written in C++ using ZMQ handles the calls coming directly from Nextcloud, some custom PHP ties it all together, and LDAP manages the actual authentication. This was born out of necessity, because Nextcloud keeps every individual user folder underneath a single base data root folder. It has also required a lot of small hacks to point Nextcloud ‘at the right place’ rather than at the data root.

I’m interested to know how other people are managing with this issue, as it feels like the elephant in the room.

Nextcloud is promoted as having expandable infrastructure, particularly for large-scale deployments; however, to be honest, I see a single ‘root’ data mount as entirely incompatible with this concept. Windows makes this very easy with redirected Desktop and Documents folders and mapped drives that are set per logon, and Novell had this baked in as a core concept too. It has been suggested that a user’s base folder can be set in LDAP, but I’m unsure whether this is only an ‘additional’ storage area or the user’s base home folder. Documentation of any kind on this topic is hard to find or out of date.

Is there any documentation available showing definitively where the user base path/roots are defined?

Or is it a matter of writing a module with specific overrides for this situation?

barrystyle

Hello.

Your post seems very strange to me. You seem to have hacked the core severely in order to get a custom solution. Still, I do not understand the reasoning behind several of your requirements (most prominently the real-time interface, but others as well).

What is your objection to a single folder with all user data underneath?

I know of very big installations that run well. You need more than a Raspberry Pi for those, of course, but that is obvious.

You can, however, create your own app that integrates a custom storage environment into the core. In that respect, the core is fairly generic and extensible.

So, unless I understand your requirements, I cannot and will not give you any good advice on the topic.

Hi christianlupus,

Sorry if my point wasn’t clear.

To get the system working ‘so far’ with the volume of users I support, I have had to ‘hack at the core’ to get things running correctly. I doubt that many other implementers would go to these extremes, so my point was really ‘how are others doing it?’

For instance, if I wanted to split my user base across three separate filesystems for both redundancy and performance, how would I do so?

So far, to mount a separate custom partition per user, I’ve modified the method `getHomePath()` in `apps/user_ldap/lib/User/User.php` (to return the ‘true’ user mount). I’ve also had to ‘shim’ other parts of the code (mainly `lib/private/User/Session.php`) to allow real-time mounting/unmounting of the user partitions as required.

I’d love to write a custom module to make this process easier, but there seems to be no documentation covering the exact portions of the code that handle the user data paths; what I’ve found so far has come from guesswork and debugging.

Any information you have on this topic would be appreciated.

barrystyle

Peanut gallery over here, but I can’t help but feel you are hacking into the Nextcloud software to solve what is really a Linux server problem. I think the solution you are looking for would be better handled with Btrfs or the old LVM/mdadm combo. That will let you use RAID-level redundancy (assuming you have multiple drives) and logically partition and carve out your storage however you like (each user folder can be its own partition if you want). Then you can point your NC data folder at the folder that contains all those partitions, and NC would be none the wiser.

(Granted I’m glossing over a lot of key logistical challenges, but that would be the main idea)

My point is, I think you are forgetting how Linux does its directory structure. Any directory can be a mount point for somewhere else. There is no need to hack Nextcloud to point at multiple different places for its data.
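
A quick way to confirm such a layout is actually in place is to check which folders under the data directory are separate mount points. This is a minimal sketch that only inspects the filesystem and does not touch Nextcloud; the data directory path you pass in is an assumption about your install. Note that `os.path.ismount` compares the folder with its parent, so it cannot reliably detect bind mounts taken from the same filesystem.

```python
import os
from pathlib import Path


def user_folder_mounts(data_dir: str) -> dict[str, bool]:
    """Report, for each folder directly under the Nextcloud data directory,
    whether it is its own mount point (True) or sits on the parent
    filesystem (False).

    Non-user folders such as appdata_<instanceid> are reported as well;
    plain files like nextcloud.log and hidden entries are skipped.
    """
    result = {}
    for entry in sorted(Path(data_dir).iterdir()):
        if entry.is_dir() and not entry.name.startswith("."):
            # A per-user LVM/Btrfs volume mounted here lives on a different
            # device than the data directory, so ismount() returns True.
            result[entry.name] = os.path.ismount(entry)
    return result
```

For example, `user_folder_mounts("/var/www/nextcloud/data")` would list every user folder with `True` next to the ones that have their own volume.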

This is perfect, and yep, I can just move the mount points to where the data directory is… thank you!

The only issue I am seeing here is that it is not directly possible to split a 1500-user NC instance into 3 partitions of 500 users each. You could use symlinks or bind mounts (which gets heavy at that scale).

The question is, though: is it a good idea, and is it required? It is quite a hassle to keep NC and the OS mounts in sync. So, if the storage is well maintained and fast, why would splitting it into chunks bring any benefit? I admit some caching (at the OS level) might be a good idea, but that is more of an admin topic.
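
To make the bind-mount variant concrete, here is a minimal sketch that spreads users round-robin over three filesystems (500 each for 1500 users) and builds one `mount --bind` command per user. The filesystem paths and data directory are hypothetical, the commands are only generated (running them needs root), and the resulting plan should be stored rather than recomputed, because adding a user shifts the round-robin order. It also shows where the hassle comes from: every new user means another mount to create and keep in sync.

```python
def assign_users(users, filesystems):
    """Spread users round-robin over the given filesystems.

    Sorting first makes the assignment deterministic for a fixed user list.
    Adding a user later shifts subsequent assignments, so persist the plan.
    """
    return {user: filesystems[i % len(filesystems)]
            for i, user in enumerate(sorted(users))}


def bind_mount_commands(plan, data_dir):
    """Build one `mount --bind` command per user, mapping the user's folder
    on its assigned filesystem onto <data_dir>/<user>."""
    return [["mount", "--bind", f"{fs}/{user}", f"{data_dir}/{user}"]
            for user, fs in sorted(plan.items())]
```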

That is a fair point. I’m not really sure of the benefit of splitting the users into separate partitions either. Personally, I keep the whole data folder on its own single LVM volume, to which I can add storage as needed (until I need to migrate to a larger array, which LVM can help with too).

Separate partitions that are mounted and unmounted on demand are just a preventative measure, in case of path traversal exploits or user compromise.

Having all the user data sitting in one unencrypted partition just doesn’t make much sense to me. Even if someone were able to gain remote access to the physical disk, they wouldn’t be able to get at the data stored in the encrypted partitions.

After reverting the home path methods to standard and disabling file caching, the setup now runs perfectly.

Hi @barrystyle,

I have one word for you: Ceph. Well, actually three words: Object Storage or Ceph. My preferred way is Ceph: lots of speed if set up on the right hardware, and lots of redundancy. And no hacking of the core required.

With Ceph, you can have 10K users and 800TB of files and not have to worry about splitting anything up. If you set up a cluster of 3 storage servers, it will automatically replicate everything 3 times, and you can even have two of the servers go down without losing any data.
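
With Ceph's default replicated-pool settings (`size` 3, `min_size` 2), the data does survive two of three hosts failing, but client I/O to the affected placement groups pauses until a second copy is back online. The small function below spells out that arithmetic. It is a sketch, not Ceph code, and assumes one replica per host (the default host failure domain) in a three-host cluster, so every failed host held a copy.

```python
def replica_status(size: int, min_size: int, hosts_down: int) -> tuple[bool, bool]:
    """Return (data_survives, io_available) for a replicated Ceph pool.

    Assumes each of the `size` replicas sits on a different host and that
    every failed host held one of them.
    """
    remaining = max(size - hosts_down, 0)
    data_survives = remaining >= 1          # at least one copy left
    io_available = remaining >= min_size    # Ceph serves I/O only at/above min_size
    return data_survives, io_available
```

Lowering `min_size` to 1 keeps I/O going with a single copy, at the cost of writing to data that has no redundancy at that moment.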

Sebastian

Hi sebastiank,

The product we have assembled has a rather unique use case. In this particular case we did indeed look at Ceph, and we agree 100% that it is a superior solution; however, the storage layer we went with is fairly lightweight, allows setting a ‘storage goal’ (hint), and we were able to rewrite its ‘agent’ to run as an internal thread inside our feature application.

You did mention using it as Object Storage… do you mean setting it up to contain the entirety of the /data mount, or some other configuration?

Most probably, he is thinking of the S3 storage interface in Nextcloud.

Just a general remark: the server will need access to all files, regardless of whether they are encrypted, mounted on demand or whatever. So, in my understanding, there is no point in trying to protect against a threat that is in fact a normal use case.

If you do not trust your server infrastructure, you will have trouble finding a working solution. You have mentioned a few times that you want to avoid file traversals and the like. What is the idea behind it? What is the threat? Can it really be countered by the intended modifications?

Just a few ideas to check the motivations before investing too much effort into something.

Christian

Initially there were a few issues regarding user access, but once file caching was disabled they were minimal. A few edge cases, such as external/shared files and users who have actually logged off, still cause minor issues.

I’ve written a separate handler to do emergency mounts where required, but other than that there really haven’t been many issues.

One of the core features of our product is a decentralized, trustless filesystem. This is why we have separate user partitions ‘on top of’ the storage layer.

Apologies for the vagueness in some of my responses; once the product is launched, I will be able to give a bit more detail.

Hi

@christianlupus is correct. S3 storage is the same as object storage. S3 is just AWS’s brand name for it.

Also, Ceph is pretty lightweight; I run it on some very old hardware and it just works. It all depends on how much speed you want. But honestly, you can build a pretty cheap, super fast 3-server cluster these days for around 12k. And for a company that wants to run 1500 users on this platform, that 12-15k should be a drop in the bucket.
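
For a sense of scale, spreading the quoted 12-15k hardware budget over the 1500 users (currency as in the post, unspecified) gives a one-off cost per user of:

$$
\frac{12\,000}{1\,500} = 8
\qquad\text{to}\qquad
\frac{15\,000}{1\,500} = 10 \quad \text{per user}
$$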

Not sure I understand this. Let’s ignore all the other security features NC has built in, best practices and whatnot, and just take this particular precaution at face value. All partitions are mounted while the server is running. I don’t think that translates into any better security, and it all has to be accessible at all times by the web server user. In fact, there are plenty of processes that need to access data while the user isn’t explicitly logged in (any service the user sets up, including desktop applications). So unmounting whole user folders when people log out really wouldn’t be a good idea.

This is the exact thing I was referring to earlier in the thread. I’m aware it’s ownCloud, but it ‘could’ve’ been Nextcloud. Having independently encrypted volumes per user would have meant that the intruder only had access to the data of users who were logged in at the time (with an active volume mount).

Having all of the user accounts share one common pool of disk space is not security-minded.

This sounds more like an issue inherited from the design than a sound principle.

The exploits used against ownCloud would not work on Nextcloud. And again, everything has to be accessible by the web service at runtime to work. It doesn’t matter whether it’s in the same folder, on a separate partition, or on a separate server. So where exactly is the security improvement? If it’s being accessed by another user, that’s a permissions issue, and if it’s being accessed by root, then you’ve got bigger problems.

Yes, everything has to be accessible by the web server when it is required. If the user’s encrypted volume is not mounted (when the user is not logged in), then the web server doesn’t need access to it.

Additionally, even if the attacker gets root access to the server or to the encrypted volume’s data… then what? They still don’t have the key to decrypt the data.

The key is provided to the service responsible for mounting the encrypted volumes when the user logs in (an additional piece of data supplied during authentication). Once the volume is unmounted, the key is no longer held in memory.

The takeaway point here is that the key is not stored on the server, and the unencrypted data is only ever made available to the web server while the volume is mounted.
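
The key lifecycle described above (key supplied at login, volume mounted, key discarded at unmount) can be sketched as a small state holder. This is an illustration under stated assumptions, not the poster's C++ service: the `VolumeSession` class and its method names are hypothetical, and the actual mount/unmount operations are injected callables (for example wrappers around `cryptsetup`). Python cannot guarantee that no other copy of the key survives in memory, so the zeroing here is best effort only.

```python
from typing import Callable


class VolumeSession:
    """Hypothetical per-user session that holds a volume key only while the
    user's encrypted volume is mounted."""

    def __init__(self, mount: Callable[[str, bytes], None],
                 unmount: Callable[[str], None]) -> None:
        self._mount = mount
        self._unmount = unmount
        self._keys: dict[str, bytearray] = {}

    def login(self, user: str, key_material: bytes) -> None:
        # The key arrives with the authentication request and is never
        # written to disk; keep a mutable copy so it can be overwritten later.
        key = bytearray(key_material)
        self._mount(user, bytes(key))
        self._keys[user] = key  # only stored once the mount succeeded

    def logout(self, user: str) -> None:
        key = self._keys.get(user)
        if key is None:
            return  # not mounted: nothing to do
        self._unmount(user)  # if this raises, the key stays with the mounted volume
        del self._keys[user]
        # Best effort: overwrite our copy. Other copies (e.g. the bytes passed
        # to mount) may still exist until garbage-collected.
        for i in range(len(key)):
            key[i] = 0

    def is_mounted(self, user: str) -> bool:
        return user in self._keys
```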

Based on the use case you have described, what you want is a variation on an SFTP service. And I wouldn’t bother with partitions; I would use encrypted disk image files. They would be a lot easier to manage and back up. It would take some scripting to do exactly what you want. Maybe use the user’s password or RSA key as the image encryption key. But it should be doable, and a lot easier than hacking away at the Nextcloud core.
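
As a rough illustration of the disk-image approach, the sketch below only builds the shell commands for one user's LUKS image: create and format it once, then open and mount it at login and unmount and close it at logout. It assumes a Linux host with `cryptsetup`, `truncate` and ext4, run as root; the image path, mapper name and mount point are hypothetical. The passphrase (for example the user's password) is fed on stdin via `--key-file -`, which keeps it out of the process list; LUKS uses it to unlock a keyslot rather than as the raw volume key.

```python
import subprocess
from pathlib import Path


def create_commands(image: Path, mapper_name: str, size: str = "10G") -> list[list[str]]:
    """One-off: create a sparse image file, LUKS-format it and put ext4 on it."""
    device = f"/dev/mapper/{mapper_name}"
    return [
        ["truncate", "-s", size, str(image)],
        ["cryptsetup", "luksFormat", "--batch-mode", "--key-file", "-", str(image)],
        ["cryptsetup", "open", "--key-file", "-", str(image), mapper_name],
        ["mkfs.ext4", device],
        ["cryptsetup", "close", mapper_name],
    ]


def open_commands(image: Path, mapper_name: str, mountpoint: Path) -> list[list[str]]:
    """At login: unlock the image and mount it where Nextcloud expects it."""
    return [
        ["cryptsetup", "open", "--key-file", "-", str(image), mapper_name],
        ["mount", f"/dev/mapper/{mapper_name}", str(mountpoint)],
    ]


def close_commands(mapper_name: str, mountpoint: Path) -> list[list[str]]:
    """At logout: unmount, then remove the dm-crypt mapping (and its key)."""
    return [["umount", str(mountpoint)], ["cryptsetup", "close", mapper_name]]


def run(commands: list[list[str]], passphrase: bytes) -> None:
    """Execute the commands as root. Only the cryptsetup steps that read
    `--key-file -` receive the passphrase, on stdin."""
    for cmd in commands:
        reads_key = cmd[0] == "cryptsetup" and "--key-file" in cmd
        subprocess.run(cmd, input=passphrase if reads_key else None, check=True)
```

After mounting, the folder would still need to be owned by the web server user, and Nextcloud would still need to index its contents.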

Nextcloud is a SaaS platform. The data it stores isn’t just files on the hard drive; it’s data in the database (contacts, calendar events, emails, chats, passwords, whatever the heck Circles is). It’s syncing data across multiple machines and multiple user applications in as safe and secure a manner as possible. It can’t do that if data is periodically locked away whenever the user isn’t signed into the web app. It needs to regularly index and cache data to do that reliably and quickly. If you throw a file onto the server in a user folder, it isn’t just going to appear in the Nextcloud app; it needs to build an entry for the file in its database, and it’s really that entry the user sees.
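
In practice, that indexing step for files placed on disk outside Nextcloud is what `occ files:scan` does, so an admin who drops files into a user's folder (or mounts a volume there) would run it afterwards. The sketch below just builds that call. The `occ` path and the `www-data` web server user are assumptions about a typical Debian/Ubuntu install, and `user` is the Nextcloud internal user ID, which for LDAP users may be a UUID rather than the login name.

```python
import subprocess


def files_scan_command(user: str, occ: str = "/var/www/nextcloud/occ",
                       web_user: str = "www-data") -> list[str]:
    """Build the occ call that (re)indexes one user's files so they get
    database entries and show up in the clients."""
    return ["sudo", "-u", web_user, "php", occ, "files:scan",
            f"--path=/{user}/files"]


def rescan(user: str, **kwargs: str) -> None:
    """Run the scan; raises CalledProcessError if occ fails."""
    subprocess.run(files_scan_command(user, **kwargs), check=True)
```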

[EDIT 2K+1] Maybe the SFTP idea is not that simple, but I can’t help but feel there has to be a way.