TL;DR: Use the Hansson VM image with the main storage on an NFS share.

Introduction: Got started with Nextcloud about 2 years ago (Ubuntu 18/NC 14) when I wanted to part ways with Drive/Dropbox for storing & sharing with friends/family (homelab). Already had an ESXi box serving other duties, so a vm instance seemed like a good fit.

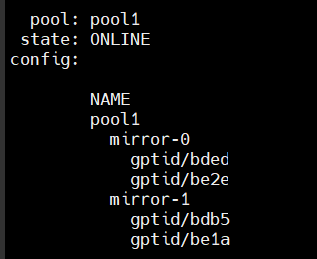

This solution worked well enough but had caveats. Veeam backups kept growing larger and larger: 10GB of actual storage was turning out 80+ GB backup images. As I understand it this is a byproduct of using zfs: changes to the file system still consume storage space even if the file is marked erased. The existing backup solution was to create a vm image every 3 months. Not the best strategy in case of datastore failure. That particular vm was based on Ubuntu 18, and in-place updates to 20.x resulted in some things breaking. It made sense to back up the nc data and start over.

Hansson has a current vm out based on most recent NC and Ubuntu with a wonderful installation script. In fact the whole instance is very well thought out and implemented. However, the same caveats as above would apply.

Time to try my hand at a home nas to simplify backup functions from various devices. The Truenas instance lives on the same esxi box. I know there’s the option to run NC in a sandbox, but given NC is exposed to the internet I’m not comfortable with this idea. Instead NC resides in its own vm, on a vswitch that has no external access. Only NC and the firewall software have access to this vswitch.

NC has an option for external storage. One thought was to keep data in the NC VM but actual user content (pics, videos, etc) on the nas. Reading up on this, there seem to be issues with keeping the database in sync with file changes. I suppose in my case this wouldn’t be a problem, given only the NC interface is used to interact with this share on the nas. That still leaves the headache of keeping the NC data backed up regularly.

Why not just dump the whole NC datadirectory folder to the nas? The guide that follows describes how. I’m far from an expert in ubuntu/linux. Maybe a seasoned novice if that.

Needed:

- Hansson NC vm deployment OVA file - Nextcloud VM – T&M Hansson IT AB

- Configured nfs share on file server. For sake of consistency I named this `.../ncdata` and assigned user/group www-data and uid/gid 33/33.

Process:

1. Import the ova into esxi. Once imported, adjust memory/cpu to your preference. Mine is configured with 2 vCPUs and 4GB ram.

2. While still in the vm editor, delete `hard disk 2`. The NAS mount will be used in its place.

2.5) For testing purposes, generate a snapshot at this point. Will save time redeploying ova if something goes wrong.

The following steps are best done using an ssh client. I prefer mobaxterm but putty will work too.

3. Power on the vm and allow it to boot. Connect to it using ssh.

4. Log in as `ncadmin`/`nextcloud`. At the first prompt hit `ctrl-c` to exit the startup script. Type `sudo -i`. Use the same password and `ctrl-c` again. You should now be at a `#` prompt.

5. Add the following to the `/etc/multipath.conf` file. Ref: syslog flooded with multipath errors every 5 seconds · Issue #1847 · nextcloud/vm · GitHub

       blacklist {
           devnode "^(ram|raw|loop|fd|md|dm-|sr|scd|st)[0-9]*"
           devnode "^sd[a-z]?[0-9]*"
       }

   Restart multipathd:

       service multipathd restart
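If this step gets repeated (for example after rolling back to the snapshot from step 2.5 and starting over), it is easy to end up with the blacklist pasted in twice. Below is a minimal sketch that appends it only when the file has no blacklist section yet. The helper name `add_multipath_blacklist` is made up for illustration, and the check is a plain grep for a line starting with `blacklist {`, which assumes the config has no such section in some other layout.

```shell
#!/usr/bin/env bash
# Append the blacklist from step 5 to a multipath config file,
# but only if the file has no blacklist section yet.
# add_multipath_blacklist is a hypothetical helper name.
add_multipath_blacklist() {
    local conf="$1"
    if grep -Eq '^[[:space:]]*blacklist[[:space:]]*\{' "$conf" 2>/dev/null; then
        echo "blacklist already present in $conf"
        return 0
    fi
    cat >> "$conf" <<'EOF'
blacklist {
    devnode "^(ram|raw|loop|fd|md|dm-|sr|scd|st)[0-9]*"
    devnode "^sd[a-z]?[0-9]*"
}
EOF
    echo "blacklist appended to $conf"
}

# Usage (as root):
#   add_multipath_blacklist /etc/multipath.conf && service multipathd restart
```

Running it a second time leaves the file unchanged, so it is safe to keep in a setup script.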

6. Remove zfs (I think this should do it)

       systemctl stop zfs.target
       systemctl stop zfs-import-cache
       systemctl stop zfs-mount
       systemctl stop zfs-import.target
       systemctl disable zfs.target
       systemctl disable zfs-import-cache
       systemctl disable zfs-mount
       systemctl disable zfs-import.target
       rm -r /etc/systemd/system/zfs*
       rm /etc/systemd/system/zed.service
       apt remove zfsutils-linux
       rm /etc/cron.d/zfs-auto-snapshot
       rm /etc/cron.d/zfsutils-linux
       systemctl daemon-reload
       systemctl reset-failed

7. Install nfs components

       apt install nfs-common

8. Edit `/etc/idmapd.conf` to reflect your lan domain name

       Domain = lan.domain

   (or whatever yours is called)

9. Clear the nfs idmapping cache

       nfsidmap -c
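For anyone who would rather script the idmapd edit than open an editor, here is a rough sketch. It assumes GNU sed (as shipped with Ubuntu), a config file with a `[General]` section, and a domain made up only of letters, digits, dots and hyphens. The function name `set_idmap_domain` is hypothetical.

```shell
#!/usr/bin/env bash
# set_idmap_domain FILE DOMAIN
# Point the Domain setting in an idmapd.conf-style file at DOMAIN.
# Hypothetical helper; assumes GNU sed and a plain domain name.
set_idmap_domain() {
    local conf="$1" domain="$2"
    if grep -Eq '^[#[:space:]]*Domain[[:space:]]*=' "$conf"; then
        # Replace the first Domain line, whether or not it is commented out.
        sed -i -E "0,/^[#[:space:]]*Domain[[:space:]]*=.*/s//Domain = ${domain}/" "$conf"
    else
        # No Domain line at all: add one right after the [General] header.
        sed -i "/^\[General\]/a Domain = ${domain}" "$conf"
    fi
}

# Usage (as root):
#   set_idmap_domain /etc/idmapd.conf lan.domain && nfsidmap -c
```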

10. Edit `/etc/fstab` to reflect the nfs mount

        {ip of nas}:/mnt/fatcow/ncdata /mnt/ncdata nfs4 defaults 0 0

10.5) Mount the share

    mount -a
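Before going further it may be worth confirming that the share really is mounted and that the VM sees it as owned by www-data (33/33). In my understanding, a mismatched idmapd domain commonly shows up as the share being owned by nobody/nogroup instead. A small sketch of such a check follows. `check_owner` is a hypothetical helper, and it relies on GNU `stat`.

```shell
#!/usr/bin/env bash
# check_owner PATH UID GID
# Succeeds only if PATH is owned by UID:GID (numeric). Hypothetical helper.
check_owner() {
    local path="$1" want_uid="$2" want_gid="$3" have
    have="$(stat -c '%u:%g' "$path")" || return 2
    if [ "$have" = "${want_uid}:${want_gid}" ]; then
        echo "OK: $path is owned by $have"
    else
        echo "MISMATCH: $path is owned by $have, expected ${want_uid}:${want_gid}" >&2
        return 1
    fi
}

# Usage on the VM after mount -a:
#   mountpoint -q /mnt/ncdata && check_owner /mnt/ncdata 33 33
```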

11. On the nas I assigned `maproot user/group` as `root/wheel` under `sharing/nfs/path`. This is needed so the root functions of the install script can adjust permissions on the data folder. Open to suggestions on how to do this differently.

12. `.ocdata` needs to exist in the data folder for the install script to work.

        touch /mnt/ncdata/.ocdata

13. Trigger the installation script by logging out entirely.

        exit
        exit

14. Log back in as `ncadmin`/`nextcloud`. You’ll be prompted for a password again, `nextcloud`.

15. Allow the installation script to run. Install whatever modules you want. Make sure to set `timezone`.

Assuming everything above completed successfully the server should now be fully functional with data directory residing on the nas. In my case I still needed to transfer data from the old NC instance.

There are quite a few backup/restore methods. I chose @Bernie_O’s ncupgrade script (BernieO/ncupgrade: Bash script to perform backups and manual upgrades of a local Nextcloud installation based on the Nextcloud documentation - ncupgrade - Codeberg.org).

The script handles both backup and restore, supports all 3 database engines (Postgres support is fully working), and supports hook scripts that run before the database restore.

I used the following command line to generate backup in the old instance. Three archive files are created - database, nc www folder (/var/www/nextcloud), and user data folder (/mnt/ncdata).

    ./ncupgrade /var/www/nextcloud -w apache2 -ob -bd {destination path}

Destination path can be local or network share. Bulk of my files were in the user data folder, ~7GB worth. Database and NC archives were relatively small. You will need to either transfer these files to the new instance or mount a path so archive files are accessible.
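Since the archives have to survive a copy between machines (or a trip over a network share), a checksum manifest is a cheap way to confirm nothing got truncated on the way. The sketch below is not part of ncupgrade. It simply records a SHA-256 sum for every regular file in the backup directory, whatever the archives happen to be called, and checks them again on the other side. The function names are hypothetical and it assumes GNU coreutils/findutils.

```shell
#!/usr/bin/env bash
# write_manifest DIR: record a SHA-256 checksum for every regular file
# directly inside DIR (the manifest itself is skipped).
write_manifest() {
    local dir="$1"
    ( cd "$dir" && find . -maxdepth 1 -type f ! -name SHA256SUMS -print0 \
        | sort -z | xargs -0 -r sha256sum > SHA256SUMS )
}

# verify_manifest DIR: re-check the files in DIR against the manifest.
# Returns non-zero if any file is missing or differs.
verify_manifest() {
    local dir="$1"
    ( cd "$dir" && sha256sum --check --quiet SHA256SUMS )
}

# Usage:
#   old instance:  write_manifest "{destination path}"
#   new instance:  verify_manifest "{source path}"
```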

Command line to use on new deployment after completing steps 1-15 above.

    ./ncupgrade /var/www/nextcloud/ -w apache2 -rb -bd {source path} -hs ./hook.sh

The hook script is needed to adjust the database and redis passwords; the database restore will fail otherwise. config.php will also be updated to add the ip of the new instance to the trusted_domains array. Recall that the new instance has newly generated passwords (from the install script).

My hook.sh script. Credit to @Bernie_O for the trusted_domains code.

    #!/usr/bin/env bash

    # Restore psql database password from config.php
    dbname="$(getvalue_from_configphp "dbname")"
    dbuser="$(getvalue_from_configphp "dbuser")"
    dbpassword="$(getvalue_from_configphp "dbpassword")"
    printf '%s\n' "+ Restoring database password to '${dbpassword}'"
    sudo -u postgres psql > /dev/null 2>&1 <<END
    ALTER ROLE $dbuser WITH PASSWORD '${dbpassword}';
    END

    # Correct redis password
    REDISPASS="$(getvalue_from_configphp "password")"
    REDISCFG="/etc/redis/redis.conf"
    REDISPASS2=$(grep "requirepass " "$REDISCFG" | awk -F" " '{print $2}')
    if [ "$REDISPASS" != "$REDISPASS2" ]; then
        sed -i 's/requirepass .*$/requirepass '"$REDISPASS"'/' "$REDISCFG"
        printf '%s\n' '' "+ Redis password updated in ${REDISCFG} to ${REDISPASS}"
    else
        printf '%s\n' "+ Redis password __NOT__ updated,"
        printf '%s\n' "+ ${configphp} password is ${REDISPASS},"
        printf '%s\n' "+ ${REDISCFG} password is ${REDISPASS2}"
    fi

    # Flush redis (the running server still uses the old password until restarted)
    redis-cli -a "${REDISPASS2}" --no-auth-warning -s /var/run/redis/redis-server.sock -c FLUSHALL > /dev/null 2>&1
    service redis-server restart

    # Update config.php with new ip
    # path to 'occ' in the Nextcloud directory:
    occ="/var/www/nextcloud/occ"

    # read trusted_domains from config.php into array (array index might not be incremental):
    while read -r line; do
        trusted_domains+=(${line})
    done <<<"$(sudo -u www-data php "${occ}" config:system:get trusted_domains)"

    # replace array trusted_domains with a variable (to delete the array from config.php):
    sudo -u www-data php "${occ}" config:system:set trusted_domains

    # replace variable again with an array containing the original trusted_domains (array index is now incremental, starting at 0):
    for (( i=0; i<${#trusted_domains[@]}; i++ )); do
        sudo -u www-data php "${occ}" config:system:set trusted_domains ${i} --value="${trusted_domains[${i}]}"
    done

    # add an additional trusted domain (index ${i} is now at number of elements and doesn't have to be incremented here as we started with 0):
    sudo -u www-data php "${occ}" config:system:set trusted_domains ${i} --value="$(hostname -I | awk -F" " '{print $1}')"
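One thing to watch in the redis part of the hook: the password is dropped straight into a `sed` replacement, so a password containing `/`, `&` or a backslash would break the substitution or get mangled. The generated passwords I saw never tripped this, so treat the following as an optional hardening sketch rather than something the hook needs. Both function names are hypothetical, and it does not handle passwords containing spaces or newlines.

```shell
#!/usr/bin/env bash
# escape_sed_replacement STRING
# Backslash-escape the characters that are special on the right-hand side
# of a sed s/.../.../ command: backslash, "/" (the delimiter) and "&".
escape_sed_replacement() {
    printf '%s' "$1" | sed -e 's|[\\/&]|\\&|g'
}

# set_requirepass FILE PASSWORD
# Same substitution as in the hook script, with the password escaped first.
set_requirepass() {
    local conf="$1" pass="$2" esc
    esc="$(escape_sed_replacement "$pass")"
    sed -i "s/requirepass .*\$/requirepass ${esc}/" "$conf"
}

# Usage inside the hook, in place of the plain sed line:
#   set_requirepass "$REDISCFG" "$REDISPASS"
```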

Congrats if you’ve made it this far! The new vm deployment’s data folder, containing your old nc data, now resides on the nas. As mentioned earlier, I’m open to suggestions on improving or optimizing the process. These steps were successfully tested multiple times.