My lab set-up is running Nextcloud (NC) 28.0.2 on a CentOS 7 virtual machine, with desktop client 3.11.1 on Windows 11. I use it for experimentation and testing. I’ve just unzipped a printer driver file, which rapidly generated 1,983 files totalling only 170MB, so LOTS of very small files.

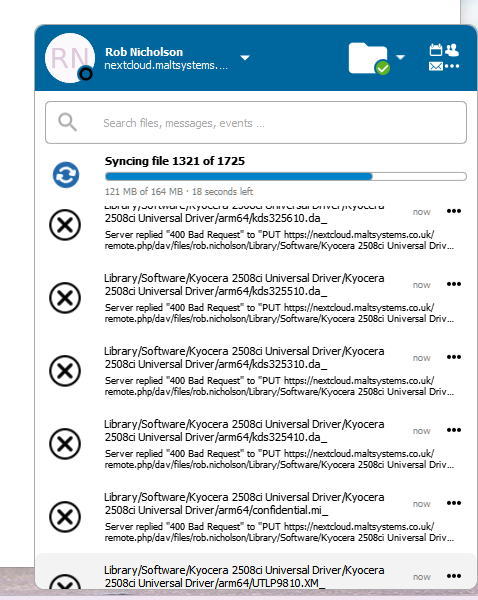

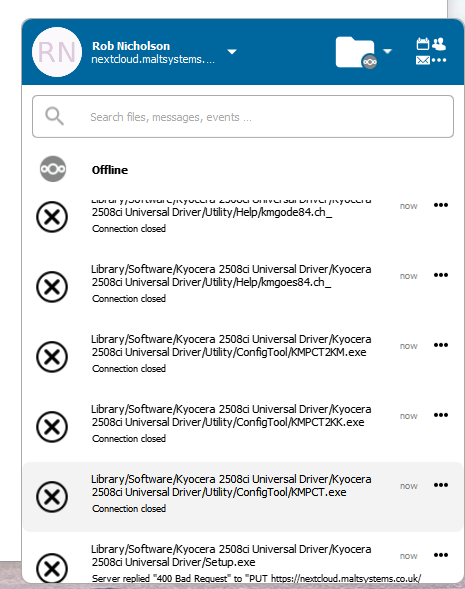

The client had real trouble uploading them, with thousands of errors flying up the activity window: network error 2, 400 bad request. Now, this VM is running on an external USB 3 hard disk, so it doesn’t have the fastest disk I/O in the world. When I did this, I also happened to be running an rsync that was writing files (downloaded from the internet) to the same VM, and atop did show the VM was pretty busy I/O-wise.

However, the reason I’m posting is that about 30 minutes after that rsync had finished, the client was still throwing out a lot of errors. It would not finish the sync of those files until I rebooted the server.

I’m just trying to understand what was going on here in case it happens in production. I’m going to try repeating it, as I did snapshot the VMs beforehand. I can understand some resource running out, but why didn’t NC recover?
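
For the repeat test, a script along these lines could generate a comparable workload instead of relying on the printer driver zip. This is only a rough sketch: the function names, the file naming, and the even split of sizes are my own assumptions, and the real driver files were certainly not all the same size.

```python
import os
from pathlib import Path


def make_small_files(root, count=1983, total_bytes=170 * 1024 * 1024):
    """Create `count` files under `root` whose sizes add up to `total_bytes`.

    Hypothetical helper: sizes are split evenly, which only approximates
    the mix of file sizes in the original driver package.
    """
    root = Path(root)
    root.mkdir(parents=True, exist_ok=True)
    base, extra = divmod(total_bytes, count)
    for i in range(count):
        # Spread the remainder over the first `extra` files.
        size = base + (1 if i < extra else 0)
        # Random bytes so the content cannot be compressed or deduplicated.
        (root / f"file_{i:04d}.bin").write_bytes(os.urandom(size))
    return root


def summarize(root):
    """Return (file_count, total_size_in_bytes) for everything under `root`."""
    sizes = [p.stat().st_size for p in Path(root).rglob("*") if p.is_file()]
    return len(sizes), sum(sizes)
```

Calling `make_small_files()` with a folder inside the client's sync directory, then `summarize()` on the same folder, should confirm the file count and total size before watching how the client copes.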