Support intro

Sorry to hear you’re facing problems.

help.nextcloud.com is for home/non-enterprise users. If you’re running a business, paid support can be accessed via portal.nextcloud.com where we can ensure your business keeps running smoothly.

In order to help you as quickly as possible, before clicking Create Topic please provide as much of the below as you can. Feel free to use a pastebin service for logs, otherwise either indent short log examples with four spaces:

    example

Or for longer, use three backticks above and below the code snippet:

longer

example

here

Some or all of the below information will be requested if it isn’t supplied; for fastest response please provide as much as you can.

Nextcloud version (eg, 20.0.5): 28.0.0

Operating system and version (eg, Ubuntu 20.04): Debian 12

Apache or nginx version (eg, Apache 2.4.25): apache

PHP version (eg, 7.4): 8.2.11

The issue you are facing:

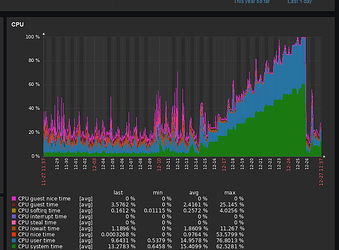

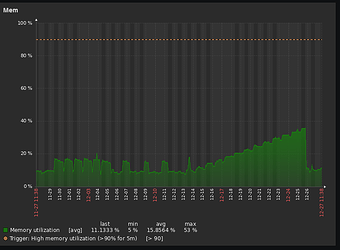

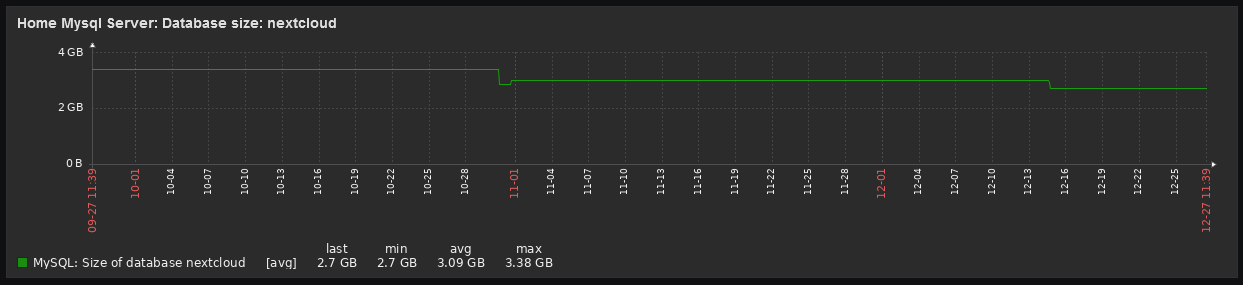

I have an SMB storage attached to Nextcloud. Since I attached it, the cron jobs take a very long time. The longest run I waited on had been going for more than 300 hours before I had to kill it. While it runs, the job causes high CPU usage on the Nextcloud server. If I mount the same storage into the LXC container with a mount point instead, there is no problem and everything runs perfectly.

Is this the first time you’ve seen this error? (Y/N): N

Steps to replicate it:

- Install Nextcloud

- Mount an SMB share (size: 12 TB, rights: 775, auth: password, filled with a lot of small files) through the web UI admin interface, using the globally stored password

- Assign the mounted storage to a group with 5-10 users

- Wait for the cron jobs

The output of your Nextcloud log in Admin > Logging:

Sorry, my logging is messed up: the page just crashes on load.

I would appreciate it if anyone could point me in a direction to fix this problem.

Best regards,

Mate

EDIT 1

I’ve found the occ command to export the redacted config:

    "system": {
        "passwordsalt": "***REMOVED SENSITIVE VALUE***",
        "secret": "***REMOVED SENSITIVE VALUE***",
        "trusted_domains": [
            "cloud.zsolyahome.com",
            "172.16.1.89"
        ],
        "trusted_proxies": "***REMOVED SENSITIVE VALUE***",
        "default_phone_region": "HU",
        "datadirectory": "***REMOVED SENSITIVE VALUE***",
        "dbtype": "mysql",
        "version": "28.0.0.11",
        "overwrite.cli.url": "https:\/\/cloud.zsolyahome.com",
        "dbname": "***REMOVED SENSITIVE VALUE***",
        "dbhost": "***REMOVED SENSITIVE VALUE***",
        "dbport": "",
        "dbtableprefix": "oc_",
        "mysql.utf8mb4": true,
        "dbuser": "***REMOVED SENSITIVE VALUE***",
        "dbpassword": "***REMOVED SENSITIVE VALUE***",
        "installed": true,
        "filelocking.enabled": true,
        "memcache.locking": "\\OC\\Memcache\\Redis",
        "memcache.local": "\\OC\\Memcache\\Redis",
        "memcache.distributed": "\\OC\\Memcache\\Redis",
        "redis": {
            "host": "***REMOVED SENSITIVE VALUE***",
            "port": 6379,
            "timeout": 0,
            "password": "***REMOVED SENSITIVE VALUE***"
        },
        "instanceid": "***REMOVED SENSITIVE VALUE***",
        "maintenance": false,
        "log_type": "file",
        "logfile": "\/var\/log\/nextcloud.log",
        "loglevel": 1,
        "mail_domain": "***REMOVED SENSITIVE VALUE***",
        "mail_from_address": "***REMOVED SENSITIVE VALUE***",
        "mail_smtpmode": "smtp",
        "mail_sendmailmode": "smtp",
        "mail_smtpsecure": "ssl",
        "mail_smtpauthtype": "LOGIN",
        "mail_smtpauth": 1,
        "mail_smtpport": "465",
        "mail_smtphost": "***REMOVED SENSITIVE VALUE***",
        "mail_smtpname": "***REMOVED SENSITIVE VALUE***",
        "mail_smtppassword": "***REMOVED SENSITIVE VALUE***",
        "theme": "",
        "app_install_overwrite": [
            "activitylog",
            "files_bpm",
            "spreed",
            "files_texteditor",
            "talk_simple_poll",
            "files_markdown",
            "breezedark",
            "socialsharing_facebook",
            "files_fulltextsearch_tesseract",
            "files_fulltextsearch",
            "fulltextsearch",
            "fulltextsearch_elasticsearch",
            "event_update_notification",
            "audioplayer",
            "talk_matterbridge",
            "workflow_media_converter"
        ],
        "enable_previews": true,
        "enabledPreviewProviders": [
            "OC\\Preview\\Imaginary"
        ],
        "preview_imaginary_url": "http:\/\/172.16.1.94:5648",
        "updater.release.channel": "stable",
        "data-fingerprint": "f73dc831633a092bcdaa54861dd227fd",
        "remember_login_cookie_lifetime": 172800,
        "session_lifetime": 7200,
        "session_relaxed_expiry": false,
        "session_keepalive": true,
        "auto_logout": true,
        "token_auth_enforced": false,
        "token_auth_activity_update": 10,
        "onlyoffice": {
            "jwt_header": "AuthorizationJwt"
        }
    }