Thank you. I had already disabled Elasticsearch, but you have pointed me down a path that might give me an answer.

The server may not be the most powerful, and that might be the issue.

- Pool: 5 x MIRROR | 2 wide | 16.37 TiB
- Memory: 128 GiB
- CPU: Intel(R) Atom™ CPU C3758 @ 2.20GHz, 8 cores
- OS: TrueNAS-SCALE-22.12.3.3

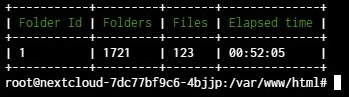

I started the occ scan from the shell (it's running in a TrueNAS SCALE pod), and by running `top` from TrueNAS SCALE's shell I can see that the Postgres server (pod) maxes out when the scanner hits certain files. It scans a few thousand files in some folders, then stops and later continues.

So this seems to be an issue with Postgres in the pod. The Postgres log is full of entries like these:

```
2023-11-04T02:53:19.369295109Z 2023-11-04 02:53:19.369 UTC [109616] ERROR: duplicate key value violates unique constraint "oc_filecache_extended_pkey"
2023-11-04T02:53:19.369387859Z 2023-11-04 02:53:19.369 UTC [109616] DETAIL: Key (fileid)=(4664648) already exists.
2023-11-04T02:53:19.369412642Z 2023-11-04 02:53:19.369 UTC [109616] STATEMENT: INSERT INTO "oc_filecache_extended" ("fileid", "upload_time") VALUES($1, $2)
2023-11-04T02:53:19.480384048Z 2023-11-04 02:53:19.480 UTC [175394] ERROR: duplicate key value violates unique constraint "gf_versions_uniq_index"
2023-11-04T02:53:19.480490312Z 2023-11-04 02:53:19.480 UTC [175394] DETAIL: Key (file_id, "timestamp")=(4666021, 1544559530) already exists.
2023-11-04T02:53:19.480515150Z 2023-11-04 02:53:19.480 UTC [175394] STATEMENT: UPDATE "oc_group_folders_versions" SET "timestamp" = $1 WHERE "id" = $2
2023-11-04T02:53:19.509575490Z 2023-11-04 02:53:19.509 UTC [157972] ERROR: duplicate key value violates unique constraint "gf_versions_uniq_index"
2023-11-04T02:53:19.509656755Z 2023-11-04 02:53:19.509 UTC [157972] DETAIL: Key (file_id, "timestamp")=(4667617, 1550841542) already exists.
2023-11-04T02:53:19.509681731Z 2023-11-04 02:53:19.509 UTC [157972] STATEMENT: UPDATE "oc_group_folders_versions" SET "timestamp" = $1 WHERE "id" = $2
2023-11-04T02:53:31.079134628Z 2023-11-04 02:53:31.078 UTC [150162] ERROR: duplicate key value violates unique constraint "gf_versions_uniq_index"
2023-11-04T02:53:31.079354823Z 2023-11-04 02:53:31.078 UTC [150162] DETAIL: Key (file_id, "timestamp")=(4667699, 1550841462) already exists.
2023-11-04T02:53:31.079381252Z 2023-11-04 02:53:31.078 UTC [150162] STATEMENT: UPDATE "oc_group_folders_versions" SET "timestamp" = $1 WHERE "id" = $2
2023-11-04T02:53:31.214466832Z 2023-11-04 02:53:31.214 UTC [117638] ERROR: duplicate key value violates unique constraint "gf_versions_uniq_index"
2023-11-04T02:53:31.214527424Z 2023-11-04 02:53:31.214 UTC [117638] DETAIL: Key (file_id, "timestamp")=(4664566, 1699066387) already exists.
2023-11-04T02:53:31.214551760Z 2023-11-04 02:53:31.214 UTC [117638] STATEMENT: INSERT INTO "oc_group_folders_versions" ("file_id", "timestamp", "size", "mimetype", "metadata") VALUES($1, $2, $3, $4, $5)
2023-11-04T02:53:36.997713232Z 2023-11-04 02:53:36.997 UTC [150365] ERROR: duplicate key value violates unique constraint "gf_versions_uniq_index"
2023-11-04T02:53:36.997912223Z 2023-11-04 02:53:36.997 UTC [150365] DETAIL: Key (file_id, "timestamp")=(4667704, 1550841509) already exists.
2023-11-04T02:53:36.997937940Z 2023-11-04 02:53:36.997 UTC [150365] STATEMENT: UPDATE "oc_group_folders_versions" SET "timestamp" = $1 WHERE "id" = $2
2023-11-04T02:53:37.019759857Z 2023-11-04 02:53:37.019 UTC [111196] ERROR: duplicate key value violates unique constraint "gf_versions_uniq_index"
2023-11-04T02:53:37.019833049Z 2023-11-04 02:53:37.019 UTC [111196] DETAIL: Key (file_id, "timestamp")=(4664531, 1532905459) already exists.
2023-11-04T02:53:37.019857494Z 2023-11-04 02:53:37.019 UTC [111196] STATEMENT: UPDATE "oc_group_folders_versions" SET "timestamp" = $1 WHERE "id" = $2
2023-11-04T02:53:37.065251719Z 2023-11-04 02:53:37.065 UTC [112413] ERROR: duplicate key value violates unique constraint "gf_versions_uniq_index"
2023-11-04T02:53:37.065333512Z 2023-11-04 02:53:37.065 UTC [112413] DETAIL: Key (file_id, "timestamp")=(4664579, 1548877199) already exists.
2023-11-04T02:53:37.065358007Z 2023-11-04 02:53:37.065 UTC [112413] STATEMENT: UPDATE "oc_group_folders_versions" SET "timestamp" = $1 WHERE "id" = $2
2023-11-04T02:53:37.082539951Z 2023-11-04 02:53:37.082 UTC [111196] ERROR: duplicate key value violates unique constraint "oc_filecache_extended_pkey"
2023-11-04T02:53:37.082618152Z 2023-11-04 02:53:37.082 UTC [111196] DETAIL: Key (fileid)=(4664596) already exists.
2023-11-04T02:53:37.082643534Z 2023-11-04 02:53:37.082 UTC [111196] STATEMENT: INSERT INTO "oc_filecache_extended" ("fileid", "upload_time") VALUES($1, $2)
2023-11-04T02:53:37.116418369Z 2023-11-04 02:53:37.116 UTC [112413] ERROR: duplicate key value violates unique constraint "oc_filecache_extended_pkey"
2023-11-04T02:53:37.116502861Z 2023-11-04 02:53:37.116 UTC [112413] DETAIL: Key (fileid)=(4664577) already exists.
2023-11-04T02:53:37.116527689Z 2023-11-04 02:53:37.116 UTC [112413] STATEMENT: INSERT INTO "oc_filecache_extended" ("fileid", "upload_time") VALUES($1, $2)
2023-11-04T02:53:37.121831558Z 2023-11-04 02:53:37.121 UTC [158421] ERROR: duplicate key value violates unique constraint "gf_versions_uniq_index"
2023-11-04T02:53:37.121901125Z 2023-11-04 02:53:37.121 UTC [158421] DETAIL: Key (file_id, "timestamp")=(4667721, 1699066387) already exists.
2023-11-04T02:53:37.121925670Z 2023-11-04 02:53:37.121 UTC [158421] STATEMENT: INSERT INTO "oc_group_folders_versions" ("file_id", "timestamp", "size", "mimetype", "metadata") VALUES($1, $2, $3, $4, $5)
```
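To get a quick overview of which constraints and statements the duplicate-key errors come from, a small Python sketch like the one below could tally them. It assumes the Postgres pod log has been saved to a plain-text file and that every line keeps the `[PID] ERROR` / `[PID] STATEMENT` layout shown in the excerpt; the script name `summarize_pg_log.py` is hypothetical. It pairs each STATEMENT line with the preceding duplicate-key ERROR from the same backend PID.

```python
import re
import sys
from collections import Counter
from typing import Dict, List, Tuple

# Duplicate-key error line, e.g.
# ... UTC [109616] ERROR: duplicate key value violates unique constraint "oc_filecache_extended_pkey"
ERROR_RE = re.compile(
    r'\[(\d+)\] ERROR: duplicate key value violates unique constraint "([^"]+)"'
)
# Statement line logged after the error by the same backend PID, e.g.
# ... UTC [109616] STATEMENT: INSERT INTO "oc_filecache_extended" (...)
STATEMENT_RE = re.compile(
    r'\[(\d+)\] STATEMENT: (INSERT INTO|UPDATE|DELETE FROM) "([^"]+)"'
)


def summarize(log_text: str) -> Tuple[Counter, Counter]:
    """Count duplicate-key errors per constraint and per (verb, table, constraint)."""
    per_constraint: Counter = Counter()
    per_statement: Counter = Counter()
    pending: Dict[str, str] = {}  # backend PID -> constraint of its last duplicate-key error
    for line in log_text.splitlines():
        err = ERROR_RE.search(line)
        if err:
            pid, constraint = err.groups()
            per_constraint[constraint] += 1
            pending[pid] = constraint
            continue
        stmt = STATEMENT_RE.search(line)
        if stmt and stmt.group(1) in pending:
            pid, verb, table = stmt.groups()
            per_statement[(verb, table, pending.pop(pid))] += 1
    return per_constraint, per_statement


def main(argv: List[str]) -> None:
    if len(argv) != 2:
        print("usage: summarize_pg_log.py <postgres-log-file>")
        return
    with open(argv[1], encoding="utf-8", errors="replace") as fh:
        per_constraint, per_statement = summarize(fh.read())
    for name, count in per_constraint.most_common():
        print(f"{count:6d}  {name}")
    for (verb, table, constraint), count in per_statement.most_common():
        print(f"{count:6d}  {verb} {table} -> {constraint}")


if __name__ == "__main__":
    main(sys.argv)
```

Counting by PID rather than by "next line" keeps the pairing correct even if log lines from different backends interleave.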

It's strange, because the first 9 TB I added went through really fast, so I am wondering whether certain file names, file types, or folder names (just throwing thoughts out there) might be causing this.
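One rough way to check the file-name idea is a stdlib-only Python sketch that walks the data directory, flags names tripping a few heuristics (invalid UTF-8, control characters, leading or trailing whitespace, non-NFC Unicode normalization, names over 250 bytes), and lists the folders with the most direct entries so they can be compared with the folders where the scan stalls. These heuristics and the 250-byte threshold are assumptions, not known causes of slow scans, and the script name `check_names.py` is hypothetical; pass it whatever directory holds the Nextcloud data.

```python
import os
import sys
import unicodedata
from typing import Iterator, List, Tuple


def name_problems(name: str) -> List[str]:
    """Return hypothetical red flags for a single file or folder name."""
    problems = []
    try:
        name.encode("utf-8")
    except UnicodeEncodeError:
        # os.walk decodes undecodable bytes as lone surrogates (surrogateescape)
        problems.append("not valid UTF-8")
    else:
        if unicodedata.normalize("NFC", name) != name:
            problems.append("not NFC-normalized")
    if len(os.fsencode(name)) > 250:  # assumed threshold, just under the usual 255-byte limit
        problems.append("name longer than 250 bytes")
    if name != name.strip():
        problems.append("leading or trailing whitespace")
    if any(unicodedata.category(ch) == "Cc" for ch in name):
        problems.append("control character")
    return problems


def scan(root: str) -> Iterator[Tuple[str, List[str]]]:
    """Yield (path, problems) for every entry under root that trips a heuristic."""
    for dirpath, dirnames, filenames in os.walk(root):
        for name in dirnames + filenames:
            problems = name_problems(name)
            if problems:
                yield os.path.join(dirpath, name), problems


def busiest_dirs(root: str, top: int = 10) -> List[Tuple[int, str]]:
    """Return the `top` directories with the most direct entries (files + subfolders)."""
    counts = [(len(d) + len(f), p) for p, d, f in os.walk(root)]
    return sorted(counts, reverse=True)[:top]


def main(argv: List[str]) -> None:
    if len(argv) != 2:
        print("usage: check_names.py <data-directory>")
        return
    for path, problems in scan(argv[1]):
        # repr() keeps control characters and odd bytes visible in the output
        print(f"{', '.join(problems)}: {path!r}")
    for count, path in busiest_dirs(argv[1]):
        print(f"{count:8d} entries  {path}")


if __name__ == "__main__":
    main(sys.argv)
```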

I will keep looking, and if I find a solution I will post it here. I can see that other people have this problem too (slow occ file scans).

Regards,

Tomas