Is it normal for the RAM on a Nextcloud server to appear at the same spot through a tool like htop? I have 128GB on my server but the graph reports 2.25GB and pretty much stays right there all the time. It doesn’t go any higher and rarely drops any lower. See attached image.

Services come with default settings. It helps a lot to give the database more resources so it can do proper caching, give php memory, adjust the number of available processes, use additional caching (redis etc.) …

But would this seem like some setting is acting like a “governor” limiting the usage to a max of 2GB?

Not sure how you installed it. If it runs inside a virtual environment, that environment may cap the RAM available to it, and depending on the environment you can probably change that.

But it may also just happen to settle close to 2 GB. You can check how much memory each process uses.
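A minimal sketch of that per-process check, assuming a Linux system with the procps version of ps. The function name sum_rss_by_command is made up for illustration. It adds up resident memory (RSS) per command name, which helps because PHP-FPM runs as many separate worker processes.

```shell
# Sum resident memory per command name and print it in MiB, largest first.
# Input: lines of "<rss in KiB> <command>" on stdin.
# Command names containing spaces are grouped by their first word only.
sum_rss_by_command() {
    awk '{ rss[$2] += $1 }
         END { for (c in rss) printf "%d %s\n", rss[c] / 1024, c }' | sort -rn
}

# Sample input (made-up numbers) to show the output format.
printf '%s\n' '2097152 mysqld' '131072 php-fpm7.4' '196608 php-fpm7.4' \
    | sum_rss_by_command
# prints:
# 2048 mysqld
# 320 php-fpm7.4
```

On a live server the input can come from `ps -eo rss=,comm= | sum_rss_by_command | head`.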

My environment seems to have the entire lot applied. I guess what I am getting at is that I’m having serious issues with this Nextcloud web service locking up at times and it seems like the only relief valve is turning off Talk. We are only using Talk for messaging and I have about 20 active users at this time. I turn Talk back on and the website will run fine for a while but then crawl. I cannot detect any other issues so I am theorizing that maybe the server wants more headroom but it cannot go past 2GB for some reason.

What did you expect?

I have 8 GB and that’s still too much

Some apps are really RAM hungry, such as ClamAV, but a normal Nextcloud server does not need that much RAM.

But you can configure PHP, your database and Redis to give them more RAM.

You can find out the size of your databases on disk with

du -h "/var/lib/mysql"

(presuming that is the location of your database)

You will see that it is not that big at all.

With 126 GB of physical RAM available, you have plenty of resources to allocate to MySQL/MariaDB to optimize its performance. Here are some recommendations for optimizing the configuration:

- Increase the buffer pool size: The buffer pool is the memory area where InnoDB caches data and indexes. You should allocate a significant amount of memory to the buffer pool to improve performance.

Allocating a large buffer pool size is generally recommended for most MySQL/MariaDB installations, regardless of the size of the database. The idea is to have as much data and indexes cached in memory as possible, to reduce the need for disk I/O operations.

In your case, with a 1GB database and 126GB of physical RAM, you may not need to allocate the full 80% that is often recommended for dedicated database servers. However, you can still allocate a significant amount of memory to the buffer pool to improve performance. A good starting point would be to allocate 10-20% of your available RAM to the buffer pool, and then monitor performance to see if further adjustments are needed.

innodb_buffer_pool_size=12G

- Increase the thread cache: thread_cache_size sets how many threads the server keeps cached for reuse by new connections, which avoids creating a fresh thread for every connection. A good starting point is the number of CPU cores available. Check the current value first, since some versions already default to more than that.

thread_cache_size=8

- Enable the slow query log: The slow query log helps you identify queries that take a long time to execute. This can help you optimize your queries and improve overall performance. You can set the long_query_time parameter to define what queries should be considered slow. A good starting point is to set it to 1 second.

slow_query_log=ON

long_query_time=1

- Increase the max_connections value: The max_connections variable defines the maximum number of concurrent connections to MySQL. With a large amount of available RAM, you can increase this value to accommodate more connections.

max_connections=1000
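The 10-20% rule of thumb above can be turned into a number with a little shell arithmetic. This is only a sketch: the function name buffer_pool_gib is made up for illustration, and the input is 126 GiB expressed in KiB, matching the figure quoted above.

```shell
# Suggest an innodb_buffer_pool_size as a percentage of total RAM.
# $1: total RAM in KiB (the MemTotal value from /proc/meminfo)
# $2: percentage of RAM to give to the buffer pool
# Prints whole GiB, rounded down.
buffer_pool_gib() {
    echo $(( $1 * $2 / 100 / 1024 / 1024 ))
}

# 126 GiB = 132120576 KiB
buffer_pool_gib 132120576 10   # prints 12
buffer_pool_gib 132120576 20   # prints 25
```

On Linux the first argument can be read with `awk '/^MemTotal:/ { print $2 }' /proc/meminfo`. The lower result matches the 12G used above.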

Then you can move your /tmp dir into RAM. If you don’t know how, you can ask me.

You can set up PHP-FPM to use more RAM. On Ubuntu that is done in

/etc/php/$VERSION/fpm/pool.d/www.conf

by tweaking the settings beginning with pm.
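A common way to pick a value for pm.max_children is to divide the RAM you are willing to give PHP-FPM by the average size of one worker. The sketch below shows that arithmetic. The function names and all numbers are illustrative assumptions, so measure your own workers before using the result.

```shell
# Average resident size in MiB from a list of RSS values (KiB, one per line).
avg_rss_mib() {
    awk '{ sum += $1; n++ } END { if (n) printf "%d\n", sum / n / 1024 }'
}

# Upper bound for pm.max_children: RAM budget divided by average worker size.
# $1: RAM budget for PHP-FPM in MiB, $2: average worker size in MiB.
fpm_max_children() {
    echo $(( $1 / $2 ))
}

# Illustrative numbers only: two workers of 128 MiB and 64 MiB, 16 GiB budget.
avg=$(printf '%s\n' 131072 65536 | avg_rss_mib)   # 96
fpm_max_children 16384 "$avg"                     # prints 170
```

On a running server the RSS values could come from `ps -o rss= -C php-fpm7.4`, assuming the workers are named php-fpm7.4 as is usual for PHP 7.4 on Ubuntu. The result is a ceiling for pm.max_children. The other pm. settings (pm.start_servers, pm.min_spare_servers, pm.max_spare_servers) should stay below it.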

The memory settings for Redis can be tweaked in /etc/redis/redis.conf

If your RAM use still does not grow after that, there may be other reasons.

If you’d like more informed suggestions you’ll need to provide more background on your installation. Which install method did you use?

Are you running all the layers of the stack on the same box? Dockers? VMs?

Are you using Apache? FPM? What version of PHP? What OS? What is your NC config.php? Nextcloud log entries from around that time?

What parameters did you tune during setup (there are several sections in the admin manual on the topic)? Etc.

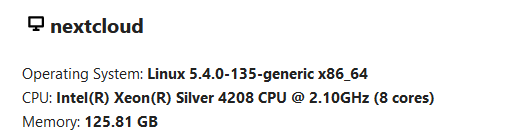


I installed on Ubuntu 20.04 directly from cli, no docker. I’m running ESXi with 3 VMs on the same box. One VM is OpenLDAP/iRedMail, one is Nextcloud, one is Collabora. The Nextcloud is Apache PHP-FPM 7.4. My install is rather vanilla other than bumping the PHP Memory limit to 2GB and setting up Redis. I recently realized that for running fpm I needed to be running mpm_event instead of prefork. I tried that but have the same issue. I just saw where perhaps I shouldn’t run the local memcache with Redis and set it up for APCu. No issue since then but that just happened earlier today.

@ernolf Can I change the mysql settings in a file or do I need to make them at a SQL prompt?

And, yes, if you don’t mind pointing me to moving the tmp directory that would be nice.

I don’t know your installation, but with MariaDB on Ubuntu/Debian it is done in

/etc/mysql/mariadb.conf.d/50-server.cnf
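As a sketch of what the file-based approach can look like: the settings from this thread belong in the [mysqld] section. Instead of editing 50-server.cnf directly, they can also go into a separate file in the same directory. That assumes the stock Debian/Ubuntu layout, where every .cnf file in that directory is read. The function name write_db_tuning and the file name 99-nextcloud-tuning.cnf are hypothetical.

```shell
# Write the values discussed in this thread to a config file.
# $1: target path. The file name used below is hypothetical.
write_db_tuning() {
    cat > "$1" <<'EOF'
[mysqld]
innodb_buffer_pool_size = 12G
thread_cache_size       = 8
slow_query_log          = ON
long_query_time         = 1
max_connections         = 1000
EOF
}

# Write to a scratch location first and review it before copying it into place.
write_db_tuning "${TMPDIR:-/tmp}/99-nextcloud-tuning.cnf"
```

After copying the file to /etc/mysql/mariadb.conf.d/, restart the database (for example `sudo systemctl restart mariadb`) and confirm the value with `SHOW VARIABLES LIKE 'innodb_buffer_pool_size';` at a SQL prompt.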

First check whether tmpfs is already mounted on /tmp:

mount | grep "/tmp "

If it does not print a line like

tmpfs on /tmp type tmpfs (rw,nosuid,nodev,relatime)

then continue reading.

The thing is, when your /tmp actually is on the normal filesystem, you must first find a way to remove all entries in that directory before you mount your tmpfs (which is in fact your RAM) on the mountpoint /tmp.

We do that with these steps:

Add this line to your /etc/fstab:

tmpfs /tmp tmpfs noauto,mode=1777,nosuid,nodev 0 0

Now create this file:

/etc/systemd/system/tmpfs-mount.service

with this entry:

[Unit]

Description=Remove contents of /tmp and mount tmpfs

[Service]

Type=oneshot

ExecStart=/bin/sh -c '/bin/rm -rf /tmp/* && /bin/mount /tmp'

RemainAfterExit=yes

[Install]

WantedBy=multi-user.target

Now you must execute these commands in your terminal:

sudo systemctl daemon-reload

sudo systemctl enable tmpfs-mount.service

Reboot your machine.

After the reboot, check again whether it is now mounted as I described above (mount | grep "/tmp ")

If tmpfs is mounted as expected, edit /etc/fstab again and change the line

tmpfs /tmp tmpfs noauto,mode=1777,nosuid,nodev 0 0

into

tmpfs /tmp tmpfs mode=1777,nosuid,nodev 0 0

(removed noauto)

and enter these commands in the terminal in order to remove the systemd service we just created for the first mount:

sudo systemctl stop tmpfs-mount.service

sudo systemctl disable tmpfs-mount.service

sudo rm /etc/systemd/system/tmpfs-mount.service

After these steps, your /tmp directory will practically be moved into your RAM after every startup, which will give you a performance advantage.
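One optional refinement, sketched here as an assumption and not part of the steps above: on Linux a tmpfs without an explicit size may grow to half of the physical RAM, so on a large machine it can make sense to cap it with the size= mount option. The helper name tmpfs_fstab_line and the 8G value are illustrative only.

```shell
# Print an fstab entry for /tmp on tmpfs with an explicit size cap.
# $1: the cap in a form tmpfs understands, for example 8G.
tmpfs_fstab_line() {
    printf 'tmpfs /tmp tmpfs mode=1777,nosuid,nodev,size=%s 0 0\n' "$1"
}

tmpfs_fstab_line 8G
# prints: tmpfs /tmp tmpfs mode=1777,nosuid,nodev,size=8G 0 0
```

`df -h /tmp` shows the limit that is actually in effect once the entry is mounted.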

Thanks @ernolf . I’m testing the changes to mysql first and then I will attempt the tmp dir move later. This is very easy to follow so thanks for detailing it for me!

Great guide.

@ernolf So far, so good without the tmp file move. I am seeing an increase in htop from the capped-out 2.25GB to around 2.83GB now. Will mysql just run as high as needed and not necessarily immediately consume that 12GB of space? I’m used to running MSSQL more, and it takes up about anything you give it.

Hello @ernolf, can you please help me configure Nextcloud for my needs? I have changed innodb_buffer_pool_size, thread_cache_size and max_connections as you described to mcoulter, but the load was 7 after 1 day. My system configuration is

Intel(R) Xeon(R) CPU E5-2637 v3 @ 3.50GHz (16 threads)

Memory: 15.54 GB

PHP

Version: 8.1.2

Memory limit: 8 GB (set by me)

I use Nextcloud as a picture cloud (with Memories) and for a few documents.

I have 10 users.

Should thread_cache_size match the number of cores or the number of threads?

I read that I can use 80% of memory for innodb_buffer_pool_size, so that can be 12 GB?

How many max_connections can I use?

Thank you in advance!

Hi @danielsutac,

Your question is not about Nextcloud but about MariaDB/MySQL configuration.

The topic of database optimization is a very complex one. As you have already realized:

… it depends on the hardware used and the available resources on the one hand, and on the intended use case on the other. Then there are also small differences between MySQL and MariaDB, but that’s not all: it also depends on the EXACT version!

It is also an extremely dynamic task. Some requirements are only needed very rarely, while others are needed every second.

Since it is currently impossible for me to weigh up all of these aspects in your individual case and to classify and evaluate them correctly, you have no choice but to study the online manuals of the exact version of your database in detail.

However, I would like to draw your attention to this great tool which I use myself:

It can observe and evaluate your MySQL/MariaDB database over a longer period of time in order to obtain valuable hints on optimization options.

Best of luck,

ernolf

Thank you for your response.

I have another problem.

After updating to Nextcloud 30.0.1 I get the warning message

Some headers are not set correctly on your instance - The Strict-Transport-Security HTTP header is not set (should be at least 15552000 seconds). For enhanced security, it is recommended to enable HSTS.

I had the same problem with my last update, but it was gone with

Can you help?

Not on a completely unrelated thread. Maybe start a new one.