Hi everyone and a happy new year!

I have been playing with my initial Docker setup for a few days over the holidays and am out of ideas. I am quite new to Docker and Nextcloud, so please bear with me.

I am trying to set up Nextcloud AIO for my local network and VPN only. Why? Because 1) I want to try everything out before going live and 2) I want a family-only instance.

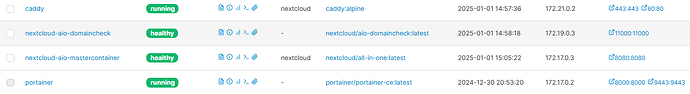

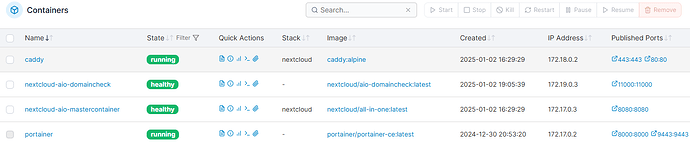

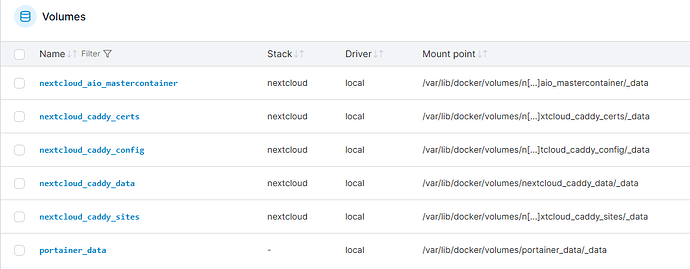

I set up Docker on Debian 12, plus Portainer to have everything nicely presented.

I followed the local-instance description and went for “The recommended way”.

I used the docker-compose example and enabled Caddy as the reverse proxy.

From the reverse proxy documentation, I first tried option (i), host networking on both containers, but this failed because Portainer's docker-proxy is listening on port 8000, which prevents the aio-mastercontainer from booting.

So I went for the second option (ii): a Docker-internal network between Nextcloud and Caddy.

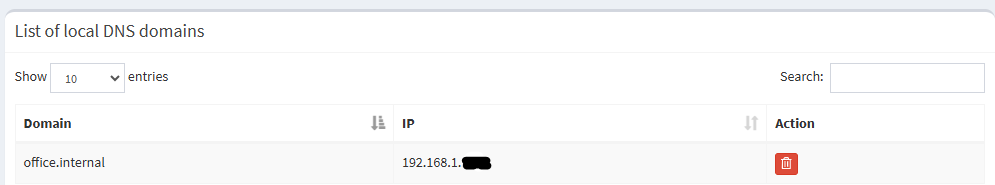

My node has the hostname office, and Pi-hole DNS overrides office.internal to point to its local IPv4 address.

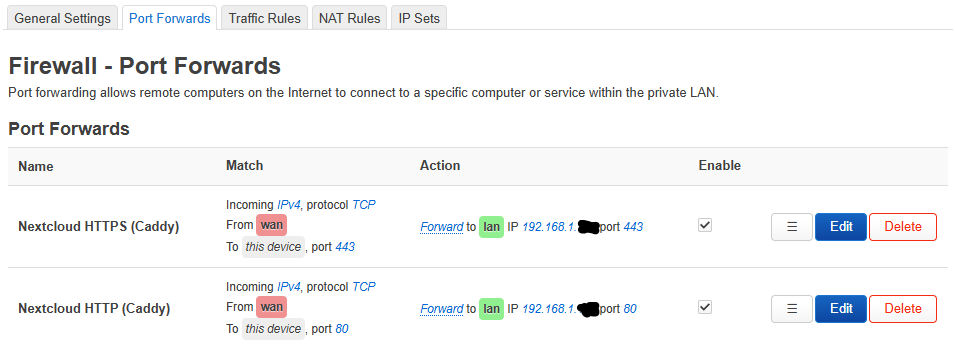

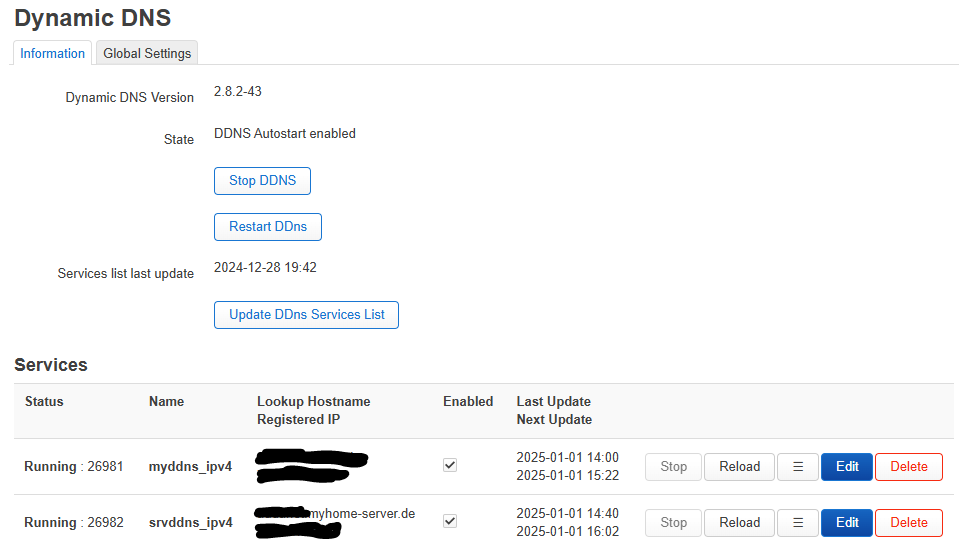

OpenWrt forwards TCP ports 80 and 443 to this IP, and I have DynDNS configured and running.

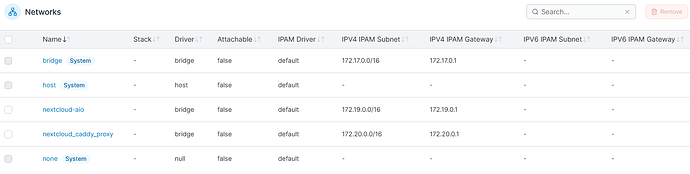

I have added the Docker network nextcloud_caddy_proxy to connect the two containers internally.
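
To confirm that both containers really share a network, it may help to list the Docker networks and their members from the host. This is only a sketch: it assumes the `docker` CLI is available on the host, and the helper name `network_members` is made up for illustration. One thing worth checking is the network name itself, because Docker Compose normally prefixes networks defined in a compose file with the project name (for example `<project>_nextcloud_caddy_proxy`) unless a `name:` is set, so the network Caddy joins and the one given in `APACHE_ADDITIONAL_NETWORK` are not necessarily the same.

```shell
# network_members NETWORK: print the name and IPv4 address of every container
# attached to the given Docker network (hypothetical helper, run on the host).
network_members() {
    docker network inspect "$1" \
        --format '{{range .Containers}}{{println .Name .IPv4Address}}{{end}}'
}

# Usage (not run here):
#   docker network ls                       # look for a project-prefixed network name
#   network_members nextcloud_caddy_proxy   # caddy and the AIO container should both be listed
```

If only one of the two containers shows up in the output, they cannot reach each other over that network.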

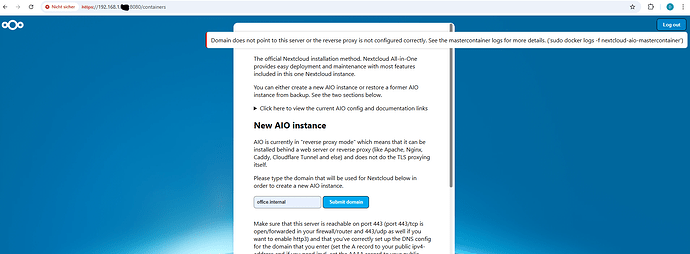

I can’t get Caddy and nextcloud-aio-domaincheck to talk to each other.

    /srv # nc -z localhost 11000; echo $?
    1
    /srv # nc -z 172.19.0.3 11000; echo $?
    ^Cpunt!
    /srv # nc -z nextcloud-aio-domaincheck 11000; echo $?
    nc: bad address 'nextcloud-aio-domaincheck'
    1
    /srv #
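
A note on reading these results: inside the Caddy container, `localhost` refers to the Caddy container itself, so a port that is only published on the host's 127.0.0.1 would not be reachable that way. The second attempt had to be interrupted because `nc` was run without a timeout. The sketch below wraps the same check with a two-second timeout; it assumes the container's `nc` supports `-w` (BusyBox `nc` does), and the helper name `probe` is made up for illustration.

```shell
# probe HOST PORT: report whether a TCP connection succeeds within 2 seconds.
probe() {
    # -z: only test the connection, send no data; -w 2: give up after 2 seconds
    if nc -z -w 2 "$1" "$2" 2>/dev/null; then
        echo "$1:$2 reachable"
    else
        echo "$1:$2 NOT reachable"
        return 1
    fi
}

# Usage inside the Caddy container (not run here):
#   probe localhost 11000
#   probe 172.19.0.3 11000
#   probe nextcloud-aio-apache 11000
```

With the timeout, an unreachable address fails after two seconds instead of hanging.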

office:~$ sudo docker logs nextcloud-aio-mastercontainer -f

Trying to fix docker.sock permissions internally...

Creating docker group internally with id 996

Initial startup of Nextcloud All-in-One complete!

You should be able to open the Nextcloud AIO Interface now on port 8080 of this server!

E.g. https://internal.ip.of.this.server:8080

⚠️ Important: do always use an ip-address if you access this port and not a domain as HSTS might block access to it later!

If your server has port 80 and 8443 open and you point a domain to your server, you can get a valid certificate automatically by opening the Nextcloud AIO Interface via:

https://your-domain-that-points-to-this-server.tld:8443

[Wed Jan 01 14:05:35.177422 2025] [mpm_event:notice] [pid 162:tid 162] AH00489: Apache/2.4.62 (Unix) OpenSSL/3.3.2 configured -- resuming normal operations

[Wed Jan 01 14:05:35.177897 2025] [core:notice] [pid 162:tid 162] AH00094: Command line: 'httpd -D FOREGROUND'

{"level":"info","ts":1735740335.1798623,"msg":"using config from file","file":"/Caddyfile"}

{"level":"info","ts":1735740335.1812928,"msg":"adapted config to JSON","adapter":"caddyfile"}

[01-Jan-2025 14:05:35] NOTICE: fpm is running, pid 167

[01-Jan-2025 14:05:35] NOTICE: ready to handle connections

NOTICE: PHP message: It seems like the ip-address of office.internal is set to an internal or reserved ip-address. (It was found to be set to '192.168.1.146')

NOTICE: PHP message: The response of the connection attempt to "https://office.internal:443" was:

NOTICE: PHP message: Expected was: 0837d9d7709853d983ea2f0d782602f5d8847f6c087e621a

NOTICE: PHP message: The error message was: TLS connect error: error:0A000438:SSL routines::tlsv1 alert internal error

NOTICE: PHP message: Please follow https://github.com/nextcloud/all-in-one/blob/main/reverse-proxy.md#6-how-to-debug-things in order to debug things!

Caddy seems to get the certificate issued correctly using the public DynDNS address.

I exceeded the limit of 5 certificates per domain during my testing and now have to wait.

office:~$ sudo docker logs caddy -f

{"level":"info","ts":1735745218.1380262,"msg":"using config from file","file":"/etc/caddy/Caddyfile"}

{"level":"info","ts":1735745218.139001,"msg":"adapted config to JSON","adapter":"caddyfile"}

{"level":"warn","ts":1735745218.1390214,"msg":"Caddyfile input is not formatted; run 'caddy fmt --overwrite' to fix inconsistencies","adapter":"caddyfile","file":"/etc/caddy/Caddyfile","line":2}

{"level":"info","ts":1735745218.1395395,"logger":"admin","msg":"admin endpoint started","address":":2020","enforce_origin":false,"origins":["//:2020"]}

{"level":"warn","ts":1735745218.1395597,"logger":"admin","msg":"admin endpoint on open interface; host checking disabled","address":":2020"}

{"level":"info","ts":1735745218.139655,"logger":"http.auto_https","msg":"server is listening only on the HTTPS port but has no TLS connection policies; adding one to enable TLS","server_name":"srv0","https_port":443}

{"level":"info","ts":1735745218.1396754,"logger":"http.auto_https","msg":"enabling automatic HTTP->HTTPS redirects","server_name":"srv0"}

{"level":"info","ts":1735745218.1397822,"logger":"tls.cache.maintenance","msg":"started background certificate maintenance","cache":"0xc00029f480"}

{"level":"info","ts":1735745218.1399503,"logger":"http","msg":"enabling HTTP/3 listener","addr":":443"}

{"level":"info","ts":1735745218.1405015,"msg":"failed to sufficiently increase receive buffer size (was: 208 kiB, wanted: 7168 kiB, got: 416 kiB). See https://github.com/quic-go/quic-go/wiki/UDP-Buffer-Sizes for details."}

{"level":"info","ts":1735745218.1407797,"logger":"http.log","msg":"server running","name":"srv0","protocols":["h1","h2","h3"]}

{"level":"info","ts":1735745218.140962,"logger":"http.log","msg":"server running","name":"remaining_auto_https_redirects","protocols":["h1","h2","h3"]}

{"level":"info","ts":1735745218.1409805,"logger":"http","msg":"enabling automatic TLS certificate management","domains":["xxxxxx.myhome-server.de"]}

{"level":"info","ts":1735745218.1422515,"msg":"autosaved config (load with --resume flag)","file":"/config/caddy/autosave.json"}

{"level":"info","ts":1735745218.1422822,"msg":"serving initial configuration"}

{"level":"info","ts":1735745218.1425366,"logger":"tls.obtain","msg":"acquiring lock","identifier":"xxxxxx.myhome-server.de"}

{"level":"info","ts":1735745218.1638799,"logger":"tls.obtain","msg":"lock acquired","identifier":"xxxxxx.myhome-server.de"}

{"level":"info","ts":1735745218.164086,"logger":"tls.obtain","msg":"obtaining certificate","identifier":"xxxxxx.myhome-server.de"}

{"level":"info","ts":1735745218.1641119,"logger":"tls","msg":"storage cleaning happened too recently; skipping for now","storage":"FileStorage:/data/caddy","instance":"8ee724e6-3576-4129-996d-15d33ed14d49","try_again":1735831618.1641095,"try_again_in":86399.999999528}

{"level":"info","ts":1735745218.1642547,"logger":"tls","msg":"finished cleaning storage units"}

{"level":"info","ts":1735745218.1649287,"logger":"http","msg":"waiting on internal rate limiter","identifiers":["xxxxxx.myhome-server.de"],"ca":"https://acme-v02.api.letsencrypt.org/directory","account":""}

{"level":"info","ts":1735745218.1649537,"logger":"http","msg":"done waiting on internal rate limiter","identifiers":["xxxxxx.myhome-server.de"],"ca":"https://acme-v02.api.letsencrypt.org/directory","account":""}

{"level":"info","ts":1735745218.164967,"logger":"http","msg":"using ACME account","account_id":"https://acme-v02.api.letsencrypt.org/acme/acct/2145544765","account_contact":[]}

{"level":"error","ts":1735745218.9803617,"logger":"tls.obtain","msg":"could not get certificate from issuer","identifier":"xxxxxx.myhome-server.de","issuer":"acme-v02.api.letsencrypt.org-directory","error":"HTTP 429 urn:ietf:params:acme:error:rateLimited - too many certificates (5) already issued for this exact set of domains in the last 168h0m0s, retry after 2025-01-02 18:20:16 UTC: see https://letsencrypt.org/docs/rate-limits/#new-certificates-per-exact-set-of-hostnames"}

{"level":"error","ts":1735745218.9804375,"logger":"tls.obtain","msg":"will retry","error":"[xxxxxx.myhome-server.de] Obtain: [xxxxxx.myhome-server.de] creating new order: attempt 1: https://acme-v02.api.letsencrypt.org/acme/new-order: HTTP 429 urn:ietf:params:acme:error:rateLimited - too many certificates (5) already issued for this exact set of domains in the last 168h0m0s, retry after 2025-01-02 18:20:16 UTC: see https://letsencrypt.org/docs/rate-limits/#new-certificates-per-exact-set-of-hostnames (ca=https://acme-v02.api.letsencrypt.org/directory)","attempt":1,"retrying_in":60,"elapsed":0.816458444,"max_duration":2592000}

{"level":"info","ts":1735745243.5883558,"msg":"shutting down apps, then terminating","signal":"SIGTERM"}

{"level":"warn","ts":1735745243.5883925,"msg":"exiting; byeee!! 👋","signal":"SIGTERM"}

{"level":"info","ts":1735745243.588437,"logger":"http","msg":"servers shutting down with eternal grace period"}

{"level":"info","ts":1735745243.5887704,"logger":"tls.obtain","msg":"releasing lock","identifier":"xxxxxx.myhome-server.de"}

{"level":"info","ts":1735745243.5889902,"logger":"admin","msg":"stopped previous server","address":":2020"}

{"level":"info","ts":1735745243.5890195,"msg":"shutdown complete","signal":"SIGTERM","exit_code":0}

My docker-compose file is:

    services:
      nextcloud-aio-mastercontainer:
        image: nextcloud/all-in-one:latest
        init: true
        restart: always
        container_name: nextcloud-aio-mastercontainer # This line is not allowed to be changed as otherwise AIO will not work correctly
        network_mode: bridge
        depends_on:
          - caddy
        volumes:
          - nextcloud_aio_mastercontainer:/mnt/docker-aio-config # This line is not allowed to be changed as otherwise the built-in backup solution will not work
          - /var/run/docker.sock:/var/run/docker.sock:ro # May be changed on macOS, Windows or docker rootless. See the applicable documentation. If adjusting, don't forget to also set 'WATCHTOWER_DOCKER_SOCKET_PATH'!
        ports:
          # - 80:80 # Can be removed when running behind a web server or reverse proxy (like Apache, Nginx, Caddy, Cloudflare Tunnel and else). See https://github.com/nextcloud/all-in-one/blob/main/reverse-proxy.md
          - 8080:8080
          # - 8443:8443 # Can be removed when running behind a web server or reverse proxy (like Apache, Nginx, Caddy, Cloudflare Tunnel and else). See https://github.com/nextcloud/all-in-one/blob/main/reverse-proxy.md
        environment: # Is needed when using any of the options below
          # AIO_DISABLE_BACKUP_SECTION: false # Setting this to true allows to hide the backup section in the AIO interface. See https://github.com/nextcloud/all-in-one#how-to-disable-the-backup-section
          # AIO_COMMUNITY_CONTAINERS: # With this variable, you can add community containers very easily. See https://github.com/nextcloud/all-in-one/tree/main/community-containers#community-containers
          APACHE_PORT: 11000 # Is needed when running behind a web server or reverse proxy (like Apache, Nginx, Caddy, Cloudflare Tunnel and else). See https://github.com/nextcloud/all-in-one/blob/main/reverse-proxy.md
          APACHE_IP_BINDING: 127.0.0.1 # Should be set when running behind a web server or reverse proxy (like Apache, Nginx, Caddy, Cloudflare Tunnel and else) that is running on the same host. See https://github.com/nextcloud/all-in-one/blob/main/reverse-proxy.md
          APACHE_ADDITIONAL_NETWORK: nextcloud_caddy_proxy # (Optional) Connect the apache container to an additional docker network. Needed when behind a web server or reverse proxy (like Apache, Nginx, Caddy, Cloudflare Tunnel and else) running in a different docker network on same server. See https://github.com/nextcloud/all-in-one/blob/main/reverse-proxy.md
          BORG_RETENTION_POLICY: --keep-within=7d --keep-weekly=4 --keep-monthly=6 --keep-yearly=3 # Allows to adjust borgs retention policy. See https://github.com/nextcloud/all-in-one#how-to-adjust-borgs-retention-policy
          # COLLABORA_SECCOMP_DISABLED: false # Setting this to true allows to disable Collabora's Seccomp feature. See https://github.com/nextcloud/all-in-one#how-to-disable-collaboras-seccomp-feature
          NEXTCLOUD_DATADIR: /home/ncdata # Allows to set the host directory for Nextcloud's datadir. ⚠️⚠️⚠️ Warning: do not set or adjust this value after the initial Nextcloud installation is done! See https://github.com/nextcloud/all-in-one#how-to-change-the-default-location-of-nextclouds-datadir
          # NEXTCLOUD_MOUNT: /mnt/ # Allows the Nextcloud container to access the chosen directory on the host. See https://github.com/nextcloud/all-in-one#how-to-allow-the-nextcloud-container-to-access-directories-on-the-host
          # NEXTCLOUD_UPLOAD_LIMIT: 16G # Can be adjusted if you need more. See https://github.com/nextcloud/all-in-one#how-to-adjust-the-upload-limit-for-nextcloud
          # NEXTCLOUD_MAX_TIME: 3600 # Can be adjusted if you need more. See https://github.com/nextcloud/all-in-one#how-to-adjust-the-max-execution-time-for-nextcloud
          # NEXTCLOUD_MEMORY_LIMIT: 512M # Can be adjusted if you need more. See https://github.com/nextcloud/all-in-one#how-to-adjust-the-php-memory-limit-for-nextcloud
          # NEXTCLOUD_TRUSTED_CACERTS_DIR: /path/to/my/cacerts # CA certificates in this directory will be trusted by the OS of the nextcloud container (Useful e.g. for LDAPS) See https://github.com/nextcloud/all-in-one#how-to-trust-user-defined-certification-authorities-ca
          # NEXTCLOUD_STARTUP_APPS: deck twofactor_totp tasks calendar contacts notes # Allows to modify the Nextcloud apps that are installed on starting AIO the first time. See https://github.com/nextcloud/all-in-one#how-to-change-the-nextcloud-apps-that-are-installed-on-the-first-startup
          # NEXTCLOUD_ADDITIONAL_APKS: imagemagick # This allows to add additional packages to the Nextcloud container permanently. Default is imagemagick but can be overwritten by modifying this value. See https://github.com/nextcloud/all-in-one#how-to-add-os-packages-permanently-to-the-nextcloud-container
          # NEXTCLOUD_ADDITIONAL_PHP_EXTENSIONS: imagick # This allows to add additional php extensions to the Nextcloud container permanently. Default is imagick but can be overwritten by modifying this value. See https://github.com/nextcloud/all-in-one#how-to-add-php-extensions-permanently-to-the-nextcloud-container
          NEXTCLOUD_ENABLE_DRI_DEVICE: true # This allows to enable the /dev/dri device in the Nextcloud container. ⚠️⚠️⚠️ Warning: this only works if the '/dev/dri' device is present on the host! If it should not exist on your host, don't set this to true as otherwise the Nextcloud container will fail to start! See https://github.com/nextcloud/all-in-one#how-to-enable-hardware-acceleration-for-nextcloud
          # ENABLE_NVIDIA_GPU: true # This allows to enable the NVIDIA runtime and GPU access for containers that profit from it. ⚠️⚠️⚠️ Warning: this only works if an NVIDIA gpu is installed on the server. See https://github.com/nextcloud/all-in-one#how-to-enable-hardware-acceleration-for-nextcloud.
          # NEXTCLOUD_KEEP_DISABLED_APPS: false # Setting this to true will keep Nextcloud apps that are disabled in the AIO interface and not uninstall them if they should be installed. See https://github.com/nextcloud/all-in-one#how-to-keep-disabled-apps
          # SKIP_DOMAIN_VALIDATION: false # This should only be set to true if things are correctly configured. See https://github.com/nextcloud/all-in-one?tab=readme-ov-file#how-to-skip-the-domain-validation
          # TALK_PORT: 3478 # This allows to adjust the port that the talk container is using. See https://github.com/nextcloud/all-in-one#how-to-adjust-the-talk-port
          # WATCHTOWER_DOCKER_SOCKET_PATH: /var/run/docker.sock # Needs to be specified if the docker socket on the host is not located in the default '/var/run/docker.sock'. Otherwise mastercontainer updates will fail. For macos it needs to be '/var/run/docker.sock'
        # security_opt: ["label:disable"] # Is needed when using SELinux

      # Optional: Caddy reverse proxy. See https://github.com/nextcloud/all-in-one/discussions/575
      # Hint: You need to uncomment APACHE_PORT: 11000 above, adjust cloud.example.com to your domain and uncomment the necessary docker volumes at the bottom of this file in order to make it work
      # You can find further examples here: https://github.com/nextcloud/all-in-one/discussions/588
      caddy:
        image: caddy:alpine
        restart: always
        container_name: caddy
        networks:
          - nextcloud_caddy_proxy
        volumes:
          - caddy_certs:/certs
          - caddy_config:/config
          - caddy_data:/data
          - caddy_sites:/srv
        ports:
          - 80:80
          - 443:443
        configs:
          - source: Caddyfile
            target: /etc/caddy/Caddyfile

    configs:
      Caddyfile:
        content: |
          {
            admin :2020
          }
          https://audanet.myhome-server.de:443 {
            reverse_proxy localhost:11000
          }

    networks:
      nextcloud_caddy_proxy:
        driver: bridge

    volumes: # If you want to store the data on a different drive, see https://github.com/nextcloud/all-in-one#how-to-store-the-filesinstallation-on-a-separate-drive
      nextcloud_aio_mastercontainer:
        name: nextcloud_aio_mastercontainer # This line is not allowed to be changed as otherwise the built-in backup solution will not work
      caddy_certs:
      caddy_config:
      caddy_data:
      caddy_sites:

I am not quite sure whether I should use localhost (as I understand it, only for host networking), nextcloud-aio-apache, or nextcloud-aio-domaincheck for Docker-internal DNS name resolution in the inline Caddyfile.

The Caddy reverse proxy admin port defaults to 2019. It conflicts with an open port 2019 in the nextcloud-aio container (maybe another Caddy inside?), so I changed it to 2020.

    {
      admin :2020
    }

    https://audanet.myhome-server.de:443 {
      reverse_proxy localhost:11000
    }

Resolving nextcloud-aio-domaincheck does not seem to work from the Caddy container.
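
For context, Docker's embedded DNS resolves container names only for containers attached to the same user-defined network, and a container that is not running will not resolve either. A quick way to see which candidate names the Caddy container can resolve might look like the sketch below. It assumes the `caddy:alpine` image ships `getent` (BusyBox `nslookup` would be an alternative), and the helper name `resolve_in_caddy` is made up for illustration.

```shell
# resolve_in_caddy NAME...: check, from inside the "caddy" container, whether
# each given name resolves (hypothetical helper, run on the host).
resolve_in_caddy() {
    for name in "$@"; do
        # getent exits non-zero when the name cannot be resolved
        if docker exec caddy getent hosts "$name" >/dev/null 2>&1; then
            echo "$name: resolves"
        else
            echo "$name: does not resolve"
        fi
    done
}

# Usage (not run here):
#   resolve_in_caddy nextcloud-aio-apache nextcloud-aio-domaincheck
```

A name that resolves here is a candidate for the `reverse_proxy` upstream in place of `localhost`.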