Hello,

I know there are many discussions on this topic, but none of them help me: I can’t upload files larger than 2 GB with a web browser (Firefox and Chromium).

I don’t use the ownCloud client because it causes many problems. It also synchronizes directories and files that I have excluded in the configuration! When I remove files in the cloud via a web browser, it also removes the corresponding local files on the client PC without any notification!

What a mess, what a shit!!!

How can I determine the true cause of this error and perhaps eliminate it?

Error message in the Nextcloud log:

Sabre\DAV\Exception\BadRequest: HTTP/1.1 400 expected filesize 2425871160 got 2026938368

- /srv/http/nextcloud/apps/dav/lib/Connector/Sabre/Directory.php - line 137: OCA\DAV\Connector\Sabre\File->put(Resource id #14)

- /srv/http/nextcloud/3rdparty/sabre/dav/lib/DAV/Server.php - line 1072: OCA\DAV\Connector\Sabre\Directory->createFile(‘nv-recovery-st-…’, Resource id #14)

- /srv/http/nextcloud/3rdparty/sabre/dav/lib/DAV/CorePlugin.php - line 525: Sabre\DAV\Server->createFile(‘Software/Androi…’, Resource id #14, NULL)

- [internal function] Sabre\DAV\CorePlugin->httpPut(Object(Sabre\HTTP\Request), Object(Sabre\HTTP\Response))

- /srv/http/nextcloud/3rdparty/sabre/event/lib/EventEmitterTrait.php - line 105: call_user_func_array(Array, Array)

- /srv/http/nextcloud/3rdparty/sabre/dav/lib/DAV/Server.php - line 479: Sabre\Event\EventEmitter->emit(‘method PUT’, Array)

- /srv/http/nextcloud/3rdparty/sabre/dav/lib/DAV/Server.php - line 254: Sabre\DAV\Server->invokeMethod(Object(Sabre\HTTP\Request), Object(Sabre\HTTP\Response))

- /srv/http/nextcloud/apps/dav/appinfo/v1/webdav.php - line 60: Sabre\DAV\Server->exec()

- /srv/http/nextcloud/remote.php - line 165: require_once(’/srv/http/nextc…’)

- {main}

My (cloud) server and client configs

Cloud client :

OS Arch Linux x86_64 (up to date)

linux kernel 4.9.27 (with “Large File” option set)

FS type ext4

Web browser firefox 53.0.2

Chromium 58.0.3029.110

Cloud server :

OS Arch Linux x86_64 (up to date)

linux kernel 4.9.27 (with “Large File” option set)

FS type ext4

Apache httpd 2.4.25

php 7.1.5

Nextcloud 11.0.3

/etc/php/php.ini :

post_max_size = 8G

upload_max_filesize = 8G

/srv/http/nextcloud/.user.ini :

upload_max_filesize=8G

post_max_size=8G

memory_limit=1024M

Nextcloud admin area, Additional settings :

Maximum upload size : 8GB

Thanks in advance,

Michael

Is there a file /etc/php/apache2/php.ini? If so, check the max sizes in there. They might be set to 2G.
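As a rough cross-check of these limits, the Python sketch below converts php.ini shorthand sizes to bytes and compares them with the numbers from the error message. The helper name `php_size_to_bytes` and the `limits` dictionary are made up for illustration; the values are the ones quoted in this thread, and the sketch does not read the configuration that Apache actually loads.

```python
import re

# Multipliers for PHP's shorthand byte notation (K, M, G are powers of 1024).
_UNITS = {"": 1, "K": 1024, "M": 1024**2, "G": 1024**3}


def php_size_to_bytes(value: str) -> int:
    """Convert a php.ini size such as '8G' or '1024M' to bytes."""
    match = re.fullmatch(r"(\d+)([KMG]?)", value.strip(), flags=re.IGNORECASE)
    if match is None:
        raise ValueError(f"unsupported size value: {value!r}")
    number, unit = match.groups()
    return int(number) * _UNITS[unit.upper()]


# Values quoted in the thread (hypothetical dictionary, not read from disk).
limits = {
    "post_max_size": "8G",
    "upload_max_filesize": "8G",
}
expected = 2425871160  # "expected filesize" from the log
received = 2026938368  # "got" from the log

for name, raw in limits.items():
    limit = php_size_to_bytes(raw)
    verdict = "ok" if limit >= expected else "too small"
    print(f"{name} = {raw} ({limit} bytes): {verdict}")

print(f"missing bytes: {expected - received}")
print(f"transfer stopped below 2 GiB: {received < 2 * 1024**3}")
```

With the posted settings both limits are far above the file size, and the transfer stopped before reaching 2 GiB, so a limit set to exactly 2G would not explain the received byte count on its own.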

As for files removed on the web also being removed on the client: this is normal behaviour. When syncing, the client also deletes files that were removed at the other location (directly on the server or on the client side), to keep the server and the Nextcloud folder in sync.

Hello Stephan,

This file doesn’t exist. I also checked all other config files for Apache and PHP: there are no entries limiting the maximum upload file size.

Hi Michael,

OK, I am just brainstorming a bit to see if we can find something that helps pinpoint the cause:

- Are both machines on the same network?

- What is between the machines (switch, router, etc.)?

- Is this behaviour also the same if done over the public internet?

- Is there enough memory on the server? (I know it might seem strange, but I am just trying to cover every angle.)

- Have you tried making a wireshark trace on the server, to analyse if and which side is closing the connection?

Hello Stephan,

1. Both boxes are in the same subnet, 192.168.x.0.

2. The server is connected to a WLAN router via Ethernet, the client via Wi-Fi.

3. There are no problems downloading large files from the internet.

4. The server box has 8 GB RAM and isn’t really busy with other jobs (mainly httpd and mariadb).

5. Although I am a Linux expert (programming, kernel and so on), I barely know Wireshark. I don’t know how to recognize which side is closing the connection!

Would you give me a clue as to how I can do it best?

Kind Regards,

Michael

On the server you could install the package tcpdump, which can capture the packets.

You can then run the following command, which will capture packets on eth0 and save them to /tmp/capture.pcap

tcpdump -s0 -i eth0 -w /tmp/capture.pcap

Then start your upload. Wait for the upload to fail and then stop tcpdump by pressing CTRL-C. Keep in mind that the capture logs all data, including the actual file you upload, so save it somewhere with enough space.

Then copy the capture file to a computer that has a graphical interface and Wireshark installed. You can then open the file with Wireshark.

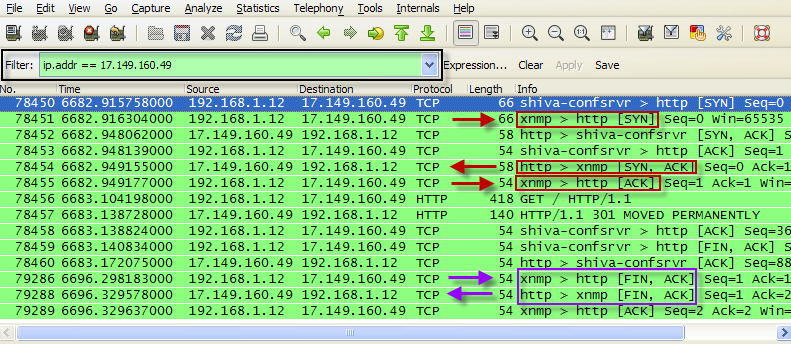

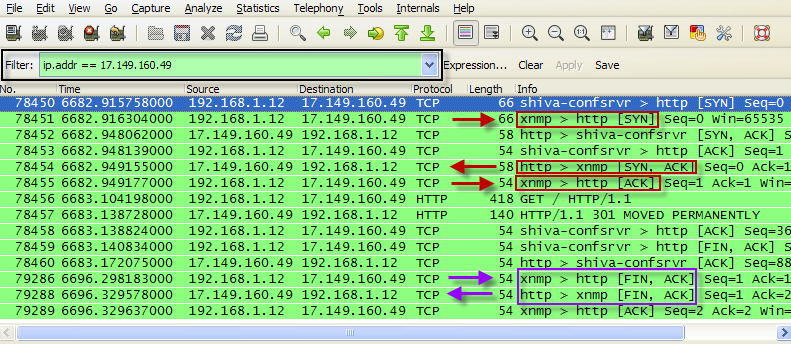

Then you want to look at the FIN packets (at the end of the trace, or very near the end) which would indicate which side is closing the socket. An example of the 3-way handshake (in red), and the FIN (in purple) can be find in the following picture:
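If Wireshark is not at hand, the FIN packets can also be listed with a small script. The following is only a sketch under several assumptions: the capture is a classic pcap file (the format tcpdump writes with -w), the link type is Ethernet, the traffic is IPv4, and there are no VLAN tags. The function name `find_fin_packets` is made up for this example.

```python
import os
import socket
import struct


def find_fin_packets(stream):
    """Yield (packet_no, src_ip, dst_ip) for each IPv4/TCP packet with FIN set.

    Assumes a classic pcap file with Ethernet framing and no VLAN tags.
    """
    global_header = stream.read(24)
    if len(global_header) < 24:
        raise ValueError("truncated pcap global header")
    magic = global_header[:4]
    if magic == b"\xd4\xc3\xb2\xa1":
        endian = "<"  # file written on a little-endian host
    elif magic == b"\xa1\xb2\xc3\xd4":
        endian = ">"  # file written on a big-endian host
    else:
        raise ValueError("not a classic (microsecond) pcap file")
    (linktype,) = struct.unpack(endian + "I", global_header[20:24])
    if linktype != 1:
        raise ValueError("only Ethernet captures (link type 1) are handled")

    packet_no = 0
    while True:
        record = stream.read(16)  # per-packet record header
        if len(record) < 16:
            break
        _sec, _usec, incl_len, _orig_len = struct.unpack(endian + "IIII", record)
        frame = stream.read(incl_len)
        packet_no += 1  # numbering starts at 1, as in Wireshark
        # Ethernet header is 14 bytes; EtherType 0x0800 means IPv4.
        if len(frame) < 14 + 20 or frame[12:14] != b"\x08\x00":
            continue
        ip = frame[14:]
        ip_header_len = (ip[0] & 0x0F) * 4
        # Protocol 6 is TCP; the flags byte sits at offset 13 of the TCP header.
        if ip[9] != 6 or len(ip) < ip_header_len + 14:
            continue
        if ip[ip_header_len + 13] & 0x01:  # FIN bit
            yield packet_no, socket.inet_ntoa(ip[12:16]), socket.inet_ntoa(ip[16:20])


if __name__ == "__main__":
    path = "/tmp/capture.pcap"  # path used in the tcpdump command above
    if os.path.exists(path):
        with open(path, "rb") as capture:
            for packet_no, src, dst in find_fin_packets(capture):
                print(f"packet {packet_no}: FIN from {src} to {dst}")
```

The file is read packet by packet, so a capture of several GB does not have to fit into memory. The first FIN listed near the end of the upload shows which address started closing the connection.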

Hello Stephan,

I have followed your instructions. Here is the result:

Kind Regards,

Michael

Sorry for the somewhat late response.

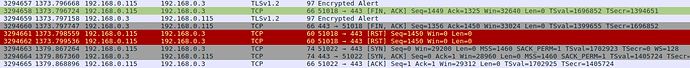

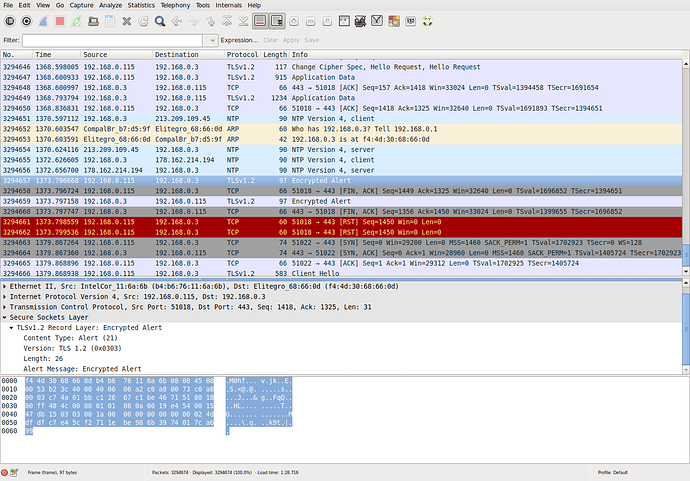

It looks like the .115 IP is terminating the socket, but just before that you can see the Encrypted Alert packet. This indicates that something on the SSL layer is causing the socket to be terminated. Could you open the packet to show more details, check the field “Content Type”, and tell me the value? Below is an example showing a value of 21:

Secure Sockets Layer

TLSv1 Record Layer: Encrypted Alert

Content Type: Alert (21)

Version: TLS 1.0 (0x0301)

Length: 22

Alert Message: Encrypted Alert
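For reference, the fields Wireshark shows here come from the 5-byte TLS record header. The sketch below decodes such a header in Python. The function name `parse_tls_record_header` is made up for illustration, and the sample bytes were built by hand to match the example dissection rather than taken from the actual capture. Note that the content type only identifies the kind of record; the alert level and description sit in the record body, which is encrypted in this case.

```python
import struct

# Record content types defined by the TLS specifications.
CONTENT_TYPES = {
    20: "change_cipher_spec",
    21: "alert",
    22: "handshake",
    23: "application_data",
}


def parse_tls_record_header(data: bytes) -> dict:
    """Decode the 5-byte TLS record header: content type, version, length."""
    if len(data) < 5:
        raise ValueError("a TLS record header is 5 bytes long")
    content_type, major, minor, length = struct.unpack("!BBBH", data[:5])
    return {
        "content_type": content_type,
        "content_type_name": CONTENT_TYPES.get(content_type, "unknown"),
        "version": (major, minor),
        "length": length,
    }


# Hand-built header matching the example: Alert (21), TLS 1.0 (0x0301), length 22.
header = bytes([0x15, 0x03, 0x01, 0x00, 0x16])
print(parse_tls_record_header(header))
```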

Hello Stephan,

If I understand you correctly, you are interested in packet no 3294657 in the following screenshot:

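OK, Content Type 21 only tells us that this record is a TLS alert; because the alert is encrypted, its actual description can’t be read from the trace. One possible cause is that a record got corrupted somehow and couldn’t be decrypted. That case is alert description 21 (decryption_failed), which is defined as: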

“Decryption of a TLSCiphertext record is decrypted in an invalid way: either it was not an even multiple of the block length or its padding values, when checked, were not correct. This message is always fatal.”

It will be difficult to analyse this, since everything is encrypted, so we can’t check the actual content to see if anything is wrong with it.

OK, looking at the trace again: just before the Encrypted Alert, it looks like the clock is being updated. I am wondering whether it drifted just enough to get out of sync, so that once it is updated again the TLS packets are no longer valid. It may be worth checking the clock itself.

Sorry, I can’t give you a direct solution.

I also see these “got x expected y” errors all the time. It feels like everything is terribly broken, and I can’t trust that my data is safe. I also wonder who decided not to move data to the local trash bin when something gets deleted on the server; there’s not even an option for it. So Nextcloud can delete 1000 GiB of data from the local filesystem and then not have enough trash bin space on the server; who comes up with this stuff?

I had a similar issue because I was running Nextcloud inside an nspawn container, which forcibly mounts /tmp inside the container as tmpfs: https://github.com/systemd/systemd/blob/v233/src/nspawn/nspawn-mount.c#L555

Obviously, on a node with little memory this limits PHP, which uses /tmp for uploaded files by default. Changing upload_tmp_dir to /var/tmp in /etc/php/7.0/fpm/php.ini solved the issue for me.
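To see whether the temporary directory could be the bottleneck, one can compare its free space with the size of the failing upload. This is a minimal Python sketch: the helper `has_room_for_upload` is made up for illustration, the upload size is the "expected filesize" from the log in the first post, and the directories checked are assumptions that should be replaced with the upload_tmp_dir PHP really uses. It has to run in the same environment as PHP (for example inside the container), otherwise it sees a different /tmp.

```python
import shutil
import tempfile


def has_room_for_upload(directory: str, upload_bytes: int) -> bool:
    """Return True if the filesystem holding `directory` has enough free space."""
    usage = shutil.disk_usage(directory)
    return usage.free >= upload_bytes


# Size of the failing upload, taken from the "expected filesize" log entry.
upload_bytes = 2425871160

# Assumption: the system temp dir and /var/tmp stand in for PHP's upload_tmp_dir.
for directory in (tempfile.gettempdir(), "/var/tmp"):
    try:
        free = shutil.disk_usage(directory).free
    except OSError as exc:
        print(f"{directory}: cannot check ({exc})")
        continue
    enough = has_room_for_upload(directory, upload_bytes)
    verdict = "enough" if enough else "NOT enough"
    print(f"{directory}: {free} bytes free, {verdict} for {upload_bytes} bytes")
```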