I’ve been mulling the idea of replicating my home server to a dedicated server in a datacentre for when I’m away (and the home server is shut down) or I happen to lose the NC server for whatever reason.

I’ve seen some examples using GlusterFS or DRBD, and a master/slave DB relationship, but I’m looking at the feasibility of a slightly different approach:

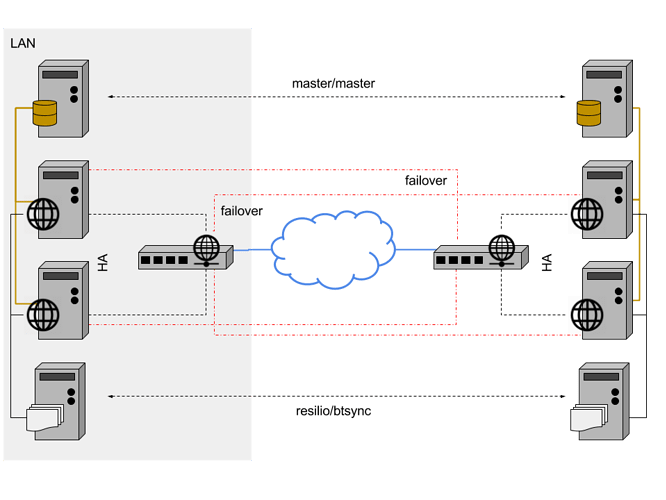

Apart from the second webserver, my home system is currently set up as shown above. Data is stored on the container host and passed through to the container running NC. The database is also in its own container. The NC server is accessible via a reverse proxy.

I’ve been using Resilio/BTSync since its release and feel it’s a good, solid sync engine. Using it to keep the data folders in sync across the two systems via bidirectional sync looks like a good way to go, as it works in real time. I’m not considering external storage right now as I think BTSync is fast enough that it’d avoid database issues.
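
To make the "data folders in sync" part concrete, here is a minimal Python sketch of how the two data folders could be checked for drift. It is only an illustration: the function names `manifest` and `diff_manifests` are made up for this post, and in practice you would build the manifest on each machine and compare the results rather than pointing at two local paths. It only compares files on disk and says nothing about whether each NC database agrees with those files.

```python
import hashlib
from pathlib import Path


def manifest(root: Path) -> dict[str, str]:
    """Map each file path (relative to root) to its SHA-256 digest."""
    result = {}
    for path in sorted(root.rglob("*")):
        if not path.is_file():
            continue
        digest = hashlib.sha256()
        with path.open("rb") as handle:
            # Read in 1 MiB chunks so large files are not loaded into memory at once.
            for chunk in iter(lambda: handle.read(1024 * 1024), b""):
                digest.update(chunk)
        result[path.relative_to(root).as_posix()] = digest.hexdigest()
    return result


def diff_manifests(a: dict[str, str], b: dict[str, str]) -> dict[str, list[str]]:
    """Report files present on only one side, or present on both with different content."""
    return {
        "only_in_a": sorted(a.keys() - b.keys()),
        "only_in_b": sorted(b.keys() - a.keys()),
        "changed": sorted(k for k in a.keys() & b.keys() if a[k] != b[k]),
    }
```

If all three lists come back empty, the two folders hold the same files with the same content at the moment of the check.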

Now, could someone tell me why this wouldn’t work, please? If Gluster or similar is a hard requirement, I’d like to know why.