@grouchysysadmin thanks for the guidance. I am working through it together with the GlusterFS documentation.

My current setup:

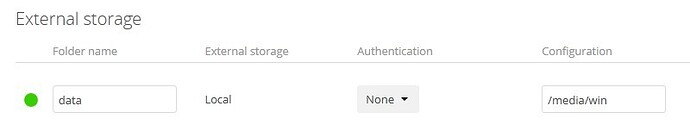

Server 1 - Dell PowerEdge 2950, 32 GB RAM, Ubuntu 16.04 LTS, running Nextcloud 10.0.1, PHP 7, MySQL, Apache 2 and APCu caching. Data is stored on /dev/sdb1 (836 GB), mounted at /media/win, in the data folder. Bonded IP: 192.168.1.201

Server 2 - Dell PowerEdge R710, 32 GB RAM, Ubuntu 16.04 LTS, running Nextcloud 10.0.1, PHP 7, MySQL, Apache 2 and APCu caching. Data is stored on /dev/sdb1 (836 GB), mounted at /media/win, in the data folder. Bonded IP: 192.168.1.202

I would like to ask whether I can use the existing storage folder for the replicated volume, i.e. the data folder on /dev/sdb1 mounted at /media/win (which is also mounted as external local storage on both Nextcloud instances), or whether I need to create a separate storage area and replicated volume.

I ask because I already have a large amount of data stored in the data folder on /dev/sdb1 (/media/win).

Server 1

    borgf003@CLD01:~$ lsblk
    NAME                 MAJ:MIN RM   SIZE RO TYPE MOUNTPOINT
    sda                    8:0    0 136.1G  0 disk
    ├─sda1                 8:1    0   487M  0 part /boot
    ├─sda2                 8:2    0     1K  0 part
    └─sda5                 8:5    0 135.7G  0 part
      ├─CLD01--vg-root   252:0    0 103.6G  0 lvm  /
      └─CLD01--vg-swap_1 252:1    0    32G  0 lvm  [SWAP]
    sdb                    8:16   0 836.6G  0 disk
    └─sdb1                 8:17   0 836.6G  0 part /media/win
    sr0                   11:0    1  1024M  0 rom
    sr1                   11:1    1  1024M  0 rom
    borgf003@CLD01:~$

Server 2

    borgf003@CLD02:~$ lsblk
    NAME                 MAJ:MIN RM   SIZE RO TYPE MOUNTPOINT
    sda                    8:0    0 278.9G  0 disk
    ├─sda1                 8:1    0   487M  0 part /boot
    ├─sda2                 8:2    0     1K  0 part
    └─sda5                 8:5    0 278.4G  0 part
      ├─CLD02--vg-root   252:0    0 274.4G  0 lvm  /
      └─CLD02--vg-swap_1 252:1    0     4G  0 lvm  [SWAP]
    sdb                    8:16   0 836.6G  0 disk
    └─sdb1                 8:17   0 836.6G  0 part /media/win
    sr0                   11:0    1  1024M  0 rom
    borgf003@CLD02:~$

My installation so far (same steps on both servers):

Installed GlusterFS

    sudo apt-get install glusterfs-server

Checked the GlusterFS version

    sudo glusterfs --version
    ......
    glusterfs 3.7.6 built on Dec 25 2015 20:50:44
    ......

Added host name entries to /etc/hosts

    # GlusterFS
    192.168.1.201 gluster1
    192.168.1.202 gluster2
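To double-check these entries, a small lookup along the following lines could be used. This is only a sketch: the function name `lookup_host` is hypothetical (my own), and it reads the hosts file directly rather than going through the system resolver.

```shell
# Print the IP address that a hosts file maps to a given name.
# $1 = host name to look up
# $2 = hosts file to read (assumption: defaults to /etc/hosts)
lookup_host() {
  awk -v name="$1" '
    /^[[:space:]]*#/ { next }              # skip full-line comments
    {
      for (i = 2; i <= NF; i++) {
        if ($i ~ /^#/) break               # stop at an inline comment
        if ($i == name) { print $1; exit } # first match wins
      }
    }
  ' "${2:-/etc/hosts}"
}

# Example: lookup_host gluster2
```

Running `lookup_host gluster1` and `lookup_host gluster2` on each server should print the bonded IP of the respective node; empty output means the name is missing from the file.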

Probed each server

Gluster 1

    borgf003@CLD01:~$ sudo gluster peer probe gluster2
    peer probe: success. Host gluster2 port 24007 already in peer list
    borgf003@CLD01:~$

Gluster 2

    borgf003@CLD02:~$ sudo gluster peer probe gluster1
    peer probe: success. Host gluster1 port 24007 already in peer list
    borgf003@CLD02:~$

Checked peer status

Gluster 1

    borgf003@CLD01:~$ sudo gluster peer status
    Number of Peers: 1

    Hostname: gluster2
    Uuid: c259823a-5369-424a-a9b8-d9e67f926aed
    State: Accepted peer request (Disconnected)
    borgf003@CLD01:~$

Gluster 2

    borgf003@CLD02:~$ sudo gluster peer status
    Number of Peers: 1

    Hostname: gluster1
    Uuid: 6f9545ac-3d49-4599-857a-17a22266945d
    State: Accepted peer request (Connected)
    borgf003@CLD02:~$

On Gluster 1 the peer gluster2 is shown as (Disconnected), while Gluster 2 shows gluster1 as (Connected). Is this OK?
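To keep an eye on this while troubleshooting, a filter like the one below could list only the peers reported as disconnected. It is a rough sketch: the helper name `disconnected_peers` is hypothetical (my own), and it assumes the `gluster peer status` output keeps the layout shown above, with a `Hostname:` line followed by a `State:` line for each peer.

```shell
# Read `gluster peer status` output on stdin and print the host name of
# every peer whose State line contains "(Disconnected)".
disconnected_peers() {
  awk '
    /^Hostname:/ { host = $2 }                     # remember the current peer
    /^State:/ && /\(Disconnected\)/ { print host } # report it if disconnected
  '
}

# Example: sudo gluster peer status | disconnected_peers
```

With the output from Gluster 1 above this would print `gluster2`; on Gluster 2 it would print nothing.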

UFW

To be safe, I added the following UFW rules so that each server accepts traffic from the other:

Gluster 1

    sudo ufw allow from 192.168.1.202

Gluster 2

    sudo ufw allow from 192.168.1.201

From here on, I need guidance on whether I can use my already configured data folders as described above.