Hey!

I am new here and also new to the whole Nextcloud/Docker universe, so first of all I apologize for this unsophisticated post.

I did a fresh Nextcloud installation on my OMV (OpenMediaVault) via the GUI, with MariaDB and Let's Encrypt. Everything works fine, but I am wondering how to set up reliable automated backups of my data and of the whole installation.

I connected a second external drive to my server and tried to follow the steps described in the Nextcloud documentation. Unfortunately, I was not able to write a working script that backs up everything I would need to rebuild the server after a system crash or something similar.

Here are the bash commands I tried to use:

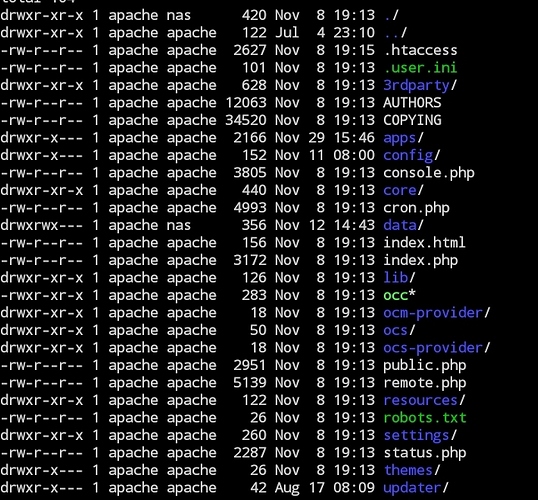

```bash
docker exec -it nextcloud bash
sudo -u abc php /config/www/nextcloud/occ maintenance:mode --on
exit
```
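Because `docker exec -it ... bash` followed by `exit` needs an interactive terminal, that sequence cannot run from a script or cron job. A minimal sketch of the non-interactive equivalent, assuming the same container name (`nextcloud`), user `abc` and occ path as in the commands above; `nc_occ` is only a hypothetical helper name:

```bash
#!/usr/bin/env bash
set -euo pipefail

# Pass the occ call straight to docker exec: no interactive shell and no -it,
# so it also works from a script or cron job.
# nc_occ is a hypothetical helper name; container "nextcloud", user "abc"
# and the occ path are taken from the commands above.
nc_occ() {
  docker exec nextcloud sudo -u abc php /config/www/nextcloud/occ "$@"
}
```

Usage would then be `nc_occ maintenance:mode --on` before the backup and `nc_occ maintenance:mode --off` afterwards.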

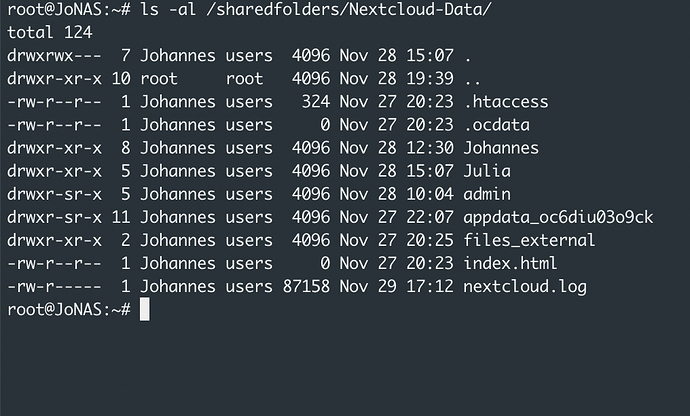

```bash
tar -cpzf /sharedfolders/Next_Backup/nextcloud_files_$now.tar.gz /sharedfolders/Nextcloud-Data/
docker exec mariadb sh -c 'exec mysqldump --single-transaction -h localhost -u 1000 -p100 nextcloud' > /sharedfolders/Next_Backup/nextcloudDB_1.sql
docker exec -it nextcloud bash
sudo -u abc php /config/www/nextcloud/occ maintenance:mode --off
```

With these commands I was able to create backup files of the data and the database, but I would like to automate the process. Is this possible, and how? Or is there a better way to do this? (And how would I restore the data if needed?)
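A minimal sketch of how the steps above could be combined into one script that cron can call, assuming the container names (`nextcloud`, `mariadb`), paths and database credentials from the commands above. The script name `nextcloud-backup.sh`, the timestamp format, the 14-day retention and the `restore` mode are my own assumptions, not something taken from the Nextcloud documentation; the restore part follows the usual pattern (put the files back, import the SQL dump, run `occ maintenance:data-fingerprint`), but it should be tried on a spare machine before relying on it.

```bash
#!/usr/bin/env bash
# nextcloud-backup.sh (hypothetical name): back up or restore Nextcloud data + DB.
# Container names, paths and DB credentials are copied from the commands above;
# adjust them to your own setup.
set -euo pipefail

NC_CONTAINER="nextcloud"
DB_CONTAINER="mariadb"
OCC="/config/www/nextcloud/occ"
DATA_DIR="/sharedfolders/Nextcloud-Data"
BACKUP_DIR="/sharedfolders/Next_Backup"
DB_USER="1000"
DB_PASS="100"
DB_NAME="nextcloud"
KEEP_DAYS=14   # assumption: delete archives older than 14 days

# Run occ as user abc without an interactive shell (works from cron).
occ() {
  docker exec "$NC_CONTAINER" sudo -u abc php "$OCC" "$@"
}

backup() {
  local stamp="$1"
  mkdir -p "$BACKUP_DIR"
  occ maintenance:mode --on
  # If a later step fails, set -e aborts and this trap still ends maintenance mode.
  trap 'occ maintenance:mode --off' EXIT
  # -C stores paths relative to DATA_DIR, which makes restoring simpler.
  tar -cpzf "$BACKUP_DIR/nextcloud_files_$stamp.tar.gz" -C "$DATA_DIR" .
  # The quotes keep the whole mysqldump command as ONE argument to sh -c.
  docker exec "$DB_CONTAINER" sh -c \
    "exec mysqldump --single-transaction -u '$DB_USER' -p'$DB_PASS' '$DB_NAME'" \
    > "$BACKUP_DIR/nextcloudDB_$stamp.sql"
  occ maintenance:mode --off
  trap - EXIT
  # Remove old backups based on file modification time.
  find "$BACKUP_DIR" -maxdepth 1 -type f \
    \( -name 'nextcloud_files_*.tar.gz' -o -name 'nextcloudDB_*.sql' \) \
    -mtime +"$KEEP_DAYS" -delete
}

restore() {
  local stamp="$1"
  occ maintenance:mode --on
  trap 'occ maintenance:mode --off' EXIT
  # Extracts over the existing data dir; files created after the backup are NOT removed.
  tar -xpzf "$BACKUP_DIR/nextcloud_files_$stamp.tar.gz" -C "$DATA_DIR"
  # -i (without -t) so the dump can be fed to mysql on stdin.
  docker exec -i "$DB_CONTAINER" sh -c \
    "exec mysql -u '$DB_USER' -p'$DB_PASS' '$DB_NAME'" \
    < "$BACKUP_DIR/nextcloudDB_$stamp.sql"
  # Tell the sync clients that the server data was restored from a backup.
  occ maintenance:data-fingerprint
  occ maintenance:mode --off
  trap - EXIT
}

main() {
  case "${1:-}" in
    backup)  backup "$(date +%Y%m%d_%H%M%S)" ;;
    restore) restore "${2:?usage: $0 restore <stamp>}" ;;
    *) echo "usage: $0 backup | restore <stamp>" >&2; return 1 ;;
  esac
}

# Only act when called with arguments, e.g. "nextcloud-backup.sh backup".
if [ "$#" -gt 0 ]; then
  main "$@"
fi
```

Run it as root, e.g. `nextcloud-backup.sh backup`, and schedule it via root's crontab (`crontab -e`) or OMV's scheduled jobs page; for example `0 3 * * * /root/nextcloud-backup.sh backup >> /var/log/nextcloud-backup.log 2>&1` would run it nightly at 03:00. A restore would then be e.g. `nextcloud-backup.sh restore 20191201_030000`, using the stamp from the archive names. Note that this covers only the data folder and the database: `config.php` lives in the container's `/config` volume (at `/config/www/nextcloud/config/config.php`), so whichever host folder is mapped to `/config` should be archived the same way.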

Thank you very much in advance and kind regards!

Here is my system configuration:

Server configuration detail

Operating system: Linux 4.19.0-0.bpo.6-amd64 #1 SMP Debian 4.19.67-2+deb10u2~bpo9+1 (2019-11-12) x86_64

Webserver: nginx/1.16.1 (fpm-fcgi)

Database: mysql 10.4.10

PHP version: 7.3.11

Modules loaded: Core, date, libxml, pcre, zlib, filter, hash, readline, Reflection, SPL, session, cgi-fcgi, bz2, ctype, curl, dom, fileinfo, ftp, gd, gmp, iconv, intl, json, ldap, mbstring, openssl, pcntl, PDO, pgsql, posix, standard, SimpleXML, smbclient, sodium, sqlite3, xml, xmlwriter, zip, exif, imap, mysqlnd, pdo_pgsql, pdo_sqlite, Phar, xmlreader, pdo_mysql, apcu, igbinary, redis, memcached, imagick, mcrypt, libsmbclient, Zend OPcache

Nextcloud version: 17.0.1 - 17.0.1.1