Thanks to everyone who’s taken an interest in this. With master-master not supported, I don’t see a way of expanding this beyond a local-network PoC, so I will spin it all down.

What I’ve learned:

- SyncThing is super reliable, handles thousands upon thousands of files, and is flexible enough for a use case such as this: full webapp replication between several servers without any fuss. However:

  - Feasibility in a distributed deployment model, with latency and potentially flaky connections, wasn’t tested thoroughly. While the version management was spot on at picking up out-of-sync files, it would need far more testing before being even remotely considered for a larger deployment.

  - Load on the server can be excessive if left set up in a default state; however, intentionally reducing the cap for the processes will obviously impact sync speed and reliability.

- Data/app replication between sites is not enough if you have multiple nodes sharing a single database.

  - The first few attempts at an NC upgrade, for example, led to issues with every other node once the first had a) updated itself and b) made changes to the database.

    - This may be rectified by undertaking upgrades on each node individually but stopping at the DB step, and only running that step on one node. I didn’t test this though.

  - I think a common assumption is that you only need to replicate /data. However, what happens if an admin adds an app on one node? It doesn’t show up on the others. Same for themes.

- Galera is incredible.

  - The way it instantly replicates, fails over and recovers, particularly combined with HAProxy, which can instantly see when a DB is down and divert, is so silky smooth I couldn’t believe it.

  - A power cut entirely wiped Galera out and I had to build it again, though this wouldn’t happen in a distributed scenario. Despite the cluster failure, I was still able to extract the database and start again with little fuss anyway.

  - The master-master configuration is not compatible with NC, so at best you’ll have a master-slave(n) configuration, where all nodes have to write to the one master no matter where in the world it might be located. Another solution for multi-master is needed for nodes to be able to work seamlessly as if they’re the primary at all times.
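For the master-slave(n) layout described above, one way to send every write to a single Galera member is to have HAProxy hold the other members in reserve. This is only a sketch and not the configuration used in this PoC: the listener name, the bind address, the 10.10.30.x addresses and the `haproxy_check` user are all placeholders. `option mysql-check` needs that user to exist in the database without a password.

```
# Sketch only: names, addresses and the check user are hypothetical.
listen galera_single_writer
    bind 127.0.0.1:3306
    mode tcp
    # MySQL-protocol health check using a passwordless probe user.
    option mysql-check user haproxy_check
    # One failed probe marks a node down, two good probes bring it back.
    default-server inter 2s fall 1 rise 2
    # db1 takes all traffic while it is healthy.
    server db1 10.10.30.1:3306 check
    # Servers marked "backup" only receive traffic when db1 is down.
    server db2 10.10.30.2:3306 check backup
    server db3 10.10.30.3:3306 check backup
```

Each NC node would then point its database host at its local HAProxy listener rather than at a Galera member directly. Remote nodes still pay the latency to wherever `db1` lives, which is the limitation noted above.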

- Remote session storage is a thing, and NC needs it if using multiple nodes behind a floating FQDN.

  - Otherwise refreshing the page could take you to another node and your session would be nonexistent. Redis was a piece of cake to set up on a dedicated node (though it could also live on an existing node) and handled the sessions fine.

  - It doesn’t seem to scale well though: the documentation suggests a VPN or tunneling to reach each Redis node in a Redis “cluster” (not a real cluster), as authentication is plaintext or nothing, and that’s bad if you’re considering publishing it to the internet for nodes to connect to.

- When working with multiple nodes, timing is everything.

  - I undertook upgrades that synced across all nodes without any further input past kicking it off on the first node. However, don’t ever expect to be able to take the node out of maintenance mode in a full-sync environment until all nodes have successfully synced; otherwise some nodes will sit in a broken state until the sync completes.

  - Upgrading, installing apps and other sync-related stuff takes quite a bit longer, but the status pages of SyncThing or another distributed storage/sync solution will keep you updated on sync progress.

- The failover game must be strong.

  - By default HAProxy won’t check nodes quickly enough for my liking, meaning that if a node suddenly fails and I refresh, it may result in an error. I found the following backend setting resulted in faster failure detection and was more ruthless about when a node comes back up (it requires 2 successful responses to come back into the cluster, but only one failure to drop out):

server web1 10.10.20.1:80 check fall 1 rise 2
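For context, a fuller backend built around that line might look something like the sketch below. Only the `web1` address and the `fall 1 rise 2` values come from the line above. The backend name, the second server, the `inter 1s` interval and the `/status.php` check are assumptions for illustration.

```
# Sketch only: backend name, web2 and the check URL are hypothetical.
backend nextcloud_web
    mode http
    balance roundrobin
    # Probe over HTTP instead of a bare TCP connect.
    option httpchk GET /status.php
    http-check expect status 200
    # inter 1s: probe every second (HAProxy's default interval is 2s).
    # fall 1:   a single failed probe takes the node out (default is 3).
    # rise 2:   two good probes are needed to bring it back (the default).
    default-server inter 1s fall 1 rise 2
    server web1 10.10.20.1:80 check
    server web2 10.10.20.2:80 check
```

With HAProxy's defaults of `inter 2s fall 3`, a dead node can keep receiving requests for several seconds. Dropping to `fall 1` shortens that window, at the cost of ejecting a node on a single slow or dropped probe.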

In summary, it was a good experiment and I learned a few things. Although I faced no issues with Galera in master-master, I understand it could lead to problems down the road, so it’s not something I’d invest any more time into. I will, however, use some of what I learned here to improve other things I manage, and perhaps I’ll be able to revisit this in the future if Global Scale flops (jokes).

If anyone does wish to have a poke around, check performance and whathaveyou, by all means feel free to do so for the rest of today; this evening it’s all being destroyed.

Thanks particularly to @LukasReschke for your advice!

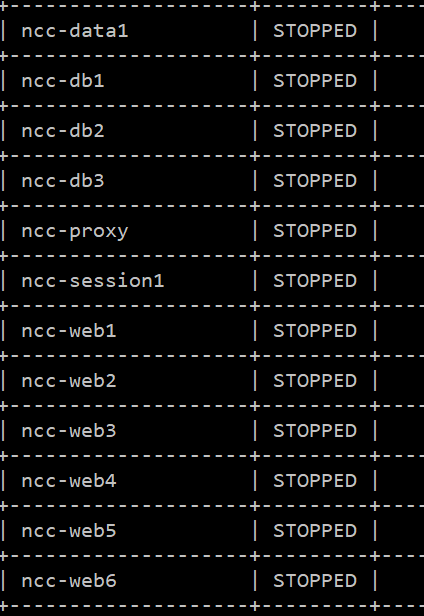

Edit: All now shut down.