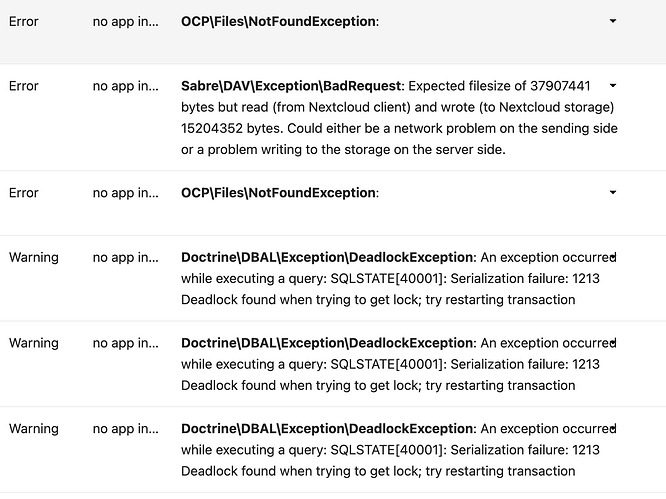

My client is synchronizing a huge amount of data, 1500 GB. In the process, the Nextcloud client encounters many broken connections, with messages such as “socket operation timed out”. The problem is that when such a message appears, Nextcloud takes a long time to retry/resume syncing. I don’t know exactly how long it takes: more than ten minutes, maybe an hour or so. The only way to resume right now is by manually clicking “Force Sync now” or restarting the Nextcloud client.

How can I force the Nextcloud client to automatically retry/resume after one minute (or ten minutes)?

Nextcloud Client: Version 3.9.1, osx-22.5.0.

Server:

$ ./occ support:report

Nextcloud version: 27.0.1 - 27.0.1.2

Operating system: Linux 6.2.16-4-pve #1 SMP PREEMPT_DYNAMIC PVE 6.2.16-4 (2023-07-07T04:22Z) x86_64

Webserver: Unknown (cli)

Database: mysql 10.5.19

PHP version: 8.1.20

Modules loaded: Core, date, libxml, openssl, pcre, zlib, filter, hash, json, pcntl, Reflection, SPL, session, standard, sodium, mysqlnd, PDO, xml, bcmath, calendar, ctype, curl, dom, mbstring, FFI, fileinfo, ftp, gd, gettext, gmp, iconv, igbinary, imagick, imap, intl, ldap, exif, mysqli, pdo_mysql, Phar, posix, readline, redis, shmop, SimpleXML, sockets, sysvmsg, sysvsem, sysvshm, tokenizer, xmlreader, xmlwriter, xsl, zip, Zend OPcache

I am currently trying a workaround, with these settings in nextcloud.cfg:

remotePollInterval=30000 (currently and default: 30 seconds)

forceSyncInterval=300000 (currently 5 minutes, default: 2 hours)

fullLocalDiscoveryInterval=300000 (currently 5 minutes, default: 1 hour)

notificationRefreshInterval=300000 (currently and default: 5 minutes)
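In case it helps anyone trying the same workaround, here is a minimal Python sketch of how those four values could be written into the config file without hand-editing it. It rests on several assumptions: that the keys belong in the `[General]` section, that the file parses as a plain INI file (it is a Qt settings file, so unusual entries might not survive), and that losing comments on rewrite is acceptable. The function name `set_sync_intervals` and the `INTERVALS_MS` dictionary are made up for this example and are not part of Nextcloud.

```python
import configparser

# Values in milliseconds, copied from the settings listed above.
INTERVALS_MS = {
    "remotePollInterval": 30000,
    "forceSyncInterval": 300000,
    "fullLocalDiscoveryInterval": 300000,
    "notificationRefreshInterval": 300000,
}


def set_sync_intervals(cfg_path, intervals=INTERVALS_MS, section="General"):
    """Write the interval keys into `section` of an INI-style config file.

    Hypothetical helper: the section name "General" is an assumption.
    Returns the resulting section as a dict of strings.
    """
    parser = configparser.ConfigParser(
        delimiters=("=",),   # treat only "=" as the key/value separator
        interpolation=None,  # leave "%" characters in values untouched
        strict=False,        # tolerate duplicate keys instead of raising
    )
    parser.optionxform = str  # keep key case (the default lowercases keys)
    parser.read(cfg_path, encoding="utf-8")  # a missing file is skipped

    if not parser.has_section(section):
        parser.add_section(section)
    for key, value in intervals.items():
        parser.set(section, key, str(value))

    # Rewrite the whole file as key=value with no spaces around "=".
    with open(cfg_path, "w", encoding="utf-8") as handle:
        parser.write(handle, space_around_delimiters=False)
    return dict(parser[section])
```

I would call it as `set_sync_intervals("/path/to/nextcloud.cfg")` after quitting the client and making a backup copy of the file. On macOS I believe the file is at `$HOME/Library/Preferences/Nextcloud/nextcloud.cfg`, but please check that on your own machine.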