Nextcloud version: 16.0.5

Operating system and version: CentOS 7

Apache or nginx version: Apache 2.4.6

PHP version: PHP 7.2.24

CPU: Intel Xeon E3-1225 v2, 4 cores / 4 threads, 3.2 GHz

RAM: 16 GB DDR3

Storage: 2x 2 TB SATA in RAID 1

config.php:

```php
'dbtype' => 'mysql',
'version' => '16.0.5.1',
'mysql.utf8mb4' => true,
'loglevel' => 2,
'memcache.local' => '\OC\Memcache\Redis',
'memcache.distributed' => '\OC\Memcache\Redis',
'redis' => [
    'host' => '/var/run/redis/redis.sock',
    'port' => 0,
    'dbindex' => 0,
    'password' => 'xxx',
    'timeout' => 1.5,
],
```

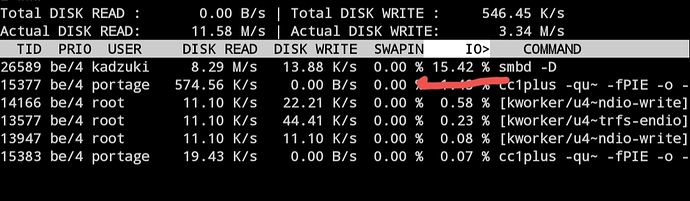

When I run `files:scan`, it takes a very long time. I'm wondering whether this is a performance limitation of Nextcloud itself or whether I can optimize my software configuration.

Example: scanning a single user's folder. All of its files were copied there over FTP, so none of them were known to Nextcloud yet:

```
sudo -u apache php /home/www/nextcloud/occ files:scan --path="dca"
+---------+--------+--------------+
| Folders | Files  | Elapsed time |
+---------+--------+--------------+
| 41892   | 454701 | 16:33:22     |
+---------+--------+--------------+
```

That works out to one file every 131 ms, or about 7.6 files per second.
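As a quick check of that arithmetic, here is a minimal shell sketch. It assumes only a POSIX `sh` and `awk`. The function name `scan_rate` is hypothetical and made up for this example. The sketch converts the `Elapsed time` column from HH:MM:SS to seconds and then divides. It does this twice: once for files only, and once counting folders as well, because the scan summary reports both.

```shell
#!/bin/sh
# Hypothetical helper (not part of occ): turn the "Elapsed time" (HH:MM:SS)
# and an item count from the files:scan summary into average throughput.
scan_rate() {
    # $1 = elapsed time as HH:MM:SS, $2 = number of items scanned
    awk -v elapsed="$1" -v count="$2" 'BEGIN {
        split(elapsed, t, ":")                 # t[1]=hours, t[2]=minutes, t[3]=seconds
        secs = t[1] * 3600 + t[2] * 60 + t[3]  # total elapsed seconds
        printf "%d s total, %.0f ms per item, %.1f items/s\n",
            secs, secs * 1000 / count, count / secs
    }'
}

# Files only: 59602 s total, 131 ms per item, 7.6 items/s
scan_rate 16:33:22 454701
# Files plus folders: 59602 s total, 120 ms per item, 8.3 items/s
scan_rate 16:33:22 "$((454701 + 41892))"
```

Counting the 41892 folders as well, the average is closer to 120 ms per scanned item.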

What takes the time? What does Nextcloud actually do during a scan? Does it analyse the content of each file, or does it just insert each path into the database?

Should I disable file locking, if that is what is taking the time?