I am currently trying to get the high-performance backend for Talk to work on my virtual server (Ubuntu 18.04, hosted at Netcup). I followed the guide "How to Install Nextcloud Talk High Performance Backend with Stun/Turnserver on Ubuntu" from Markus' Blog.

Nextcloud and the servers required for the high-performance backend are running on the same machine. Nextcloud is running on the subdomain cloud.domain.tld, whereas the signaling server is reachable on signaling.domain.tld.

Since Nextcloud is set up with Apache, I opted to use Apache as the reverse proxy for the signaling server as well, with the following config file:

    <VirtualHost *:443>
        ServerName signaling.domain.tld
        DocumentRoot /var/www/html

        LogLevel debug
        CustomLog /var/log/apache2/signaling.domain.tld.access.log combined
        ErrorLog /var/log/apache2/signaling.domain.tld.error.log

        # Enable proxying Websocket requests to the standalone signaling server.
        ProxyPass "/standalone-signaling/" "ws://127.0.0.1:8080/"

        RewriteEngine On
        # Websocket connections from the clients.
        RewriteRule ^/standalone-signaling/spreed$ - [L]
        # Backend connections from Nextcloud.
        RewriteRule ^/standalone-signaling/api/(.*) http://127.0.0.1:8080/api/$1 [L,P]

        Header always set Strict-Transport-Security "max-age=15552000; includeSubDomains"

        SSLCertificateFile /etc/letsencrypt/live/signaling.domain.tld/fullchain.pem
        SSLCertificateKeyFile /etc/letsencrypt/live/signaling.domain.tld/privkey.pem
        Include /etc/letsencrypt/options-ssl-apache.conf
    </VirtualHost>
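For reference, the directives in this config depend on several Apache modules: `mod_ssl`, `mod_rewrite`, `mod_headers`, `mod_proxy`, `mod_proxy_http` (for the `http://` target with the `[P]` flag) and `mod_proxy_wstunnel` (for the `ws://` target). A minimal sketch to check that they are all loaded; the function name `missing_apache_modules` is made up for illustration, and I have not confirmed that a missing module is what causes my problem:

```shell
# Reads the output of `apache2ctl -M` on stdin and prints every module
# required by the config above that is NOT listed as loaded.
# (missing_apache_modules is a hypothetical helper name.)
missing_apache_modules() {
  loaded=$(cat)
  for mod in ssl rewrite headers proxy proxy_http proxy_wstunnel; do
    # Lines look like " proxy_module (shared)"; anchor on the full name
    # so that "proxy" does not match "proxy_http_module".
    printf '%s\n' "$loaded" | grep -q "^[[:space:]]*${mod}_module " || echo "$mod"
  done
}
```

Usage would be `apache2ctl -M 2>/dev/null | missing_apache_modules`. An empty result means all six modules are loaded; on Ubuntu, anything that is printed can be enabled with `a2enmod <name>` followed by an Apache restart.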

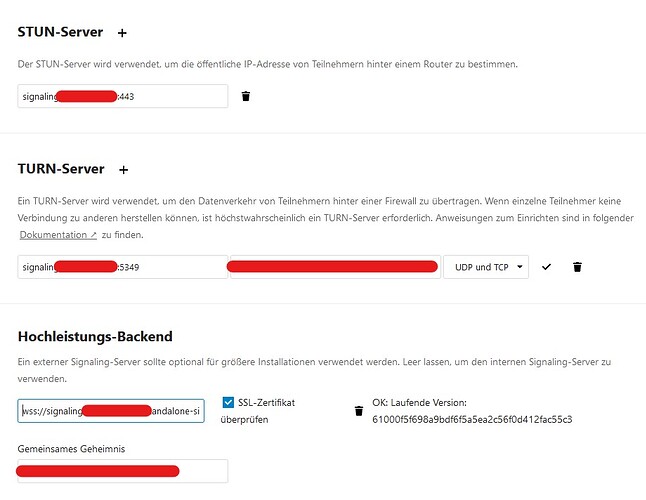

From the outside, everything looks successful. The servers are running, ports are bound, the configuration screen in Nextcloud says everything is fine:

Testing the spreed API also works as expected:

    $ curl -i https://signaling.domain.tld/standalone-signaling/api/v1/welcome
    HTTP/1.1 200 OK
    Date: Fri, 05 Feb 2021 23:49:05 GMT
    Server: nextcloud-spreed-signaling/61000f5f698a9bdf6f5a5ea2c56f0d412fac55c3
    Strict-Transport-Security: max-age=15552000; includeSubDomains
    Content-Type: application/json; charset=utf-8
    Content-Length: 94

    {"nextcloud-spreed-signaling":"Welcome","version":"61000f5f698a9bdf6f5a5ea2c56f0d412fac55c3"}

But when I try to join a conversation, my clients (Firefox and Chrome) tell me “Failed to establish signaling connection. Retrying…”, and the browser console shows the following error:

GET wss://signaling.domain.tld/standalone-signaling/spreed [HTTP/1.1 400 Bad Request 63ms]

This is matched by an entry in the Apache access log:

2a02:[Rest of IP redacted] - - [06/Feb/2021:00:12:26 +0100] "GET /standalone-signaling/spreed HTTP/1.1" 400 4267 "-" "Mozilla/5.0 (Windows NT 10.0; Win64; x64; rv:85.0) Gecko/20100101 Firefox/85.0"
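To see the full response behind the 400 outside the browser, the WebSocket upgrade request can be reproduced with curl. This is only a sketch: the helper names `ws_key` and `ws_handshake` are made up, and the headers are the minimum that RFC 6455 requires for an opening handshake, not necessarily everything the browser sends.

```shell
# Generate a random 16-byte, base64-encoded value for Sec-WebSocket-Key.
# (ws_key and ws_handshake are hypothetical helper names.)
ws_key() {
  head -c 16 /dev/urandom | base64
}

# Send a WebSocket upgrade request to the URL given as $1 and print the
# response status line, headers and body. A working endpoint should answer
# "101 Switching Protocols"; curl then waits until --max-time expires.
ws_handshake() {
  curl -i -sS --http1.1 --max-time 10 \
    -H "Connection: Upgrade" \
    -H "Upgrade: websocket" \
    -H "Sec-WebSocket-Version: 13" \
    -H "Sec-WebSocket-Key: $(ws_key)" \
    "$1"
}
```

Running `ws_handshake https://signaling.domain.tld/standalone-signaling/spreed` goes through Apache, while `ws_handshake http://127.0.0.1:8080/spreed` on the server itself talks to the signaling server directly. If only the first call returns 400, the problem is more likely in the proxy configuration than in the backend.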

Unfortunately, I have no further ideas about what to try or what could be causing the error. Could it be caused by IPv6 clients trying to access the high-performance backend? The backend seems to be configured for IPv4 only, but my assumption was that the reverse proxy would take care of that.
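One way to check the IPv6 theory from the access log is to count the requests to the WebSocket endpoint per address family and status code. A rough sketch, assuming the `combined` log format configured above and a made-up function name `count_by_family`:

```shell
# Reads Apache "combined" log lines on stdin and prints, for requests to
# /standalone-signaling/spreed, one line per "<family> <status> <count>".
# (count_by_family is a hypothetical helper name.)
count_by_family() {
  awk '$7 == "/standalone-signaling/spreed" {
         # Field 1 is the client address; a colon means it is IPv6.
         family = ($1 ~ /:/) ? "ipv6" : "ipv4"
         # Field 9 is the HTTP status code in the combined format.
         count[family " " $9]++
       }
       END { for (k in count) print k, count[k] }' | sort
}
```

Usage would be `count_by_family < /var/log/apache2/signaling.domain.tld.access.log`. If IPv4 clients also show up with status 400, the address family is probably not the cause.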

Any hints? Any further information I could provide?