Hello

After upgrading from ownCloud (OC) 8 → 9 → 10 to Nextcloud 12.0.0, apparently without problems, I'm seeing strange behaviour in the filecache table.

Facts:

- Clients complain that they cannot delete files.

-

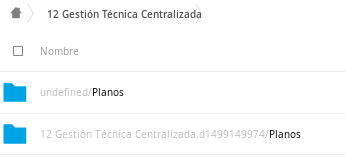

Affected files appear duplicated in the web view, and they are named with full greyed path (as if they came from different shares, but in fact they are not shared or at most they are but only once by the owner)

- In the web view, the Details section shows the non-deletable files as created a while ago by the complaining user (which is false), while the timestamp shown inline at the right-hand end of the file entry says they were modified days, weeks or months earlier (which is true).

-

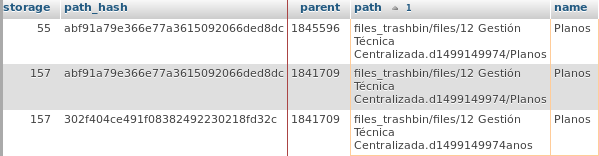

oc_filecache entries related to these files, are duplicated.

- The two entries differ from each other in only one respect. The correct one has:

  `path = files/username/actual_path_to_file/filename`

  and the wrong one has:

  `path = files/username/actual_path_to_filefilename`

  (Note the missing `/` before `filename`.)

- Bad entries can point to files under `files`, `files_versions` or `files_trashbin`; it makes no difference.

- The issue is triggered when a client app starts a sync, but it seems to be random: not every sync triggers it.

A small monitoring tool:

I wrote a simple SQL query to detect all of these wrong filecache entries:

`SELECT * FROM oc_filecache WHERE ( path NOT LIKE CONCAT('%/', name) ) AND path <> name`

The condition after `AND` excludes entries such as `files` itself and other single-component paths, which don't seem to be affected.
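The detection query can be tried out without touching the production database. Below is a minimal, hedged reproduction using Python's built-in `sqlite3` module, assuming a hypothetical three-column version of `oc_filecache` (only `fileid`, `path`, `name`; the real table has many more) filled with the example paths from this post. SQLite spells string concatenation `||` rather than MySQL's `CONCAT()`, so this is a sketch of the predicate, not the exact MySQL statement.

```python
import sqlite3

# Hypothetical, heavily reduced stand-in for oc_filecache: only the columns
# the query touches. The real table has many more (storage, parent, mimetype, ...).
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE oc_filecache (fileid INTEGER PRIMARY KEY, path TEXT, name TEXT)"
)
conn.executemany(
    "INSERT INTO oc_filecache (path, name) VALUES (?, ?)",
    [
        ("files", "files"),  # single-component path: excluded by path <> name
        ("files/username/actual_path_to_file/filename", "filename"),  # correct entry
        ("files/username/actual_path_to_filefilename", "filename"),   # bad entry: '/' missing
    ],
)

# Same predicate as the MySQL query; SQLite concatenates with || instead of CONCAT().
BAD_ENTRIES = """
    SELECT fileid, path FROM oc_filecache
    WHERE (path NOT LIKE ('%/' || name)) AND path <> name
    ORDER BY fileid
"""
bad = conn.execute(BAD_ENTRIES).fetchall()
for fileid, path in bad:
    print(fileid, path)
```

Only the row whose path lacks the `/` is returned. One caveat of a `LIKE`-based check: `%` and `_` inside a file name are treated as wildcards in the pattern, so names containing them could slip through; comparing the trailing substring of `path` with `'/' || name` avoids that.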

The number of bad entries keeps growing without bound: after a few days of operation we can have more than 8,000 of them.

What I have tried so far:

- Raising the PHP memory limit from 128M to 256M, after reading about some similar problems. It had no visible effect on the behaviour.

- `files:cleanup`: it does nothing. `occ` detects no orphaned file entries, even though the table actually contains more than 8,000 wrong ones.

- `files:scan username` (or `--all`): this also does nothing to the filecache table, but it has an annoying side effect: after the scan, affected files are updated on the client side every second and produce conflict copies. This can only be stopped by urgently deleting the bad entries by hand with SQL.

So far, the only thing that works, and only for a short while, is:

`DELETE FROM oc_filecache WHERE ( path NOT LIKE CONCAT('%/', name) ) AND path <> name`

This gives the admin time for a coffee or a rest, but it is a very ugly solution…
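Before running the DELETE workaround on the live table, the same predicate can be rehearsed on a throwaway database. This is a minimal SQLite sketch, not the MySQL procedure: the three-column table and the backup table name `oc_filecache_bad_backup` are hypothetical, and the condition is the one from the SELECT query with balanced parentheses. It copies the bad rows aside and deletes them in one transaction, which works here because SQLite's DDL is transactional; in MySQL, `CREATE TABLE ... SELECT` causes an implicit commit, so there the two steps would not be atomic.

```python
import sqlite3

# isolation_level=None: autocommit mode, so the BEGIN/COMMIT below are explicit.
conn = sqlite3.connect(":memory:", isolation_level=None)
conn.execute("CREATE TABLE oc_filecache (fileid INTEGER PRIMARY KEY, path TEXT, name TEXT)")
conn.executemany(
    "INSERT INTO oc_filecache (path, name) VALUES (?, ?)",
    [
        ("files", "files"),
        ("files/username/actual_path_to_file/filename", "filename"),
        ("files/username/actual_path_to_filefilename", "filename"),  # bad entry
    ],
)

# Fixed predicate shared by both statements (a constant, not user input).
BAD = "(path NOT LIKE ('%/' || name)) AND path <> name"

conn.execute("BEGIN")
try:
    # Hypothetical backup table: keep a copy of the rows about to be deleted.
    conn.execute(f"CREATE TABLE oc_filecache_bad_backup AS SELECT * FROM oc_filecache WHERE {BAD}")
    deleted = conn.execute(f"DELETE FROM oc_filecache WHERE {BAD}").rowcount
    conn.execute("COMMIT")
except sqlite3.Error:
    conn.execute("ROLLBACK")
    raise

backed_up = conn.execute("SELECT COUNT(*) FROM oc_filecache_bad_backup").fetchone()[0]
remaining = [path for (path,) in conn.execute("SELECT path FROM oc_filecache ORDER BY fileid")]
print(f"deleted={deleted} backed_up={backed_up} remaining={remaining}")
```

Keeping the deleted rows in a side table makes it possible to inspect them later, for example to look for a pattern in which clients or paths produce them.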

I think some bug is creating the wrong entries (those missing the last `/`) in `oc_filecache`.

The server runs Ubuntu Server 16.04 LTS with its stock packages (PHP 7.0 and so on) in a Proxmox VM with up to 8 GB of RAM; no other service is installed on this VM.


I'll tell you tomorrow.


So I think 12.0.0 had a small bug that shows up under heavy connection load (not under normal personal use).
