I am running Nextcloud Hub 3 (25.0.8) on Ubuntu 20.04. My cron jobs are set up correctly but don’t seem to actually do anything.

I can see from the system log that the cron job starts every 5 minutes. However, it doesn’t do anything, and there is nothing in the log file I created for it either.

The cron job looks like this:

php -f --define apc.enable_cli=1 /var/www/clients/client0/web2/web/cron.php
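For comparison, here is a minimal sketch of the same call with `--define` placed before `-f`. PHP's `-f` option takes the argument immediately after it as the script path, so in the entry above PHP may be treating `--define` as the file name. The path is the one from this post; `PHP_BIN` is a hypothetical override added only for illustration.

```shell
#!/bin/sh
# Sketch: invoke Nextcloud's cron.php with the PHP option placed before -f.
# NC_DIR is the install path from this post; PHP_BIN is a hypothetical
# override (e.g. PHP_BIN=php7.4) added for illustration.
NC_DIR="${NC_DIR:-/var/www/clients/client0/web2/web}"
PHP_BIN="${PHP_BIN:-php}"

run_nc_cron() {
    # "-f" consumes the very next argument as the script path,
    # so any --define options have to come before it.
    "$PHP_BIN" --define apc.enable_cli=1 -f "$NC_DIR/cron.php"
}
```

The equivalent line in the web2 user's crontab would be `*/5 * * * * php --define apc.enable_cli=1 -f /var/www/clients/client0/web2/web/cron.php`.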

I then tried to run files:scan-app-data manually, like so:

sudo -u web2 php --define apc.enable_cli=1 occ files:scan-app-data

This doesn’t return anything either. The cursor just keeps blinking forever.

The nextcloud cron page is telling me:

Last background job execution ran 1 hour ago. Something seems wrong.

Any advice on how to fix this?

Hi.

I assume you are running php-fpm, since you need to add this to your CLI command:

--define apc.enable_cli=1

Can you please try:

sudo -u web2 php8.1 --define apc.enable_cli=1 /var/www/clients/client0/web2/web/occ files:scan-app-data

@Kerasit, thank you for your suggestion. I don’t use PHP-FPM; I am using the CLI. I added --define apc.enable_cli=1 for good measure, thinking it wouldn’t hurt. I tried with and without it; no difference.

After running

sudo -u web2 php --define apc.enable_cli=1 /var/www/clients/client0/web2/web/occ files:scan-app-data

I get the same result I got from

sudo -u web2 php --define apc.enable_cli=1 occ files:scan-app-data

Scanning AppData for files → The cursor blinks forever → Nothing happens.

Please run it with the exact PHP binary, e.g. php8.1 or php8.0, whichever one you are running.
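If it is unclear which versioned PHP binaries are installed, a small sketch like this picks the first one found on `PATH`. The candidate list (`php8.1`, `php8.0`, `php7.4`, `php`) is only an example; adjust it to what is actually on the machine.

```shell
#!/bin/sh
# Sketch: print the path of the first PHP binary from a candidate list that
# exists on PATH. The default candidates are examples, not a recommendation.
first_php() {
    [ "$#" -gt 0 ] || set -- php8.1 php8.0 php7.4 php
    for candidate in "$@"; do
        # command -v fails (non-zero status) when the name is not found.
        if path="$(command -v "$candidate" 2>/dev/null)"; then
            printf '%s\n' "$path"
            return 0
        fi
    done
    return 1
}
```

For example: `sudo -u web2 "$(first_php)" --define apc.enable_cli=1 /var/www/clients/client0/web2/web/occ files:scan-app-data`.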

Passing apc.enable_cli as a --define parameter does no harm, so that is fine.

What is the result of

php -v

?

php -v

PHP 7.4.3-4ubuntu2.18 (cli) (built: Feb 23 2023 12:43:23) ( NTS )

Copyright (c) The PHP Group

Zend Engine v3.4.0, Copyright (c) Zend Technologies

with Zend OPcache v7.4.3-4ubuntu2.18, Copyright (c), by Zend Technologies

I ran

sudo -u web2 php7.4 --define apc.enable_cli=1 /var/www/clients/client0/web2/web/occ files:scan-app-data

Same result as before.

What happens if you test occ list?

sudo -u web2 php7.4 --define apc.enable_cli=1 /var/www/clients/client0/web2/web/occ list

Does it print all OCC commands?

Okay.

Do any OCC commands do anything?

update:check for example?

or

db:add-missing-indices

Yes, other OCC commands are working.

update:check gave the correct result: Everything up to date

db:add-missing-indices: I had already run this previously, and it works as intended:

Check indices of the share table.

Check indices of the filecache table.

Check indices of the twofactor_providers table.

Check indices of the login_flow_v2 table.

Check indices of the whats_new table.

Check indices of the cards table.

Check indices of the cards_properties table.

Check indices of the calendarobjects_props table.

Check indices of the schedulingobjects table.

Check indices of the oc_properties table.

Check indices of the oc_jobs table.

Check indices of the oc_direct_edit table.

Check indices of the oc_preferences table.

Check indices of the oc_mounts table.

Done.

Fair.

Any errors, or success, with files:cleanup?

0 orphaned file cache entries deleted

0 orphaned mount entries deleted

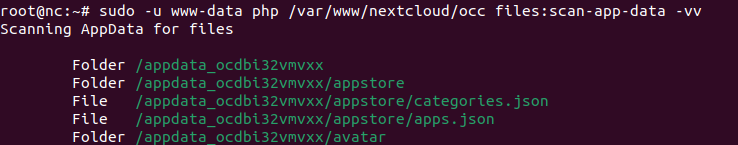

sudo -u web2 php7.4 --define apc.enable_cli=1 /var/www/clients/client0/web2/web/occ files:scan-app-data -vv

The -vv flag makes the command print each file and folder as it is scanned. If it is truly not running, nothing will be displayed.

If it is running, the output looks like this.

Without the -vv parameter, I do not see this, and it only shows me the final table.

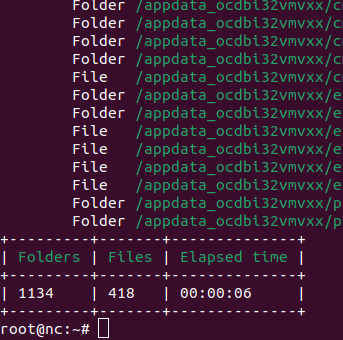

It’s running, but it’s running non-stop.

I had to interrupt it with Ctrl+C.

I guess it would time out at some point if I didn’t stop it? Or can this take very long, like days?

As long as it is not “looping” over the same files, it simply means you have MANY files in there. It just takes patience.
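To check whether the scan is looping rather than just slow, one option is to save the -vv output to a file and look for lines that appear more than once. This is a rough sketch, assuming each scanned path is printed on its own line; the `scan.log` file name is arbitrary.

```shell
#!/bin/sh
# Sketch: print every line that occurs more than once in a saved -vv log,
# as a rough hint that a scan is revisiting the same paths. Table borders or
# summary rows can also repeat, so treat hits as a hint, not proof.
repeated_lines() {
    sort -- "$1" | uniq -d
}
```

For example, run `sudo -u web2 php7.4 --define apc.enable_cli=1 /var/www/clients/client0/web2/web/occ files:scan-app-data -vv > scan.log 2>&1`, then `repeated_lines scan.log` from another terminal while it runs.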

It depends on the I/O speed of the physical drive you are scanning, as well as the resources actually available on the machine.
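To get a rough idea of how much work the scan has ahead of it, you can count the files it has to walk. A minimal sketch; the data directory path is not given in this thread, so the path in the usage line is a placeholder (the AppData folder is the `appdata_<instanceid>` directory inside the Nextcloud data directory).

```shell
#!/bin/sh
# Sketch: count regular files below a directory. Filenames that contain
# newlines would be counted more than once; this is only an estimate.
count_files() {
    find "$1" -type f | wc -l | tr -d ' '
}
```

For example, as a user that can read the data directory: `count_files /path/to/data/appdata_INSTANCEID`, replacing both placeholders with your own values.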

I am running NC in an unprivileged container (that is why I am not concerned about running as root), but with dedicated OpenZFS storage used only for this. I am not sharing the machine itself with anyone, so I have full access to and use of its resources, minus a few lightweight services. I have also set the CLI memory limit (memory_limit) to -1.

So what do I do? Restart the server and let my cron job run in the background for a very long time?

Or do I stop the cron and run this from command line until done? The cron runs every 5 minutes. I guess that wouldn’t do any good if it takes hours to finish.

Yes. Stop cron and run this, as well as files:scan --all, “manually”. Hopefully it will only take hours or days the first time.
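A minimal sketch of that workflow, assuming the user, PHP binary and path used earlier in this thread. The `SUDO`, `NC_USER`, `PHP_BIN` and `NC_DIR` variables are illustrative, not Nextcloud settings. Before running it, disable the `cron.php` entry, e.g. by commenting it out with `crontab -u web2 -e`.

```shell
#!/bin/sh
# Sketch: run both scans back to back as the web user.
# Defaults are taken from this thread; override them via the environment.
SUDO="${SUDO:-sudo}"
NC_USER="${NC_USER:-web2}"
PHP_BIN="${PHP_BIN:-php7.4}"
NC_DIR="${NC_DIR:-/var/www/clients/client0/web2/web}"

occ() {
    # Run occ as the web server user, with APCu enabled for the CLI.
    "$SUDO" -u "$NC_USER" "$PHP_BIN" --define apc.enable_cli=1 "$NC_DIR/occ" "$@"
}

run_scans() {
    # Rescan AppData first, then all users' files; stop on the first failure.
    occ files:scan-app-data && occ files:scan --all
}
```

Running `run_scans > scans.log 2>&1` inside a `tmux` or `screen` session keeps it going if the SSH connection drops. Restore the crontab line once both scans have finished.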

Ok, thank you for your help.
