I have the same issue! I haven’t figured out how to properly integrate LocalAI yet, and I have a few more specific questions.

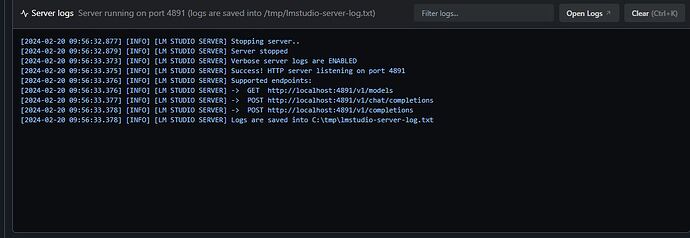

The following API endpoints all work locally:

LM Studio:

```python
# Chat with an intelligent assistant in your terminal
from openai import OpenAI

# Point to the local server
client = OpenAI(base_url="http://localhost:1234/v1", api_key="not-needed")

history = [
    {"role": "system", "content": "You are an intelligent assistant. You always provide well-reasoned answers that are both correct and helpful."},
    {"role": "user", "content": "Hello, introduce yourself to someone opening this program for the first time. Be concise."},
]

while True:
    completion = client.chat.completions.create(
        model="local-model",  # this field is currently unused
        messages=history,
        temperature=0.7,
        stream=True,
    )

    new_message = {"role": "assistant", "content": ""}

    for chunk in completion:
        if chunk.choices[0].delta.content:
            print(chunk.choices[0].delta.content, end="", flush=True)
            new_message["content"] += chunk.choices[0].delta.content

    history.append(new_message)

    # Uncomment to see chat history
    # import json
    # gray_color = "\033[90m"
    # reset_color = "\033[0m"
    # print(f"{gray_color}\n{'-'*20} History dump {'-'*20}\n")
    # print(json.dumps(history, indent=2))
    # print(f"\n{'-'*55}\n{reset_color}")

    print()
    history.append({"role": "user", "content": input("> ")})
```

Ollama:

```shell
curl http://localhost:11434/api/chat -d '{
  "model": "mistral",
  "messages": [
    { "role": "user", "content": "why is the sky blue?" }
  ]
}'
```
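For comparison with the OpenAI-style calls, here is roughly the same Ollama request written with only Python's standard library. It is a sketch, not a reference client: `"stream": False` is an addition (not in the curl above) so that Ollama returns a single JSON object instead of its default stream of JSON lines, and `build_ollama_request` and `chat` are hypothetical helper names.

```python
import json
import urllib.request

# Ollama's native chat endpoint (not the OpenAI-compatible schema)
OLLAMA_CHAT_URL = "http://localhost:11434/api/chat"


def build_ollama_request(prompt: str, model: str = "mistral") -> urllib.request.Request:
    """Build the same POST request as the curl example."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        # Assumption: request one JSON object instead of streamed JSON lines
        "stream": False,
    }
    return urllib.request.Request(
        OLLAMA_CHAT_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def chat(prompt: str) -> str:
    """Send the request and return the assistant's reply text."""
    with urllib.request.urlopen(build_ollama_request(prompt), timeout=120) as resp:
        body = json.load(resp)
    # In non-streaming mode Ollama puts the reply under message.content
    return body["message"]["content"]
```

With Ollama running and the `mistral` model pulled, `print(chat("why is the sky blue?"))` should print the answer.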

LocalAI:

```shell
curl http://localhost:8080/v1/chat/completions -H "Content-Type: application/json" -d '{
  "model": "llava",
  "messages": [{"role": "user", "content": [
    {"type": "text", "text": "What is in the image?"},
    {"type": "image_url", "image_url": {"url": "https://upload.wikimedia.org/wikipedia/commons/thumb/d/dd/Gfp-wisconsin-madison-the-nature-boardwalk.jpg/2560px-Gfp-wisconsin-madison-the-nature-boardwalk.jpg"}}
  ]}],
  "temperature": 0.9
}'
```
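The same LocalAI vision request as a Python sketch using only the standard library, with `temperature` as a top-level field, which is where the OpenAI chat-completions schema places it. `build_vision_payload` and `ask` are hypothetical helpers, and reading the reply from `choices[0].message.content` assumes LocalAI returns the standard OpenAI response shape.

```python
import json
import urllib.request

LOCALAI_CHAT_URL = "http://localhost:8080/v1/chat/completions"
IMAGE_URL = ("https://upload.wikimedia.org/wikipedia/commons/thumb/d/dd/"
             "Gfp-wisconsin-madison-the-nature-boardwalk.jpg/"
             "2560px-Gfp-wisconsin-madison-the-nature-boardwalk.jpg")


def build_vision_payload(question: str, image_url: str,
                         model: str = "llava", temperature: float = 0.9) -> dict:
    """One user message whose content mixes a text part and an image part."""
    return {
        "model": model,
        "messages": [
            {
                "role": "user",
                "content": [
                    {"type": "text", "text": question},
                    {"type": "image_url", "image_url": {"url": image_url}},
                ],
            }
        ],
        # Sampling options belong at the top level, not inside a message
        "temperature": temperature,
    }


def ask(question: str, image_url: str = IMAGE_URL) -> str:
    """POST the payload to LocalAI and return the assistant's text."""
    data = json.dumps(build_vision_payload(question, image_url)).encode("utf-8")
    req = urllib.request.Request(
        LOCALAI_CHAT_URL,
        data=data,
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(req, timeout=300) as resp:
        body = json.load(resp)
    return body["choices"][0]["message"]["content"]
```

With LocalAI serving a `llava` model, `print(ask("What is in the image?"))` should describe the boardwalk photo.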

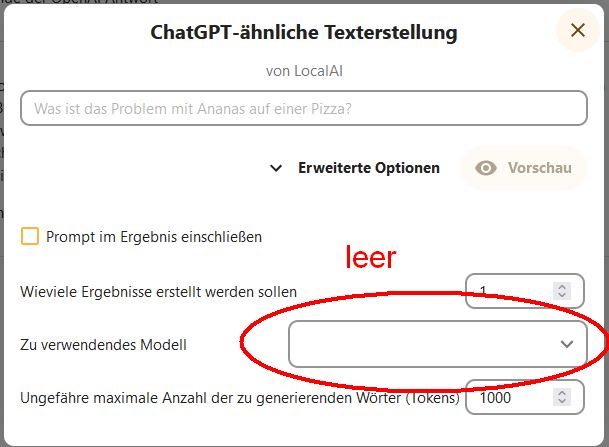

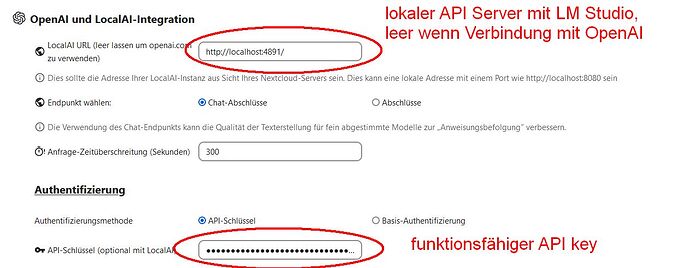

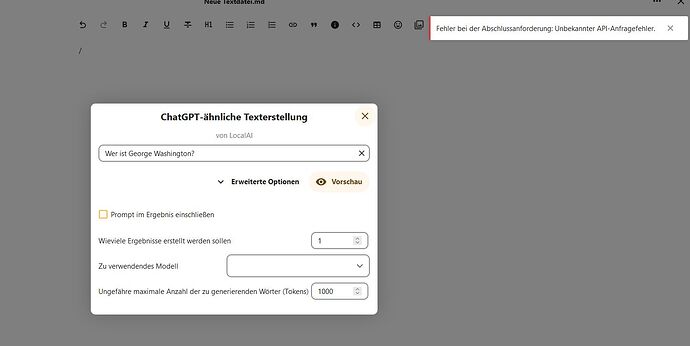

But none of these endpoints enables the AI features in Nextcloud when entered in the "LocalAI URL (leave empty to use openai.com)" field:

- `http://localhost:1234/v1`
- `http://localhost:11434/api/chat`
- `http://localhost:8080/v1/chat/completions`
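These three URLs mix two different kinds of value. `http://localhost:1234/v1` is a base URL, the same form passed to `OpenAI(base_url=...)` in the LM Studio example, where the client appends `/chat/completions` itself. The other two are full endpoint paths. Below is a minimal diagnostic sketch that derives the server root and an OpenAI-style `/v1` base from each URL and asks the server for its models at `/v1/models`. It assumes, without confirming it, that the Nextcloud field wants a server or base URL rather than a full endpoint path. `ENDPOINT_SUFFIXES`, `server_root`, `openai_base_url` and `list_models` are hypothetical helpers. Ollama's native `/api/chat` API does not follow the OpenAI schema, so it may not answer at `/v1/models`.

```python
import json
import urllib.request
from urllib.parse import urlsplit, urlunsplit

# Hypothetical: path suffixes (taken from the URLs above) that name a
# specific endpoint rather than the server itself
ENDPOINT_SUFFIXES = ("/v1/chat/completions", "/api/chat", "/v1")


def server_root(url: str) -> str:
    """Strip one known endpoint suffix, keeping scheme, host, port and any prefix path."""
    parts = urlsplit(url.rstrip("/"))
    path = parts.path
    for suffix in ENDPOINT_SUFFIXES:
        if path.endswith(suffix):
            path = path[: -len(suffix)]
            break
    return urlunsplit((parts.scheme, parts.netloc, path, "", ""))


def openai_base_url(url: str) -> str:
    """Base URL in the form an OpenAI client takes, e.g. OpenAI(base_url=...)."""
    return server_root(url) + "/v1"


def list_models(url: str, timeout: float = 5.0) -> list[str]:
    """GET <base>/models; OpenAI-compatible servers reply with {"data": [{"id": ...}, ...]}."""
    with urllib.request.urlopen(openai_base_url(url) + "/models", timeout=timeout) as resp:
        body = json.load(resp)
    return [model["id"] for model in body.get("data", [])]
```

For example, `openai_base_url("http://localhost:8080/v1/chat/completions")` gives `http://localhost:8080/v1`. On the machine running LocalAI, `list_models` on that URL should return the installed model names, such as `llava`, if the server is up.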

Can someone please explain how to set this up properly?

Must LocalAI be installed on the same machine as the Nextcloud server, or can it run remotely, on the client?
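One relevant detail is that `localhost` always refers to the machine that opens the connection. Assuming the Nextcloud app sends its requests from the server's PHP backend rather than from the browser, `http://localhost:8080` would point at the Nextcloud server itself, not at the client. The minimal sketch below checks this. Run it on the Nextcloud server to see which of the three URLs are reachable from there. `reachable` is a hypothetical helper. It only tests that a TCP connection opens, not that the API behind it works.

```python
import socket
from urllib.parse import urlsplit


def reachable(url: str, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to the URL's host:port succeeds from this machine."""
    parts = urlsplit(url)
    # Fall back to the scheme's default port when the URL has none
    port = parts.port or (443 if parts.scheme == "https" else 80)
    try:
        with socket.create_connection((parts.hostname, port), timeout=timeout):
            return True
    except OSError:
        # Refused, timed out, or host not resolvable
        return False


URLS = (
    "http://localhost:1234/v1",
    "http://localhost:11434/api/chat",
    "http://localhost:8080/v1/chat/completions",
)
```

Calling `[(u, reachable(u)) for u in URLS]` on the server and on the client will show whether the two machines see different results.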