Basically it works out of the box. You only have to check your Nextcloud path and log path, and create a log file for the php occ output.

I put it in /usr/local/bin/ with chmod 755.
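The setup from the first paragraph (install the script, create the log file for the occ output) could look roughly like this. This is a sketch: the helper name prepare_cron_log is hypothetical, and www-data and /var/log/next-cron.log are simply the user and path used in this post, so adjust them to your system.

```shell
#!/usr/bin/env bash
# Hypothetical helper: create the log file for the occ output and make it
# writable by the user the cron job runs as.
prepare_cron_log() {
  local logfile="$1" owner="$2"
  touch "$logfile"
  chown "$owner" "$logfile"
  chmod 640 "$logfile"   # owner may write, group may read
}

# As root:
#   install -m 755 nextcloud-file-sync.sh /usr/local/bin/
#   prepare_cron_log /var/log/next-cron.log www-data
```

The log file has to be owned by (or at least writable for) the web server user, because the cron job runs as that user and cannot create files in /var/log by itself.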

I run it as the Nextcloud user (for me that is www-data) twice per day, at 2:30 and 14:30. You can also run it hourly. This is my cron config:

30 2,14 * * * perl -e 'sleep int(rand(1800))' && /usr/local/bin/nextcloud-file-sync.sh #Nextcloud file sync

Here I added perl -e 'sleep int(rand(1800))' to delay the start by a random time of up to 30 minutes, but since the script now scans external storages only, this is no longer necessary. To run it hourly instead, your cron config could be:

30 * * * * /usr/local/bin/nextcloud-file-sync.sh

Let's go through what it does (valid for commit 44d9d2f):

COMMAND=/var/www/nextcloud/occ ← This is where your Nextcloud occ command is located

OPTIONS="files:scan" ← This is the occ command to run; just leave it as it is

LOCKFILE=/tmp/nextcloud_file_scan ← Lock file, so the script is not executed twice if a run is already ongoing

LOGFILE=/var/www/nextcloud/data/nextcloud.log ← Location of the Nextcloud log file; the script writes some entries to it in Nextcloud format

CRONLOGFILE=/var/log/next-cron.log ← Location of the bash log, for any output the commands generate. AND THEY DO GENERATE OUTPUT…
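How exactly the script uses LOCKFILE is not shown here. A minimal sketch of such a guard, assuming bash, uses the noclobber option so that checking for the lock file and creating it happen in one step. The function names acquire_lock and release_lock are hypothetical.

```shell
#!/usr/bin/env bash
# Settings as listed above (adjust the paths to your installation)
COMMAND=/var/www/nextcloud/occ
OPTIONS="files:scan"
LOCKFILE=/tmp/nextcloud_file_scan
LOGFILE=/var/www/nextcloud/data/nextcloud.log
CRONLOGFILE=/var/log/next-cron.log

# Hypothetical helper: create the lock file, fail if it already exists.
# With noclobber the ">" redirection refuses to overwrite an existing file,
# so the check and the creation happen in a single step.
acquire_lock() {
  ( set -o noclobber; echo "$$" > "$LOCKFILE" ) 2>/dev/null
}

release_lock() {
  rm -f "$LOCKFILE"
}

# Typical use near the top of the script:
#   acquire_lock || exit 0      # another run is still going
#   trap release_lock EXIT      # remove the lock however the script ends
```

A plain "if [ -f ... ]; then exit; fi; touch ..." also works for a cron job that runs twice a day, but it leaves a small window in which two runs could both see no lock file.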

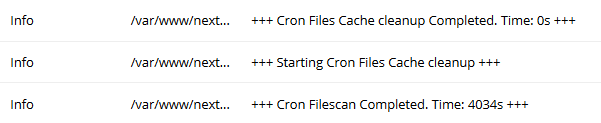

Line 22 writes an entry to the Nextcloud log, which you will then see in the log view of the GUI.

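The exact fields the script writes in line 22 are not reproduced here. As a sketch, assuming the JSON-lines format that current Nextcloud versions use for nextcloud.log, an entry the log view can display could be produced like this. The function name nc_log and the app name nextcloud-file-sync are hypothetical, and only a subset of the usual fields is written.

```shell
#!/usr/bin/env bash
LOGFILE=/var/www/nextcloud/data/nextcloud.log

# Hypothetical helper: print one Nextcloud-style JSON log line.
# $1 = level (0 debug, 1 info, 2 warning, 3 error, 4 fatal)
# $2 = message (assumed to be a single line)
nc_log() {
  local level="$1" msg
  # Escape backslashes and double quotes so the line stays valid JSON
  msg=$(printf '%s' "$2" | sed 's/\\/\\\\/g; s/"/\\"/g')
  printf '{"reqId":"%s","level":%d,"time":"%s","remoteAddr":"","user":"--","app":"%s","method":"","url":"--","message":"%s"}\n' \
    "cron-$$" "$level" "$(date -Iseconds)" "nextcloud-file-sync" "$msg"
}

# Usage:
#   nc_log 1 "External storage scan started" >> "$LOGFILE"
```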

Line 26 is where the job starts. It is left over from an older version of the script and does exactly what you did: scan all users, all shares, and all local storages with all folders. On big installations this takes ages, so I commented it out.

The second option (line 31) is to scan a specific user, but as soon as I had more than one user with external shares, this did not work either. Besides, it still scans the whole file tree (local and remote) of that user, so it is commented out too.
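For reference, the two disabled options presumably boil down to the following occ calls (files:scan --all, and files:scan with a user ID). The wrapper functions are hypothetical; they take the occ invocation as arguments, so putting echo in front gives a dry run that only prints the command.

```shell
#!/usr/bin/env bash
# Option 1 (disabled): scan every user, all storages, all folders
scan_everything() {
  "$@" files:scan --all
}

# Option 2 (disabled): scan the complete file tree of one user,
# local and external storages alike
scan_one_user() {
  local user="$1"
  shift
  "$@" files:scan "$user"
}

# Real run, as the web server user:
#   scan_everything sudo -u www-data php /var/www/nextcloud/occ
#   scan_one_user user1 sudo -u www-data php /var/www/nextcloud/occ
# Dry run:
#   scan_one_user user1 echo php /var/www/nextcloud/occ
```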

Lines 35 to 42 are comments that remind me how I get the users from Nextcloud. The real work happens in line 45: the script generates the exact paths of the external shares to be updated for all users (you can run it yourself and check the output). Here is an example command:

sudo -u www-data php occ files_external:list | awk -F'|' '{print $8"/files"$3}'| tail -n +4 | head -n -1 | awk '{gsub(/ /, "", $0); print}'

And its output:

user1/files/Dropbox-user1

user2/files/Dropbox-user2

user1/files/MagentaCloud

user2/files/MagentaCloud

user1/files/Local-Folder

The script reads those lines one by one and scans each path in line 49.
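The path generation and the loop can be sketched like this, reusing the awk/tail/head pipeline from the example command. This is an illustration under assumptions, not the script itself: external_paths and scan_paths are hypothetical names, and the occ invocation is passed in as arguments so that echo in front gives a dry run.

```shell
#!/usr/bin/env bash
# Turn the table printed by "occ files_external:list" (read from stdin) into
# "user/files/MountPoint" paths: column 8 is Applicable Users, column 3 the
# mount point; tail/head drop the header rows and the closing border.
external_paths() {
  awk -F'|' '{print $8"/files"$3}' | tail -n +4 | head -n -1 | awk '{gsub(/ /, "", $0); print}'
}

# Scan every path read from stdin, one occ call per path.
scan_paths() {
  local path
  while IFS= read -r path; do
    [ -n "$path" ] || continue          # skip empty lines
    # </dev/null keeps occ from consuming the rest of the path list
    "$@" files:scan --path="$path" </dev/null
  done
}

# Usage, as the web server user:
#   OCC=(php /var/www/nextcloud/occ)
#   "${OCC[@]}" files_external:list | external_paths | scan_paths "${OCC[@]}"
```

Because gsub removes every space, a mount point with spaces in its name, or a mount assigned to several users, would produce a wrong path with this pipeline.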

Afterwards, the script writes another entry to the Nextcloud log.

In older Nextcloud versions I had some issues (like the ones described in "OCC files:cleanup - Does it delete the db table entries of the missing files?"), so I added a workaround in lines 60 to 67 that runs the files:cleanup command. I am not sure it is still needed, but it does no harm.
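The workaround in lines 60 to 67 is not reproduced here. A minimal sketch of the same idea, assuming the occ invocation is passed in as arguments and CRONLOGFILE is the setting from above, appends a timestamp and the output of files:cleanup to the cron log. run_cleanup is a hypothetical name.

```shell
#!/usr/bin/env bash
CRONLOGFILE=/var/log/next-cron.log

# Hypothetical helper: run files:cleanup and append a timestamp plus
# everything the command prints (stdout and stderr) to the cron log.
run_cleanup() {
  {
    echo "$(date '+%F %T') files:cleanup"
    "$@" files:cleanup
  } >> "$CRONLOGFILE" 2>&1
}

# Usage, as the web server user:
#   run_cleanup php /var/www/nextcloud/occ
```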