I have mine set up on TrueNAS, but not with the built-in container orchestration in TrueNAS.

I'm guessing you're trying to copy files directly to the SMB share and expecting them to show up in Nextcloud. That won't work. If you're dead set on that approach, you'd need something triggering a file scan in Nextcloud, and that's not going to be easy to work with.

I'll explain how I have mine set up.

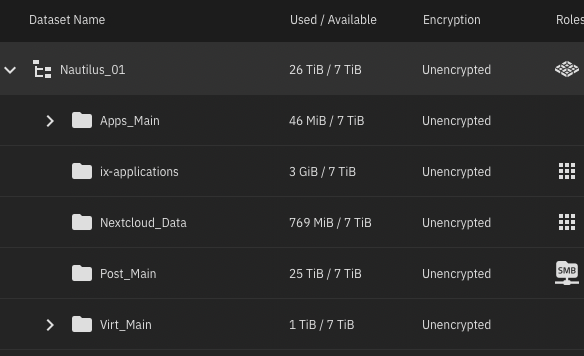

I opted to set up a VM in TrueNAS virtualization and run everything in Docker inside it. At first that was because I didn't like the GUI layer for managing containers; I'm more used to manually configuring the settings for each container. I didn't want to run apps in the TrueNAS containerization UI, and I wanted to limit the overhead of apps on the NAS by controlling the resources available to the VM. I also already had another server handling Docker the same way, and I wanted to get off that bare-metal server and move all of my containers to this VM. I just prefer managing things at a lower level: running everything from a compose file and handling updates and configuration myself from the command line. I haven't done any performance monitoring to back this up, but response times feel much faster with it set up this way. I'm currently disappointed that they're moving some TrueNAS services into those containers anyway as TrueNAS SCALE gets updates, but that's another topic…

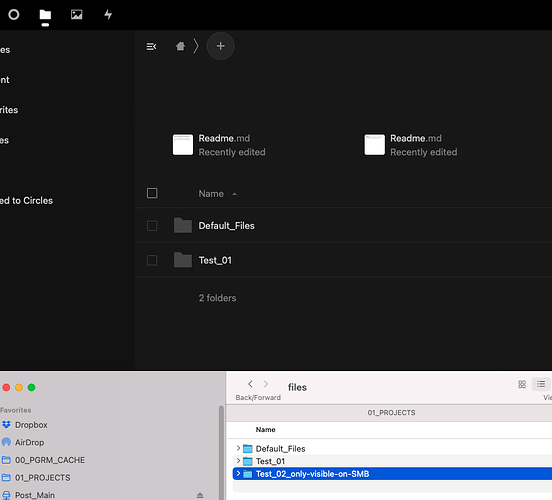

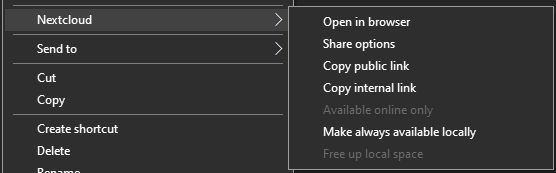

I have an NFS share pointing at a Nextcloud-specific dataset in TrueNAS, and that is my Nextcloud data home. This lets me take full snapshots of just the data and use TrueNAS for what it's made for. (I also have a separate NFS share for my own Nextcloud user that's mounted after the main NFS share, but that's a complicated story and I'll keep this simple.) In the VM, the NFS share is set up in /etc/fstab to mount on startup, and in the Docker compose volume mounts for the Nextcloud container I have it mapped to the location of the NFS mount in the VM. From there I use the Nextcloud desktop sync app on my remote computers and the Nextcloud app on my phone. I also have an SMB share set up directly to that dataset in TrueNAS in case I need to get to it and can't access Nextcloud for some reason. I only use it to verify something or pull down a copy of a file, because I know that modifying those files would leave Nextcloud inconsistent.
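As a rough sketch of the VM side, the mount and volume mapping could look something like the following. Everything named here is hypothetical and only for illustration: the NAS host `truenas.lan`, the export path `/mnt/tank/nextcloud`, the mount point `/mnt/nextcloud-data`, and the helper `nfs_fstab_line`. The container path assumes the official Nextcloud Docker image, whose data directory defaults to `/var/www/html/data`; other images may differ.

```shell
# Sketch only: host, export path, and mount point are made-up examples.
nfs_fstab_line() {
    # args: <nas-host> <export-path> <mount-point>
    # _netdev marks this as a network filesystem so it is mounted
    # only after the network is up at boot.
    printf '%s:%s %s nfs defaults,_netdev 0 0\n' "$1" "$2" "$3"
}

# Append the line to /etc/fstab and mount it (needs root), for example:
#   nfs_fstab_line truenas.lan /mnt/tank/nextcloud /mnt/nextcloud-data | sudo tee -a /etc/fstab
#   sudo mkdir -p /mnt/nextcloud-data && sudo mount -a
#
# Then map that path into the container. Assuming the official image,
# the compose entry under the service's "volumes:" would be:
#   - /mnt/nextcloud-data:/var/www/html/data
```

Calling `nfs_fstab_line truenas.lan /mnt/tank/nextcloud /mnt/nextcloud-data` prints a single fstab line you can review before adding it.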

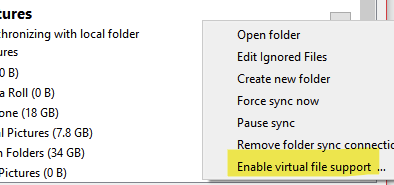

Basically, I feel you're where I was a while ago with my setup, and you're trying to have two cooks in the kitchen. You need one source of truth for the data: either Nextcloud or the SMB shares. If you do need to use the SMB share to add a file, keep that in mind and trigger a file scan. IIRC there's an automatic filesystem scan setting now, but it's never been quick enough for moving a file to the SMB share and then trying to access it in Nextcloud to share it out.

One other reason I'd suggest setting up a VM on TrueNAS for containers is that it keeps them all self-contained from TrueNAS and easier to manage and move if needed. Besides Nextcloud, I have many other containers in use. MySQL, Redis, and Elasticsearch all run in separate containers in the VM, all used by Nextcloud and by some of the other containers, each with its own namespace configured in Redis and Elasticsearch. I found that running MySQL and Redis in separate containers significantly improved the overall responsiveness of the Nextcloud server (again, I have no performance metrics to prove this beyond my own experience and the couple of other users on my Nextcloud saying it's much faster).

If you want to understand more of what I'm talking about with the file scan, research these topics:

file scan command

occ files:scan --all

config.php settings

'filesystem_check_changes' => 1,
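If you do end up dropping files in over SMB, a small shell helper along these lines could trigger the scan afterwards. Treat it as a sketch under assumptions you'd need to adjust: it assumes the official Nextcloud Docker image (where `occ` sits in the container's working directory and the web user is `www-data`) and a container named `nextcloud`. The function name `nc_scan` and the `DRY_RUN` switch are made up for this example.

```shell
# Sketch only: container name and image layout are assumptions.
CONTAINER="${CONTAINER:-nextcloud}"

nc_scan() {
    # With no argument, rescan all users' files.
    # With one argument, limit the scan to that Nextcloud path,
    # e.g. /alice/files/Inbox (hypothetical user and folder).
    if [ -n "$1" ]; then
        set -- files:scan --path="$1"
    else
        set -- files:scan --all
    fi
    # DRY_RUN=1 prints the command instead of running it.
    ${DRY_RUN:+echo} docker exec -u www-data "$CONTAINER" php occ "$@"
}
```

Running `nc_scan` rescans everything, while `nc_scan /alice/files/Inbox` only rescans that one folder, which is usually much quicker on a large data set. Setting `DRY_RUN=1` first lets you check the command before it touches the server.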

(Note: my setup is probably overly complicated for some, and none of this is necessary to run Nextcloud. It's simply what I prefer, and it gives me data consistency and backup images that suit my own needs. The basic answer to your question is: don't use SMB to move files into Nextcloud. Set up a folder on your client PCs that you want to sync with Nextcloud and use the Nextcloud desktop sync app. And if you do use SMB, don't expect files to show up quickly unless you trigger a file scan.)

Hope all that helps!
