<!--

Thanks for reporting issues back to Nextcloud!

Note: This is the **issue tracker of Nextcloud**, please do NOT use this to get answers to your questions or get help for fixing your installation. This is a place to report bugs to developers, after your server has been debugged. You can find help debugging your system on our home user forums: https://help.nextcloud.com or, if you use Nextcloud in a large organization, ask our engineers on https://portal.nextcloud.com. See also https://nextcloud.com/support for support options.

Nextcloud is an open source project backed by Nextcloud GmbH. Most of our volunteers are home users and thus primarily care about issues that affect home users. Our paid engineers prioritize issues of our customers. If you are neither a home user nor a customer, consider paying somebody to fix your issue, do it yourself or become a customer.

Guidelines for submitting issues:

* Please search the existing issues first, it's likely that your issue was already reported or even fixed.

- Go to https://github.com/nextcloud and type any word in the top search/command bar. You probably see something like "We couldn’t find any repositories matching ..." then click "Issues" in the left navigation.

- You can also filter by appending e. g. "state:open" to the search string.

- More info on search syntax within github: https://help.github.com/articles/searching-issues

* This repository https://github.com/nextcloud/server/issues is *only* for issues within the Nextcloud Server code. This also includes the apps: files, encryption, external storage, sharing, deleted files, versions, LDAP, and WebDAV Auth

* SECURITY: Report any potential security bug to us via our HackerOne page (https://hackerone.com/nextcloud) following our security policy (https://nextcloud.com/security/) instead of filing an issue in our bug tracker.

* The issues in other components should be reported in their respective repositories: You will find them in our GitHub Organization (https://github.com/nextcloud/)

* You can also use the Issue Template app to prefill most of the required information: https://apps.nextcloud.com/apps/issuetemplate

-->

### How to use GitHub

* Please use the 👍 [reaction](https://blog.github.com/2016-03-10-add-reactions-to-pull-requests-issues-and-comments/) to show that you are affected by the same issue.

* Please don't comment if you have no relevant information to add. It's just extra noise for everyone subscribed to this issue.

* Subscribe to receive notifications on status change and new comments.

### Steps to reproduce

1. Set up a cron job for `cron.php`.

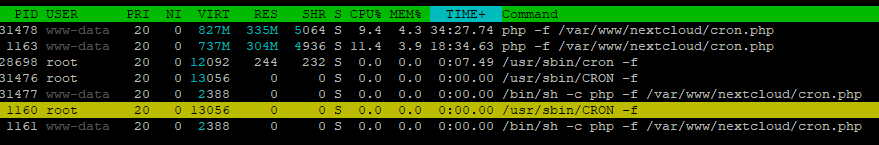

2. After a while (in my case between 24 and 48 hours), the CPU usage of the cron run becomes very high.

3. The logs fill up with `Trying to access array offset on value of type null at /var/www/html/lib/private/Files/Cache/Scanner.php#418` errors.

### Expected behaviour

No excessive CPU usage and no errors in the log file.

### Actual behaviour

After 5 days, this error has been logged about 250k times. The first entry was logged under version `19.0.2.2`.

The errors start appearing shortly after I started the cron job.

The CPU usage also stays constantly high.

Maybe it has something to do with my soft links (symlinks). They worked fine until I added the cron job; since then, the linked folders no longer show up in Nextcloud.
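One way to test the symlink hypothesis is to look for links that point back at their own parent directory or one of its ancestors: a scanner that follows such a link keeps descending into the same folder, which would be consistent with the ever-growing `Filme////…` path in the logs below. The following is only a minimal Python sketch under that assumption; the function name `find_looping_symlinks` is hypothetical and not part of Nextcloud.

```python
import os
from pathlib import Path


def find_looping_symlinks(root: str) -> list[tuple[str, str]]:
    """Return (link, target) pairs for symlinks under `root` whose target is
    the link's own parent directory or one of its ancestors.

    Following such a link recursively never reaches a leaf directory.
    """
    loops = []
    # os.walk defaults to followlinks=False, so the walk itself cannot loop.
    for dirpath, dirnames, filenames in os.walk(root):
        for name in dirnames + filenames:
            link = Path(dirpath) / name
            if not link.is_symlink():
                continue
            parent = link.parent.resolve()
            try:
                target = link.resolve()
            except (RuntimeError, OSError):
                # Older Pythons raise here for self-referencing links.
                loops.append((str(link), "<unresolvable loop>"))
                continue
            # The link loops if its target contains the link's own location.
            if target == parent or target in parent.parents:
                loops.append((str(link), str(target)))
    return loops
```

For example, `find_looping_symlinks("/var/www/html/data/andre/files")` run inside the container (the path is taken from the log excerpt) would list any such links; an empty result would argue against this particular explanation.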

```
// first time in logs
{"reqId":"5782jW0p9rvmyXhE9lVd","level":3,"time":"2020-09-09T19:39:15+00:00","remoteAddr":"","user":"--","app":"PHP","method":"","url":"--","message":"Trying to access array offset on value of type null at /var/www/html/lib/private/Files/Cache/Scanner.php#418","userAgent":"--","version":"19.0.2.2"}

// last time in logs
{"reqId":"z1DPk2vmvkTALOrGtv1S","level":3,"time":"2020-09-13T22:40:58+00:00","remoteAddr":"","user":"--","app":"PHP","method":"","url":"--","message":"Trying to access array offset on value of type null at /var/www/html/lib/private/Files/Cache/Scanner.php#418","userAgent":"--","version":"19.0.3.1"}
```
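Since `nextcloud.log` stores one JSON object per line, the error count and the versions it appeared under can be tallied directly from the file. This is a hedged Python sketch; `count_messages` is a hypothetical helper, and the log path in the usage note assumes the default Docker data directory (`/var/www/html/data`), which may differ on other setups.

```python
import json
from collections import Counter


def count_messages(lines, needle):
    """Count log entries whose "message" contains `needle`, grouped by the
    entry's "version" field. Assumes one JSON object per line."""
    counts = Counter()
    for line in lines:
        line = line.strip()
        if not line:
            continue
        try:
            entry = json.loads(line)
        except json.JSONDecodeError:
            continue  # skip wrapped or truncated lines
        if not isinstance(entry, dict):
            continue
        if needle in entry.get("message", ""):
            counts[entry.get("version", "unknown")] += 1
    return counts
```

Usage, assuming the log lives at the default path: `with open("/var/www/html/data/nextcloud.log") as f: print(count_messages(f, "Scanner.php#418"))`.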

### Server configuration

**Operating system:** CentOS on the host; Nextcloud runs on Alpine inside Docker

**Web server:** nginx

**Database:** postgres

**PHP version:** 7.4.10

**Nextcloud version:** 19.0.2 and 19.0.3 (both affected)

**Updated from an older Nextcloud/ownCloud or fresh install:** no

**Where did you install Nextcloud from:** Docker, see https://github.com/nextcloud/docker

**Signing status:**

<details>

<summary>Signing status</summary>

```
No errors have been found.
```

</details>

**List of activated apps:**

<details>

<summary>App list</summary>

```
Enabled:
- accessibility: 1.5.0
- activity: 2.12.0
- audioplayer: 2.11.2
- bruteforcesettings: 2.0.1
- cloud_federation_api: 1.2.0
- comments: 1.9.0
- contactsinteraction: 1.0.0
- dav: 1.15.0
- federatedfilesharing: 1.9.0
- federation: 1.9.0
- files: 1.14.0
- files_pdfviewer: 1.8.0
- files_rightclick: 0.16.0
- files_sharing: 1.11.0
- files_trashbin: 1.9.0
- files_versions: 1.12.0
- files_videoplayer: 1.8.0
- firstrunwizard: 2.8.0
- forms: 2.0.4
- logreader: 2.4.0
- lookup_server_connector: 1.7.0
- nextcloud_announcements: 1.8.0
- notifications: 2.7.0
- oauth2: 1.7.0
- occweb: 0.0.7
- password_policy: 1.9.1
- photos: 1.1.0
- privacy: 1.3.0
- provisioning_api: 1.9.0
- recommendations: 0.7.0
- richdocuments: 3.7.4
- serverinfo: 1.9.0
- settings: 1.1.0
- sharebymail: 1.9.0
- support: 1.2.1
- survey_client: 1.7.0
- systemtags: 1.9.0
- text: 3.0.1
- theming: 1.10.0
- twofactor_backupcodes: 1.8.0
- twofactor_webauthn: 0.2.5
- updatenotification: 1.9.0
- viewer: 1.3.0
- workflowengine: 2.1.0
Disabled:
- admin_audit
- encryption
- files_external
- user_ldap
```

</details>

**Nextcloud configuration:**

<details>

<summary>Config report</summary>

```
{
    "system": {
        "htaccess.RewriteBase": "\/",
        "memcache.local": "\\OC\\Memcache\\APCu",
        "apps_paths": [
            {
                "path": "\/var\/www\/html\/apps",
                "url": "\/apps",
                "writable": false
            },
            {
                "path": "\/var\/www\/html\/custom_apps",
                "url": "\/custom_apps",
                "writable": true
            }
        ],
        "instanceid": "***REMOVED SENSITIVE VALUE***",
        "passwordsalt": "***REMOVED SENSITIVE VALUE***",
        "secret": "***REMOVED SENSITIVE VALUE***",
        "trusted_domains": [
            "next.akop.online"
        ],
        "datadirectory": "***REMOVED SENSITIVE VALUE***",
        "dbtype": "pgsql",
        "version": "19.0.3.1",
        "overwrite.cli.url": "https:\/\/next.akop.online",
        "overwriteprotocol": "https",
        "overwritehost": "next.akop.online",
        "overwriteport": "443",
        "dbname": "***REMOVED SENSITIVE VALUE***",
        "dbhost": "***REMOVED SENSITIVE VALUE***",
        "dbport": "54321",
        "dbtableprefix": "oc_",
        "dbuser": "***REMOVED SENSITIVE VALUE***",
        "dbpassword": "***REMOVED SENSITIVE VALUE***",
        "installed": true,
        "maintenance": false,
        "theme": "",
        "loglevel": 2,
        "mail_smtpmode": "smtp",
        "mail_smtpsecure": "ssl",
        "mail_sendmailmode": "smtp",
        "mail_from_address": "***REMOVED SENSITIVE VALUE***",
        "mail_domain": "***REMOVED SENSITIVE VALUE***",
        "mail_smtpauth": 1,
        "mail_smtpauthtype": "LOGIN",
        "mail_smtphost": "***REMOVED SENSITIVE VALUE***",
        "mail_smtpport": "465",
        "mail_smtpname": "***REMOVED SENSITIVE VALUE***",
        "mail_smtppassword": "***REMOVED SENSITIVE VALUE***",
        "trusted_proxies": "***REMOVED SENSITIVE VALUE***",
        "app_install_overwrite": [
            "occweb",
            "twofactor_webauthn"
        ]
    },
    "apps": {
        "accessibility": {
            "types": "",
            "enabled": "yes",
            "installed_version": "1.5.0"
        },
        "activity": {
            "types": "filesystem",
            "enabled": "yes",
            "installed_version": "2.12.0",
            "enable_email": "no"
        },
        "audioplayer": {
            "installed_version": "2.11.2",
            "types": "filesystem",
            "enabled": "yes"
        },
        "backgroundjob": {
            "lastjob": "2142"
        },
        "bruteforcesettings": {
            "types": "",
            "enabled": "yes",
            "installed_version": "2.0.1"
        },
        "calendar": {
            "installed_version": "2.0.3",
            "types": "",
            "enabled": "no"
        },
        "cloud_federation_api": {
            "types": "filesystem",
            "enabled": "yes",
            "installed_version": "1.2.0"
        },
        "comments": {
            "types": "logging",
            "enabled": "yes",
            "installed_version": "1.9.0"
        },
        "contacts": {
            "installed_version": "3.3.0",
            "types": "",
            "enabled": "no"
        },
        "contactsinteraction": {
            "installed_version": "1.0.0",
            "types": "dav",
            "enabled": "yes"
        },
        "core": {
            "installedat": "1565451319.7764",
            "vendor": "nextcloud",
            "public_webdav": "dav\/appinfo\/v1\/publicwebdav.php",
            "public_files": "files_sharing\/public.php",
            "shareapi_enforce_links_password": "no",
            "installed.bundles": "[\"CoreBundle\"]",
            "scss.variables": "db81cddf52fdb3c8ca1e4c859e214124",
            "oc.integritycheck.checker": "[]",
            "updater.secret.created": "1570874709",
            "enterpriseLogoChecked": "yes",
            "lastupdatedat": "1600070101",
            "lastupdateResult": "[]",
            "shareapi_enable_link_password_by_default": "yes",
            "backgroundjobs_mode": "cron",
            "theming.variables": "975e48529bcf117c93aebc6e531b028a",
            "lastcron": "1600080300"
        },
        "dav": {
            "types": "filesystem",
            "enabled": "yes",
            "regeneratedBirthdayCalendarsForYearFix": "yes",
            "buildCalendarSearchIndex": "yes",
            "buildCalendarReminderIndex": "yes",
            "installed_version": "1.15.0",
            "chunks_migrated": "1"
        },
        "deck": {
            "installed_version": "1.0.5",
            "enabled": "no",
            "types": "dav"
        },
        "federatedfilesharing": {
            "types": "",
            "enabled": "yes",
            "installed_version": "1.9.0"
        },
        "federation": {
            "types": "authentication",
            "enabled": "yes",
            "installed_version": "1.9.0"
        },
        "files": {
            "types": "filesystem",
            "enabled": "yes",
            "cronjob_scan_files": "500",
            "installed_version": "1.14.0"
        },
        "files_pdfviewer": {
            "types": "",
            "enabled": "yes",
            "installed_version": "1.8.0"
        },
        "files_rightclick": {
            "types": "",
            "enabled": "yes",
            "installed_version": "0.16.0"
        },
        "files_sharing": {
            "types": "filesystem",
            "enabled": "yes",
            "installed_version": "1.11.0"
        },
        "files_texteditor": {
            "installed_version": "2.8.0",
            "types": "",
            "enabled": "no"
        },
        "files_trashbin": {
            "types": "filesystem,dav",
            "enabled": "yes",
            "installed_version": "1.9.0"
        },
        "files_versions": {
            "types": "filesystem,dav",
            "enabled": "yes",
            "installed_version": "1.12.0"
        },
        "files_videoplayer": {
            "types": "",
            "enabled": "yes",
            "installed_version": "1.8.0"
        },
        "firstrunwizard": {
            "types": "logging",
            "enabled": "yes",
            "installed_version": "2.8.0"
        },
        "forms": {
            "installed_version": "2.0.4",
            "types": "",
            "enabled": "yes"
        },
        "gallery": {
            "types": "",
            "installed_version": "18.4.0",
            "enabled": "no"
        },
        "logreader": {
            "types": "",
            "enabled": "yes",
            "installed_version": "2.4.0",
            "levels": "01111",
            "relativedates": "1"
        },
        "lookup_server_connector": {
            "types": "authentication",
            "enabled": "yes",
            "installed_version": "1.7.0"
        },
        "mail": {
            "installed_version": "1.4.1",
            "enabled": "no",
            "types": ""
        },
        "nextcloud_announcements": {
            "types": "logging",
            "enabled": "yes",
            "installed_version": "1.8.0",
            "pub_date": "Thu, 24 Oct 2019 00:00:00 +0200"
        },
        "notifications": {
            "types": "logging",
            "enabled": "yes",
            "installed_version": "2.7.0"
        },
        "oauth2": {
            "types": "authentication",
            "enabled": "yes",
            "installed_version": "1.7.0"
        },
        "occweb": {
            "enabled": "yes",
            "types": "",
            "installed_version": "0.0.7"
        },
        "password_policy": {
            "enabled": "yes",
            "types": "authentication",
            "installed_version": "1.9.1"
        },
        "photos": {
            "installed_version": "1.1.0",
            "types": "",
            "enabled": "yes"
        },
        "privacy": {
            "types": "",
            "enabled": "yes",
            "installed_version": "1.3.0"
        },
        "provisioning_api": {
            "types": "prevent_group_restriction",
            "enabled": "yes",
            "installed_version": "1.9.0"
        },
        "recommendations": {
            "types": "",
            "enabled": "yes",
            "installed_version": "0.7.0"
        },
        "richdocuments": {
            "installed_version": "3.7.4",
            "types": "filesystem,dav,prevent_group_restriction",
            "enabled": "yes",
            "disable_certificate_verification": "yes",
            "wopi_url": "nextcloud-collabora-code:9980",
            "public_wopi_url": "https:\/\/colla.akop.online"
        },
        "richdocumentscode": {
            "installed_version": "4.2.602",
            "types": "",
            "enabled": "no"
        },
        "serverinfo": {
            "types": "",
            "enabled": "yes",
            "installed_version": "1.9.0"
        },
        "settings": {
            "installed_version": "1.1.0",
            "types": "",
            "enabled": "yes"
        },
        "sharebymail": {
            "types": "filesystem",
            "enabled": "yes",
            "installed_version": "1.9.0"
        },
        "spreed": {
            "installed_version": "9.0.1",
            "project_access_invalidated": "1",
            "enabled": "no",
            "stun_servers": "***REMOVED SENSITIVE VALUE***",
            "types": "prevent_group_restriction",
            "signaling_servers": "***REMOVED SENSITIVE VALUE***",
            "turn_servers": "***REMOVED SENSITIVE VALUE***"
        },
        "support": {
            "enabled": "yes",
            "types": "session",
            "installed_version": "1.2.1",
            "SwitchUpdaterServerHasRun": "yes"
        },
        "survey_client": {
            "types": "",
            "enabled": "yes",
            "installed_version": "1.7.0",
            "files_sharing": "no",
            "encryption": "no"
        },
        "systemtags": {
            "types": "logging",
            "enabled": "yes",
            "installed_version": "1.9.0"
        },
        "text": {
            "enabled": "yes",
            "types": "dav",
            "installed_version": "3.0.1"
        },
        "theming": {
            "types": "logging",
            "enabled": "yes",
            "installed_version": "1.10.0",
            "name": "akops's Nextcloud",
            "url": "***REMOVED SENSITIVE VALUE***",
            "slogan": "***REMOVED SENSITIVE VALUE***",
            "backgroundMime": "image\/jpeg",
            "color": "#00628c",
            "cachebuster": "19"
        },
        "twofactor_backupcodes": {
            "types": "",
            "enabled": "yes",
            "installed_version": "1.8.0"
        },
        "twofactor_webauthn": {
            "installed_version": "0.2.5",
            "enabled": "yes",
            "types": ""
        },
        "updatenotification": {
            "types": "",
            "enabled": "yes",
            "update_check_errors": "0",
            "files_rightclick": "0.15.1",
            "installed_version": "1.9.0",
            "core": "19.0.3.1"
        },
        "viewer": {
            "types": "",
            "enabled": "yes",
            "installed_version": "1.3.0"
        },
        "workflowengine": {
            "types": "filesystem",
            "enabled": "yes",
            "installed_version": "2.1.0"
        }
    }
}
```

</details>

**Are you using external storage, if yes which one:** a Kubernetes PVC (PersistentVolumeClaim)

**Are you using encryption:** no

### Logs

#### Nextcloud log (data/nextcloud.log)

<details>

<summary>Nextcloud log (last 100 lines)</summary>

```

{"reqId":"z1DPk2vmvkTALOrGtv1S","level":3,"time":"2020-09-13T22:40:02+00:00","remoteAddr":"","user":"--","app":"PHP","method":"","url":"--","message":"Trying to access array offset on value of type null at /var/www/html/lib/private/Files/Cache/Scanner.php#418","userAgent":"--","version":"19.0.3.1"}

{"reqId":"z1DPk2vmvkTALOrGtv1S","level":3,"time":"2020-09-13T22:40:02+00:00","remoteAddr":"","user":"--","app":"PHP","method":"","url":"--","message":"Trying to access array offset on value of type null at /var/www/html/lib/private/Files/Cache/Scanner.php#418","userAgent":"--","version":"19.0.3.1"}

{"reqId":"z1DPk2vmvkTALOrGtv1S","level":3,"time":"2020-09-13T22:40:03+00:00","remoteAddr":"","user":"--","app":"PHP","method":"","url":"--","message":"Trying to access array offset on value of type null at /var/www/html/lib/private/Files/Cache/Scanner.php#418","userAgent":"--","version":"19.0.3.1"}

{"reqId":"z1DPk2vmvkTALOrGtv1S","level":3,"time":"2020-09-13T22:40:03+00:00","remoteAddr":"","user":"--","app":"PHP","method":"","url":"--","message":"opendir(/var/www/html/data/andre/files/Sonstiges/Filme///////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////
////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////
/////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////Filme von Peter): failed to open dir: Filename too long at /var/www/html/lib/private/Files/Storage/Local.php#131","userAgent":"--","version":"19.0.3.1"}

{"reqId":"z1DPk2vmvkTALOrGtv1S","level":3,"time":"2020-09-13T22:40:05+00:00","remoteAddr":"","user":"--","app":"PHP","method":"","url":"--","message":"Trying to access array offset on value of type null at /var/www/html/lib/private/Files/Cache/Scanner.php#418","userAgent":"--","version":"19.0.3.1"}

{"reqId":"z1DPk2vmvkTALOrGtv1S","level":3,"time":"2020-09-13T22:40:05+00:00","remoteAddr":"","user":"--","app":"PHP","method":"","url":"--","message":"Trying to access array offset on value of type null at /var/www/html/lib/private/Files/Cache/Scanner.php#418","userAgent":"--","version":"19.0.3.1"}

{"reqId":"z1DPk2vmvkTALOrGtv1S","level":3,"time":"2020-09-13T22:40:05+00:00","remoteAddr":"","user":"--","app":"PHP","method":"","url":"--","message":"Trying to access array offset on value of type null at /var/www/html/lib/private/Files/Cache/Scanner.php#418","userAgent":"--","version":"19.0.3.1"}

{"reqId":"z1DPk2vmvkTALOrGtv1S","level":3,"time":"2020-09-13T22:40:06+00:00","remoteAddr":"","user":"--","app":"PHP","method":"","url":"--","message":"Trying to access array offset on value of type null at /var/www/html/lib/private/Files/Cache/Scanner.php#418","userAgent":"--","version":"19.0.3.1"}

{"reqId":"z1DPk2vmvkTALOrGtv1S","level":3,"time":"2020-09-13T22:40:06+00:00","remoteAddr":"","user":"--","app":"PHP","method":"","url":"--","message":"Trying to access array offset on value of type null at /var/www/html/lib/private/Files/Cache/Scanner.php#418","userAgent":"--","version":"19.0.3.1"}

{"reqId":"z1DPk2vmvkTALOrGtv1S","level":3,"time":"2020-09-13T22:40:07+00:00","remoteAddr":"","user":"--","app":"PHP","method":"","url":"--","message":"Trying to access array offset on value of type null at /var/www/html/lib/private/Files/Cache/Scanner.php#418","userAgent":"--","version":"19.0.3.1"}

{"reqId":"z1DPk2vmvkTALOrGtv1S","level":3,"time":"2020-09-13T22:40:07+00:00","remoteAddr":"","user":"--","app":"PHP","method":"","url":"--","message":"Trying to access array offset on value of type null at /var/www/html/lib/private/Files/Cache/Scanner.php#418","userAgent":"--","version":"19.0.3.1"}

{"reqId":"z1DPk2vmvkTALOrGtv1S","level":3,"time":"2020-09-13T22:40:07+00:00","remoteAddr":"","user":"--","app":"PHP","method":"","url":"--","message":"Trying to access array offset on value of type null at /var/www/html/lib/private/Files/Cache/Scanner.php#418","userAgent":"--","version":"19.0.3.1"}

{"reqId":"z1DPk2vmvkTALOrGtv1S","level":3,"time":"2020-09-13T22:40:08+00:00","remoteAddr":"","user":"--","app":"PHP","method":"","url":"--","message":"opendir(/var/www/html/data/andre/files/Sonstiges/Filme///////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////
////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////
//////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////Filme von Peter): failed to open dir: Filename too long at /var/www/html/lib/private/Files/Storage/Local.php#131","userAgent":"--","version":"19.0.3.1"}

{"reqId":"z1DPk2vmvkTALOrGtv1S","level":3,"time":"2020-09-13T22:40:11+00:00","remoteAddr":"","user":"--","app":"PHP","method":"","url":"--","message":"Trying to access array offset on value of type null at /var/www/html/lib/private/Files/Cache/Scanner.php#418","userAgent":"--","version":"19.0.3.1"}

{"reqId":"z1DPk2vmvkTALOrGtv1S","level":3,"time":"2020-09-13T22:40:11+00:00","remoteAddr":"","user":"--","app":"PHP","method":"","url":"--","message":"Trying to access array offset on value of type null at /var/www/html/lib/private/Files/Cache/Scanner.php#418","userAgent":"--","version":"19.0.3.1"}

{"reqId":"z1DPk2vmvkTALOrGtv1S","level":3,"time":"2020-09-13T22:40:12+00:00","remoteAddr":"","user":"--","app":"PHP","method":"","url":"--","message":"Trying to access array offset on value of type null at /var/www/html/lib/private/Files/Cache/Scanner.php#418","userAgent":"--","version":"19.0.3.1"}

{"reqId":"z1DPk2vmvkTALOrGtv1S","level":3,"time":"2020-09-13T22:40:12+00:00","remoteAddr":"","user":"--","app":"PHP","method":"","url":"--","message":"Trying to access array offset on value of type null at /var/www/html/lib/private/Files/Cache/Scanner.php#418","userAgent":"--","version":"19.0.3.1"}

{"reqId":"z1DPk2vmvkTALOrGtv1S","level":3,"time":"2020-09-13T22:40:13+00:00","remoteAddr":"","user":"--","app":"PHP","method":"","url":"--","message":"Trying to access array offset on value of type null at /var/www/html/lib/private/Files/Cache/Scanner.php#418","userAgent":"--","version":"19.0.3.1"}

{"reqId":"z1DPk2vmvkTALOrGtv1S","level":3,"time":"2020-09-13T22:40:13+00:00","remoteAddr":"","user":"--","app":"PHP","method":"","url":"--","message":"Trying to access array offset on value of type null at /var/www/html/lib/private/Files/Cache/Scanner.php#418","userAgent":"--","version":"19.0.3.1"}

{"reqId":"z1DPk2vmvkTALOrGtv1S","level":3,"time":"2020-09-13T22:40:14+00:00","remoteAddr":"","user":"--","app":"PHP","method":"","url":"--","message":"Trying to access array offset on value of type null at /var/www/html/lib/private/Files/Cache/Scanner.php#418","userAgent":"--","version":"19.0.3.1"}

{"reqId":"z1DPk2vmvkTALOrGtv1S","level":3,"time":"2020-09-13T22:40:14+00:00","remoteAddr":"","user":"--","app":"PHP","method":"","url":"--","message":"Trying to access array offset on value of type null at /var/www/html/lib/private/Files/Cache/Scanner.php#418","userAgent":"--","version":"19.0.3.1"}

{"reqId":"z1DPk2vmvkTALOrGtv1S","level":3,"time":"2020-09-13T22:40:15+00:00","remoteAddr":"","user":"--","app":"PHP","method":"","url":"--","message":"opendir(/var/www/html/data/andre/files/Sonstiges/Filme///////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////
////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////
///////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////Filme von Peter): failed to open dir: Filename too long at /var/www/html/lib/private/Files/Storage/Local.php#131","userAgent":"--","version":"19.0.3.1"}

{"reqId":"z1DPk2vmvkTALOrGtv1S","level":3,"time":"2020-09-13T22:40:17+00:00","remoteAddr":"","user":"--","app":"PHP","method":"","url":"--","message":"Trying to access array offset on value of type null at /var/www/html/lib/private/Files/Cache/Scanner.php#418","userAgent":"--","version":"19.0.3.1"}

{"reqId":"z1DPk2vmvkTALOrGtv1S","level":3,"time":"2020-09-13T22:40:18+00:00","remoteAddr":"","user":"--","app":"PHP","method":"","url":"--","message":"Trying to access array offset on value of type null at /var/www/html/lib/private/Files/Cache/Scanner.php#418","userAgent":"--","version":"19.0.3.1"}

{"reqId":"z1DPk2vmvkTALOrGtv1S","level":3,"time":"2020-09-13T22:40:18+00:00","remoteAddr":"","user":"--","app":"PHP","method":"","url":"--","message":"Trying to access array offset on value of type null at /var/www/html/lib/private/Files/Cache/Scanner.php#418","userAgent":"--","version":"19.0.3.1"}

{"reqId":"z1DPk2vmvkTALOrGtv1S","level":3,"time":"2020-09-13T22:40:18+00:00","remoteAddr":"","user":"--","app":"PHP","method":"","url":"--","message":"Trying to access array offset on value of type null at /var/www/html/lib/private/Files/Cache/Scanner.php#418","userAgent":"--","version":"19.0.3.1"}

{"reqId":"z1DPk2vmvkTALOrGtv1S","level":3,"time":"2020-09-13T22:40:19+00:00","remoteAddr":"","user":"--","app":"PHP","method":"","url":"--","message":"Trying to access array offset on value of type null at /var/www/html/lib/private/Files/Cache/Scanner.php#418","userAgent":"--","version":"19.0.3.1"}

{"reqId":"z1DPk2vmvkTALOrGtv1S","level":3,"time":"2020-09-13T22:40:20+00:00","remoteAddr":"","user":"--","app":"PHP","method":"","url":"--","message":"Trying to access array offset on value of type null at /var/www/html/lib/private/Files/Cache/Scanner.php#418","userAgent":"--","version":"19.0.3.1"}

{"reqId":"z1DPk2vmvkTALOrGtv1S","level":3,"time":"2020-09-13T22:40:20+00:00","remoteAddr":"","user":"--","app":"PHP","method":"","url":"--","message":"Trying to access array offset on value of type null at /var/www/html/lib/private/Files/Cache/Scanner.php#418","userAgent":"--","version":"19.0.3.1"}

{"reqId":"z1DPk2vmvkTALOrGtv1S","level":3,"time":"2020-09-13T22:40:20+00:00","remoteAddr":"","user":"--","app":"PHP","method":"","url":"--","message":"Trying to access array offset on value of type null at /var/www/html/lib/private/Files/Cache/Scanner.php#418","userAgent":"--","version":"19.0.3.1"}

{"reqId":"z1DPk2vmvkTALOrGtv1S","level":3,"time":"2020-09-13T22:40:21+00:00","remoteAddr":"","user":"--","app":"PHP","method":"","url":"--","message":"opendir(/var/www/html/data/andre/files/Sonstiges/Filme///////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////
////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////
////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////Filme von Peter): failed to open dir: Filename too long at /var/www/html/lib/private/Files/Storage/Local.php#131","userAgent":"--","version":"19.0.3.1"}

{"reqId":"z1DPk2vmvkTALOrGtv1S","level":3,"time":"2020-09-13T22:40:22+00:00","remoteAddr":"","user":"--","app":"PHP","method":"","url":"--","message":"Trying to access array offset on value of type null at /var/www/html/lib/private/Files/Cache/Scanner.php#418","userAgent":"--","version":"19.0.3.1"}

{"reqId":"z1DPk2vmvkTALOrGtv1S","level":3,"time":"2020-09-13T22:40:22+00:00","remoteAddr":"","user":"--","app":"PHP","method":"","url":"--","message":"Trying to access array offset on value of type null at /var/www/html/lib/private/Files/Cache/Scanner.php#418","userAgent":"--","version":"19.0.3.1"}

{"reqId":"z1DPk2vmvkTALOrGtv1S","level":3,"time":"2020-09-13T22:40:22+00:00","remoteAddr":"","user":"--","app":"PHP","method":"","url":"--","message":"Trying to access array offset on value of type null at /var/www/html/lib/private/Files/Cache/Scanner.php#418","userAgent":"--","version":"19.0.3.1"}

{"reqId":"z1DPk2vmvkTALOrGtv1S","level":3,"time":"2020-09-13T22:40:22+00:00","remoteAddr":"","user":"--","app":"PHP","method":"","url":"--","message":"Trying to access array offset on value of type null at /var/www/html/lib/private/Files/Cache/Scanner.php#418","userAgent":"--","version":"19.0.3.1"}

{"reqId":"z1DPk2vmvkTALOrGtv1S","level":3,"time":"2020-09-13T22:40:23+00:00","remoteAddr":"","user":"--","app":"PHP","method":"","url":"--","message":"Trying to access array offset on value of type null at /var/www/html/lib/private/Files/Cache/Scanner.php#418","userAgent":"--","version":"19.0.3.1"}

{"reqId":"z1DPk2vmvkTALOrGtv1S","level":3,"time":"2020-09-13T22:40:23+00:00","remoteAddr":"","user":"--","app":"PHP","method":"","url":"--","message":"Trying to access array offset on value of type null at /var/www/html/lib/private/Files/Cache/Scanner.php#418","userAgent":"--","version":"19.0.3.1"}

{"reqId":"z1DPk2vmvkTALOrGtv1S","level":3,"time":"2020-09-13T22:40:24+00:00","remoteAddr":"","user":"--","app":"PHP","method":"","url":"--","message":"Trying to access array offset on value of type null at /var/www/html/lib/private/Files/Cache/Scanner.php#418","userAgent":"--","version":"19.0.3.1"}

{"reqId":"z1DPk2vmvkTALOrGtv1S","level":3,"time":"2020-09-13T22:40:24+00:00","remoteAddr":"","user":"--","app":"PHP","method":"","url":"--","message":"Trying to access array offset on value of type null at /var/www/html/lib/private/Files/Cache/Scanner.php#418","userAgent":"--","version":"19.0.3.1"}

{"reqId":"z1DPk2vmvkTALOrGtv1S","level":3,"time":"2020-09-13T22:40:24+00:00","remoteAddr":"","user":"--","app":"PHP","method":"","url":"--","message":"opendir(/var/www/html/data/andre/files/Sonstiges/Filme///////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////
////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////
/////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////Filme von Peter): failed to open dir: Filename too long at /var/www/html/lib/private/Files/Storage/Local.php#131","userAgent":"--","version":"19.0.3.1"}

{"reqId":"z1DPk2vmvkTALOrGtv1S","level":3,"time":"2020-09-13T22:40:26+00:00","remoteAddr":"","user":"--","app":"PHP","method":"","url":"--","message":"Trying to access array offset on value of type null at /var/www/html/lib/private/Files/Cache/Scanner.php#418","userAgent":"--","version":"19.0.3.1"}

{"reqId":"z1DPk2vmvkTALOrGtv1S","level":3,"time":"2020-09-13T22:40:26+00:00","remoteAddr":"","user":"--","app":"PHP","method":"","url":"--","message":"Trying to access array offset on value of type null at /var/www/html/lib/private/Files/Cache/Scanner.php#418","userAgent":"--","version":"19.0.3.1"}

{"reqId":"z1DPk2vmvkTALOrGtv1S","level":3,"time":"2020-09-13T22:40:26+00:00","remoteAddr":"","user":"--","app":"PHP","method":"","url":"--","message":"Trying to access array offset on value of type null at /var/www/html/lib/private/Files/Cache/Scanner.php#418","userAgent":"--","version":"19.0.3.1"}

{"reqId":"z1DPk2vmvkTALOrGtv1S","level":3,"time":"2020-09-13T22:40:26+00:00","remoteAddr":"","user":"--","app":"PHP","method":"","url":"--","message":"Trying to access array offset on value of type null at /var/www/html/lib/private/Files/Cache/Scanner.php#418","userAgent":"--","version":"19.0.3.1"}

{"reqId":"z1DPk2vmvkTALOrGtv1S","level":3,"time":"2020-09-13T22:40:26+00:00","remoteAddr":"","user":"--","app":"PHP","method":"","url":"--","message":"Trying to access array offset on value of type null at /var/www/html/lib/private/Files/Cache/Scanner.php#418","userAgent":"--","version":"19.0.3.1"}

{"reqId":"z1DPk2vmvkTALOrGtv1S","level":3,"time":"2020-09-13T22:40:27+00:00","remoteAddr":"","user":"--","app":"PHP","method":"","url":"--","message":"Trying to access array offset on value of type null at /var/www/html/lib/private/Files/Cache/Scanner.php#418","userAgent":"--","version":"19.0.3.1"}

{"reqId":"z1DPk2vmvkTALOrGtv1S","level":3,"time":"2020-09-13T22:40:27+00:00","remoteAddr":"","user":"--","app":"PHP","method":"","url":"--","message":"Trying to access array offset on value of type null at /var/www/html/lib/private/Files/Cache/Scanner.php#418","userAgent":"--","version":"19.0.3.1"}

{"reqId":"z1DPk2vmvkTALOrGtv1S","level":3,"time":"2020-09-13T22:40:28+00:00","remoteAddr":"","user":"--","app":"PHP","method":"","url":"--","message":"Trying to access array offset on value of type null at /var/www/html/lib/private/Files/Cache/Scanner.php#418","userAgent":"--","version":"19.0.3.1"}

{"reqId":"z1DPk2vmvkTALOrGtv1S","level":3,"time":"2020-09-13T22:40:28+00:00","remoteAddr":"","user":"--","app":"PHP","method":"","url":"--","message":"opendir(/var/www/html/data/andre/files/Sonstiges/Filme///////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////
////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////
//////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////Filme von Peter): failed to open dir: Filename too long at /var/www/html/lib/private/Files/Storage/Local.php#131","userAgent":"--","version":"19.0.3.1"}

{"reqId":"z1DPk2vmvkTALOrGtv1S","level":3,"time":"2020-09-13T22:40:29+00:00","remoteAddr":"","user":"--","app":"PHP","method":"","url":"--","message":"Trying to access array offset on value of type null at /var/www/html/lib/private/Files/Cache/Scanner.php#418","userAgent":"--","version":"19.0.3.1"}

{"reqId":"z1DPk2vmvkTALOrGtv1S","level":3,"time":"2020-09-13T22:40:30+00:00","remoteAddr":"","user":"--","app":"PHP","method":"","url":"--","message":"Trying to access array offset on value of type null at /var/www/html/lib/private/Files/Cache/Scanner.php#418","userAgent":"--","version":"19.0.3.1"}

{"reqId":"z1DPk2vmvkTALOrGtv1S","level":3,"time":"2020-09-13T22:40:30+00:00","remoteAddr":"","user":"--","app":"PHP","method":"","url":"--","message":"Trying to access array offset on value of type null at /var/www/html/lib/private/Files/Cache/Scanner.php#418","userAgent":"--","version":"19.0.3.1"}

{"reqId":"z1DPk2vmvkTALOrGtv1S","level":3,"time":"2020-09-13T22:40:30+00:00","remoteAddr":"","user":"--","app":"PHP","method":"","url":"--","message":"Trying to access array offset on value of type null at /var/www/html/lib/private/Files/Cache/Scanner.php#418","userAgent":"--","version":"19.0.3.1"}

{"reqId":"z1DPk2vmvkTALOrGtv1S","level":3,"time":"2020-09-13T22:40:31+00:00","remoteAddr":"","user":"--","app":"PHP","method":"","url":"--","message":"Trying to access array offset on value of type null at /var/www/html/lib/private/Files/Cache/Scanner.php#418","userAgent":"--","version":"19.0.3.1"}

{"reqId":"z1DPk2vmvkTALOrGtv1S","level":3,"time":"2020-09-13T22:40:31+00:00","remoteAddr":"","user":"--","app":"PHP","method":"","url":"--","message":"Trying to access array offset on value of type null at /var/www/html/lib/private/Files/Cache/Scanner.php#418","userAgent":"--","version":"19.0.3.1"}

{"reqId":"z1DPk2vmvkTALOrGtv1S","level":3,"time":"2020-09-13T22:40:32+00:00","remoteAddr":"","user":"--","app":"PHP","method":"","url":"--","message":"Trying to access array offset on value of type null at /var/www/html/lib/private/Files/Cache/Scanner.php#418","userAgent":"--","version":"19.0.3.1"}

{"reqId":"z1DPk2vmvkTALOrGtv1S","level":3,"time":"2020-09-13T22:40:32+00:00","remoteAddr":"","user":"--","app":"PHP","method":"","url":"--","message":"Trying to access array offset on value of type null at /var/www/html/lib/private/Files/Cache/Scanner.php#418","userAgent":"--","version":"19.0.3.1"}

{"reqId":"z1DPk2vmvkTALOrGtv1S","level":3,"time":"2020-09-13T22:40:32+00:00","remoteAddr":"","user":"--","app":"PHP","method":"","url":"--","message":"opendir(/var/www/html/data/andre/files/Sonstiges/Filme///////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////
////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////
///////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////Filme von Peter): failed to open dir: Filename too long at /var/www/html/lib/private/Files/Storage/Local.php#131","userAgent":"--","version":"19.0.3.1"}

{"reqId":"z1DPk2vmvkTALOrGtv1S","level":3,"time":"2020-09-13T22:40:35+00:00","remoteAddr":"","user":"--","app":"PHP","method":"","url":"--","message":"Trying to access array offset on value of type null at /var/www/html/lib/private/Files/Cache/Scanner.php#418","userAgent":"--","version":"19.0.3.1"}

{"reqId":"z1DPk2vmvkTALOrGtv1S","level":3,"time":"2020-09-13T22:40:35+00:00","remoteAddr":"","user":"--","app":"PHP","method":"","url":"--","message":"Trying to access array offset on value of type null at /var/www/html/lib/private/Files/Cache/Scanner.php#418","userAgent":"--","version":"19.0.3.1"}

{"reqId":"z1DPk2vmvkTALOrGtv1S","level":3,"time":"2020-09-13T22:40:35+00:00","remoteAddr":"","user":"--","app":"PHP","method":"","url":"--","message":"Trying to access array offset on value of type null at /var/www/html/lib/private/Files/Cache/Scanner.php#418","userAgent":"--","version":"19.0.3.1"}

{"reqId":"z1DPk2vmvkTALOrGtv1S","level":3,"time":"2020-09-13T22:40:36+00:00","remoteAddr":"","user":"--","app":"PHP","method":"","url":"--","message":"Trying to access array offset on value of type null at /var/www/html/lib/private/Files/Cache/Scanner.php#418","userAgent":"--","version":"19.0.3.1"}

{"reqId":"z1DPk2vmvkTALOrGtv1S","level":3,"time":"2020-09-13T22:40:36+00:00","remoteAddr":"","user":"--","app":"PHP","method":"","url":"--","message":"Trying to access array offset on value of type null at /var/www/html/lib/private/Files/Cache/Scanner.php#418","userAgent":"--","version":"19.0.3.1"}

{"reqId":"z1DPk2vmvkTALOrGtv1S","level":3,"time":"2020-09-13T22:40:37+00:00","remoteAddr":"","user":"--","app":"PHP","method":"","url":"--","message":"Trying to access array offset on value of type null at /var/www/html/lib/private/Files/Cache/Scanner.php#418","userAgent":"--","version":"19.0.3.1"}

{"reqId":"z1DPk2vmvkTALOrGtv1S","level":3,"time":"2020-09-13T22:40:37+00:00","remoteAddr":"","user":"--","app":"PHP","method":"","url":"--","message":"Trying to access array offset on value of type null at /var/www/html/lib/private/Files/Cache/Scanner.php#418","userAgent":"--","version":"19.0.3.1"}

{"reqId":"z1DPk2vmvkTALOrGtv1S","level":3,"time":"2020-09-13T22:40:37+00:00","remoteAddr":"","user":"--","app":"PHP","method":"","url":"--","message":"Trying to access array offset on value of type null at /var/www/html/lib/private/Files/Cache/Scanner.php#418","userAgent":"--","version":"19.0.3.1"}

{"reqId":"z1DPk2vmvkTALOrGtv1S","level":3,"time":"2020-09-13T22:40:38+00:00","remoteAddr":"","user":"--","app":"PHP","method":"","url":"--","message":"opendir(/var/www/html/data/andre/files/Sonstiges/Filme///////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////
////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////
////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////Filme von Peter): failed to open dir: Filename too long at /var/www/html/lib/private/Files/Storage/Local.php#131","userAgent":"--","version":"19.0.3.1"}

{"reqId":"z1DPk2vmvkTALOrGtv1S","level":3,"time":"2020-09-13T22:40:40+00:00","remoteAddr":"","user":"--","app":"PHP","method":"","url":"--","message":"Trying to access array offset on value of type null at /var/www/html/lib/private/Files/Cache/Scanner.php#418","userAgent":"--","version":"19.0.3.1"}

{"reqId":"z1DPk2vmvkTALOrGtv1S","level":3,"time":"2020-09-13T22:40:40+00:00","remoteAddr":"","user":"--","app":"PHP","method":"","url":"--","message":"Trying to access array offset on value of type null at /var/www/html/lib/private/Files/Cache/Scanner.php#418","userAgent":"--","version":"19.0.3.1"}

{"reqId":"z1DPk2vmvkTALOrGtv1S","level":3,"time":"2020-09-13T22:40:41+00:00","remoteAddr":"","user":"--","app":"PHP","method":"","url":"--","message":"Trying to access array offset on value of type null at /var/www/html/lib/private/Files/Cache/Scanner.php#418","userAgent":"--","version":"19.0.3.1"}

{"reqId":"z1DPk2vmvkTALOrGtv1S","level":3,"time":"2020-09-13T22:40:41+00:00","remoteAddr":"","user":"--","app":"PHP","method":"","url":"--","message":"Trying to access array offset on value of type null at /var/www/html/lib/private/Files/Cache/Scanner.php#418","userAgent":"--","version":"19.0.3.1"}

{"reqId":"z1DPk2vmvkTALOrGtv1S","level":3,"time":"2020-09-13T22:40:41+00:00","remoteAddr":"","user":"--","app":"PHP","method":"","url":"--","message":"Trying to access array offset on value of type null at /var/www/html/lib/private/Files/Cache/Scanner.php#418","userAgent":"--","version":"19.0.3.1"}

{"reqId":"z1DPk2vmvkTALOrGtv1S","level":3,"time":"2020-09-13T22:40:42+00:00","remoteAddr":"","user":"--","app":"PHP","method":"","url":"--","message":"Trying to access array offset on value of type null at /var/www/html/lib/private/Files/Cache/Scanner.php#418","userAgent":"--","version":"19.0.3.1"}

{"reqId":"z1DPk2vmvkTALOrGtv1S","level":3,"time":"2020-09-13T22:40:42+00:00","remoteAddr":"","user":"--","app":"PHP","method":"","url":"--","message":"Trying to access array offset on value of type null at /var/www/html/lib/private/Files/Cache/Scanner.php#418","userAgent":"--","version":"19.0.3.1"}

{"reqId":"z1DPk2vmvkTALOrGtv1S","level":3,"time":"2020-09-13T22:40:43+00:00","remoteAddr":"","user":"--","app":"PHP","method":"","url":"--","message":"Trying to access array offset on value of type null at /var/www/html/lib/private/Files/Cache/Scanner.php#418","userAgent":"--","version":"19.0.3.1"}

{"reqId":"z1DPk2vmvkTALOrGtv1S","level":3,"time":"2020-09-13T22:40:43+00:00","remoteAddr":"","user":"--","app":"PHP","method":"","url":"--","message":"opendir(/var/www/html/data/andre/files/Sonstiges/Filme///////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////
////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////
/////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////Filme von Peter): failed to open dir: Filename too long at /var/www/html/lib/private/Files/Storage/Local.php#131","userAgent":"--","version":"19.0.3.1"}

{"reqId":"z1DPk2vmvkTALOrGtv1S","level":3,"time":"2020-09-13T22:40:46+00:00","remoteAddr":"","user":"--","app":"PHP","method":"","url":"--","message":"Trying to access array offset on value of type null at /var/www/html/lib/private/Files/Cache/Scanner.php#418","userAgent":"--","version":"19.0.3.1"}

{"reqId":"z1DPk2vmvkTALOrGtv1S","level":3,"time":"2020-09-13T22:40:46+00:00","remoteAddr":"","user":"--","app":"PHP","method":"","url":"--","message":"Trying to access array offset on value of type null at /var/www/html/lib/private/Files/Cache/Scanner.php#418","userAgent":"--","version":"19.0.3.1"}

{"reqId":"z1DPk2vmvkTALOrGtv1S","level":3,"time":"2020-09-13T22:40:46+00:00","remoteAddr":"","user":"--","app":"PHP","method":"","url":"--","message":"Trying to access array offset on value of type null at /var/www/html/lib/private/Files/Cache/Scanner.php#418","userAgent":"--","version":"19.0.3.1"}

{"reqId":"z1DPk2vmvkTALOrGtv1S","level":3,"time":"2020-09-13T22:40:46+00:00","remoteAddr":"","user":"--","app":"PHP","method":"","url":"--","message":"Trying to access array offset on value of type null at /var/www/html/lib/private/Files/Cache/Scanner.php#418","userAgent":"--","version":"19.0.3.1"}

{"reqId":"z1DPk2vmvkTALOrGtv1S","level":3,"time":"2020-09-13T22:40:46+00:00","remoteAddr":"","user":"--","app":"PHP","method":"","url":"--","message":"Trying to access array offset on value of type null at /var/www/html/lib/private/Files/Cache/Scanner.php#418","userAgent":"--","version":"19.0.3.1"}

{"reqId":"z1DPk2vmvkTALOrGtv1S","level":3,"time":"2020-09-13T22:40:46+00:00","remoteAddr":"","user":"--","app":"PHP","method":"","url":"--","message":"Trying to access array offset on value of type null at /var/www/html/lib/private/Files/Cache/Scanner.php#418","userAgent":"--","version":"19.0.3.1"}

{"reqId":"z1DPk2vmvkTALOrGtv1S","level":3,"time":"2020-09-13T22:40:46+00:00","remoteAddr":"","user":"--","app":"PHP","method":"","url":"--","message":"Trying to access array offset on value of type null at /var/www/html/lib/private/Files/Cache/Scanner.php#418","userAgent":"--","version":"19.0.3.1"}

{"reqId":"z1DPk2vmvkTALOrGtv1S","level":3,"time":"2020-09-13T22:40:46+00:00","remoteAddr":"","user":"--","app":"PHP","method":"","url":"--","message":"Trying to access array offset on value of type null at /var/www/html/lib/private/Files/Cache/Scanner.php#418","userAgent":"--","version":"19.0.3.1"}