First, run the following as your Nextcloud (NC) user:

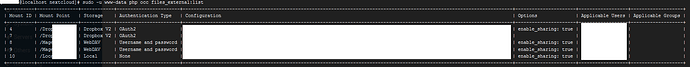

`php occ files_external:list`

This outputs a list of all external storage mounts (external shares). Check that it is not empty. You should see something similar to this:

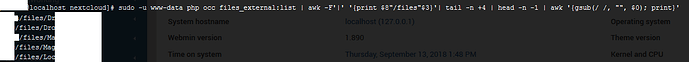

Then run the following and check the output. It should be a list of paths in the form USERNAME/files/SHARENAME:

`php occ files_external:list | awk -F'|' '{print $8"/files"$3}' | tail -n +4 | head -n -1 | awk '{gsub(/ /, "", $0); print}'`
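To show why the pipeline picks fields 8 and 3, here is the same pipeline wrapped in a small function with each stage commented, fed a hypothetical sample table. This is a sketch that assumes the table layout implied by the command above: because each row starts with `|`, the first field is empty, so Mount Point is field 3 and Applicable Users is field 8. The function name `mount_paths` and the sample rows are made up for illustration. Note that `head -n -1` is a GNU coreutils extension and does not work with BSD/macOS `head`.

```shell
#!/usr/bin/env bash
# Hypothetical helper: the pipeline above, one commented stage per line.
# ASSUMPTION: rows look like "| Mount ID | Mount Point | ... | Applicable Users | ... |",
# so with -F'|' field 1 is empty, field 3 is Mount Point, field 8 is Applicable Users.
mount_paths() {
  awk -F'|' '{print $8"/files"$3}' |   # "<users>/files<mount point>" for every line
    tail -n +4 |                       # skip top border, header row and separator
    head -n -1 |                       # skip bottom border (GNU head only)
    awk '{gsub(/ /, "", $0); print}'   # remove the table's padding spaces
}

# Hypothetical sample shaped like the command's table (borders shortened).
sample='+---+
| Mount ID | Mount Point  | Storage | Authentication Type | Configuration   | Options | Applicable Users | Applicable Groups |
+---+
| 1        | /Mount_Point | Local   | None                | datadir: /srv/a |         | alice            |                   |
| 2        | /Other       | Local   | None                | datadir: /srv/b |         | bob              |                   |
+---+'

printf '%s\n' "$sample" | mount_paths
# alice/files/Mount_Point
# bob/files/Other
```

Because the last `awk` removes every space, mount point names that contain spaces come out squashed, and a mount whose Applicable Users shows several users or "All" does not produce a usable path. Check such rows by hand against the first table.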

Then take one path (built from the first table, or taken from the list produced by the last command) and scan it to see what happens:

`php occ files:scan --path="/USERNAME/files/Mount_Point"`
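If you have many mounts, the list and the scan can be chained into one loop. This is a minimal, untested sketch rather than a finished tool: `scan_external_mounts` and the `OCC` array are hypothetical names, and it assumes the same table layout and GNU `head` as the pipeline above, plus exactly one user per mount. The paths from the pipeline have no leading slash, so the loop adds one to match the `--path="/USERNAME/files/..."` form.

```shell
#!/usr/bin/env bash
# Hypothetical helper: run files:scan once for every external mount path.
# ASSUMPTIONS: Mount Point is field 3 and Applicable Users is field 8 of the
# files_external:list table, GNU head is available, one user per mount.

# How occ is invoked; adjust to your setup, e.g. OCC=(sudo -u www-data php occ)
OCC=(php occ)

scan_external_mounts() {
  "${OCC[@]}" files_external:list \
    | awk -F'|' '{print $8"/files"$3}' | tail -n +4 | head -n -1 \
    | awk '{gsub(/ /, "", $0); print}' \
    | while IFS= read -r path; do
        echo "Scanning /$path"
        # </dev/null stops occ from reading the remaining paths from stdin
        "${OCC[@]}" files:scan --path="/$path" </dev/null
      done
}

# Usage, from the Nextcloud directory that contains occ:
#   scan_external_mounts
```

Scanning mounts one at a time like this makes it easy to see which mount fails, which is the point of the manual check above.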

Everything must be executed as your NC user. On Ubuntu I prefixed each command with `sudo -u www-data`, since www-data is my NC user, for example `sudo -u www-data php occ files_external:list`. Hope this helps with troubleshooting.