So, uh, I have quite a setup I’ve been trying to serve on my personal network. I’ll try my best to provide what details I can.

I have an Ubuntu server with Docker installed via Snap. Caddy is installed directly on Ubuntu itself, and I’ve been using it to serve various services running in Docker under specific subfolders. I’m using Avahi to resolve the hostname rather than a DNS server, which means I only intend Nextcloud to be accessible within my Wi-Fi network, not publicly.

I’ve been struggling with two problems:

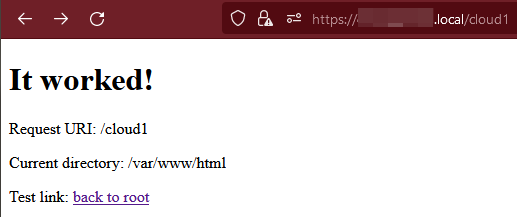

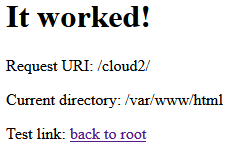

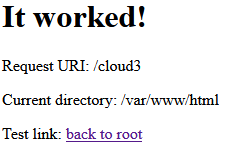

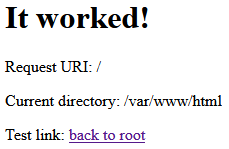

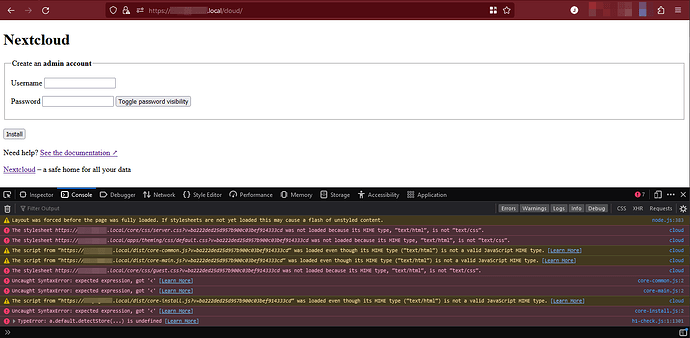

- Serving Nextcloud at

hostname.local/cloud, and - getting the Nextcloud docker container itself to connect to Mariadb.

Regarding serving under `/cloud`: I’ve been using Portainer and deploying this Compose file as a stack:

```yaml
version: '3.8'

volumes:
  nextcloud:
  db:

services:
  db:
    image: mariadb:10.6
    restart: always
    ports:
      - 3306:3306
    command: --transaction-isolation=READ-COMMITTED --log-bin=binlog --binlog-format=ROW
    volumes:
      - db:/var/lib/mysql
    environment:
      - MYSQL_ROOT_PASSWORD=pass1
      - MYSQL_PASSWORD=pass2
      - MYSQL_DATABASE=nextcloud
      - MYSQL_USER=nextcloud

  app:
    image: nextcloud
    restart: always
    ports:
      - 8383:80
    links:
      - db
    volumes:
      - /media/www/nextcloud/data:/var/www/html/data
      - nextcloud:/var/www/html
      - /media/www/nextcloud/config/.htaccess:/var/www/html/.htaccess
    environment:
      - MYSQL_PASSWORD=pass2
      - MYSQL_DATABASE=nextcloud
      - MYSQL_USER=nextcloud
      - MYSQL_HOST=db
```

For my Caddyfile in Ubuntu, I have:

```
hostname.local, 10.0.0.171 {
	tls /hostname.local.cert.pem /hostname.local.key.pem
	encode zstd gzip

	# Handle subfolders

	# Here's portainer
	handle_path /docker/* {
		reverse_proxy https://localhost:9443 {
			transport http {
				tls_insecure_skip_verify
			}
		}
	}

	# Here's Nextcloud...eventually
	handle /cloud* {
		reverse_proxy localhost:8383
	}

	# Otherwise, host PHP
	root * /var/www/php/
	php_fastcgi unix//run/php/php8.1-fpm.sock
	file_server
}
```

As the `/media/www/nextcloud/config/.htaccess:/var/www/html/.htaccess` volume in the Compose file shows, I’ve tried to configure Apache with the `.htaccess` file below. It’s a copy of the one already in the container, with all the rewrite rules changed to point at `/cloud`.

```apache
<IfModule mod_headers.c>
  <IfModule mod_setenvif.c>
    <IfModule mod_fcgid.c>
      SetEnvIfNoCase ^Authorization$ "(.+)" XAUTHORIZATION=$1
      RequestHeader set XAuthorization %{XAUTHORIZATION}e env=XAUTHORIZATION
    </IfModule>
    <IfModule mod_proxy_fcgi.c>
      SetEnvIfNoCase Authorization "(.+)" HTTP_AUTHORIZATION=$1
    </IfModule>
    <IfModule mod_lsapi.c>
      SetEnvIfNoCase ^Authorization$ "(.+)" XAUTHORIZATION=$1
      RequestHeader set XAuthorization %{XAUTHORIZATION}e env=XAUTHORIZATION
    </IfModule>
  </IfModule>

  <IfModule mod_env.c>
    # Add security and privacy related headers

    # Avoid doubled headers by unsetting headers in "onsuccess" table,
    # then add headers to "always" table: https://github.com/nextcloud/server/pull/19002
    Header onsuccess unset Referrer-Policy
    Header always set Referrer-Policy "no-referrer"

    Header onsuccess unset X-Content-Type-Options
    Header always set X-Content-Type-Options "nosniff"

    Header onsuccess unset X-Frame-Options
    Header always set X-Frame-Options "SAMEORIGIN"

    Header onsuccess unset X-Permitted-Cross-Domain-Policies
    Header always set X-Permitted-Cross-Domain-Policies "none"

    Header onsuccess unset X-Robots-Tag
    Header always set X-Robots-Tag "none"

    Header onsuccess unset X-XSS-Protection
    Header always set X-XSS-Protection "1; mode=block"

    SetEnv modHeadersAvailable true
  </IfModule>

  # Add cache control for static resources
  <FilesMatch "\.(css|js|svg|gif|png|jpg|ico|wasm|tflite)$">
    Header set Cache-Control "max-age=15778463"
  </FilesMatch>

  <FilesMatch "\.(css|js|svg|gif|png|jpg|ico|wasm|tflite)(\?v=.*)?$">
    Header set Cache-Control "max-age=15778463, immutable"
  </FilesMatch>

  # Let browsers cache WOFF files for a week
  <FilesMatch "\.woff2?$">
    Header set Cache-Control "max-age=604800"
  </FilesMatch>
</IfModule>

# PHP 7.x
<IfModule mod_php7.c>
  php_value mbstring.func_overload 0
  php_value default_charset 'UTF-8'
  php_value output_buffering 0
  <IfModule mod_env.c>
    SetEnv htaccessWorking true
  </IfModule>
</IfModule>

# PHP 8+
<IfModule mod_php.c>
  php_value mbstring.func_overload 0
  php_value default_charset 'UTF-8'
  php_value output_buffering 0
  <IfModule mod_env.c>
    SetEnv htaccessWorking true
  </IfModule>
</IfModule>

<IfModule mod_mime.c>
  AddType image/svg+xml svg svgz
  AddType application/wasm wasm
  AddEncoding gzip svgz
</IfModule>

<IfModule mod_dir.c>
  DirectoryIndex index.php index.html
</IfModule>

<IfModule pagespeed_module>
  ModPagespeed Off
</IfModule>

<IfModule mod_rewrite.c>
  RewriteEngine on
  RewriteCond %{REQUEST_URI} !^/cloud/
  RewriteRule ^(.*)$ /cloud/$1 [L,R=301]
  RewriteCond %{HTTP_USER_AGENT} DavClnt
  RewriteRule ^$ /cloud/remote.php/webdav/ [L,R=302]
  RewriteRule .* - [env=HTTP_AUTHORIZATION:%{HTTP:Authorization}]
  RewriteRule ^\.well-known/carddav /cloud/remote.php/dav/ [R=301,L]
  RewriteRule ^\.well-known/caldav /cloud/remote.php/dav/ [R=301,L]
  RewriteRule ^remote/(.*) /cloud/remote.php [QSA,L]
  RewriteRule ^(?:build|tests|config|lib|3rdparty|templates)/.* - [R=404,L]
  RewriteRule ^\.well-known/(?!acme-challenge|pki-validation) /cloud/index.php [QSA,L]
  RewriteRule ^(?:\.(?!well-known)|autotest|occ|issue|indie|db_|console).* - [R=404,L]
</IfModule>

AddDefaultCharset utf-8
Options -Indexes
```

So yeah, any help with the above would be appreciated. I think I also played around a bit with an environment variable (`OVERWRITEWEBROOT`, I believe) in previous attempts.
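For reference, the official `nextcloud` image documents a few reverse-proxy environment variables (`OVERWRITEWEBROOT`, `OVERWRITEHOST`, `OVERWRITEPROTOCOL`, `TRUSTED_PROXIES`). Below is a minimal sketch of how they might look for this stack. It assumes Caddy strips the `/cloud` prefix before proxying (for example `handle_path /cloud/*` instead of the current `handle /cloud*`), so Apache in the container keeps serving from `/` and Nextcloud only has to generate links under `/cloud`; in that case the custom `.htaccess` mount is probably not needed. The `TRUSTED_PROXIES` subnet is an assumption based on the `172.28.0.2` address in the curl output below; `docker network inspect` on the stack's network would show the real one.

```yaml
# Sketch only: environment for the existing "app" service, assuming Caddy
# strips the /cloud prefix before proxying to localhost:8383.
services:
  app:
    environment:
      - MYSQL_PASSWORD=pass2
      - MYSQL_DATABASE=nextcloud
      - MYSQL_USER=nextcloud
      - MYSQL_HOST=db
      # Make Nextcloud generate URLs under /cloud instead of /
      - OVERWRITEWEBROOT=/cloud
      # Host and scheme as the browser sees them, not as the container sees them
      - OVERWRITEHOST=hostname.local
      - OVERWRITEPROTOCOL=https
      # Assumed subnet of the Compose network (guessed from 172.28.0.2)
      - TRUSTED_PROXIES=172.28.0.0/16
```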

Regarding MariaDB: any connection I attempt from the Nextcloud container to `db` always times out. What’s up with that?

```
$ sudo docker exec -it nextcloud_app_1 /bin/bash
# curl -v telnet://db:3306 --connect-timeout 10
* Trying 172.28.0.2:3306...
* Connection timed out after 10001 milliseconds
* Closing connection 0
curl: (28) Connection timed out after 10001 milliseconds
```

Just in case, I also ran `sudo ufw allow 3306/tcp`, but that doesn't appear to solve the issue.
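A couple of things that may be worth ruling out, offered as a sketch rather than a confirmed fix. Containers on the same Compose network reach each other on the container port directly, so the `3306:3306` host mapping isn't needed for `app` to reach `db`. A `ufw allow` rule applies to traffic addressed to the host itself, so it may not cover traffic forwarded between containers on a Docker bridge. The fragment below puts both services on an explicitly named network (`nextcloud_net` is a hypothetical name) and replaces the legacy `links` with `depends_on`. Since the name already resolves to `172.28.0.2`, a timeout (rather than "connection refused") usually means packets are being dropped, so if it persists with this layout, host-level filtering (ufw/iptables, or how the Snap build of Docker interacts with them) would be the next place to look.

```yaml
# Sketch only: same two services, trimmed to the networking-related keys.
# "nextcloud_net" is a hypothetical network name.
services:
  db:
    image: mariadb:10.6
    # No "ports:" here; app reaches db on 3306 over nextcloud_net
    networks:
      - nextcloud_net

  app:
    image: nextcloud
    ports:
      - 8383:80
    # Controls start order only (db before app), not database readiness
    depends_on:
      - db
    networks:
      - nextcloud_net

networks:
  nextcloud_net:
    driver: bridge
```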