Just tested with 25k files (toucan.jpg) and it worked! (It threw some errors in the middle…) The sync was slow at 20-30 Mbit/s, but the result was a complete sync. The logic you created works very well, since all folders are the same size.

Hi wwe, as I said, I have the following scenario:

An already locally synced folder, now 330 GB with almost 67,000 files sorted by year.

When switching to Virtual File System (VFS), the sync never finishes, so after a few days I abort it.

Workaround: create an empty home folder and upload the files in smaller batches. Eventually the sync completes. But when I then connect a second computer to this home share, it also never finishes syncing, even after several days.

So in my opinion the number of files is simply too large, and code changes are needed so that users like me don't have to rely on workarounds, if Nextcloud really wants to be usable like other hosting solutions such as SeaDrive, OneDrive and many others.

The same problem appears once you have finally synced your 100,000 files to the server and a second client wants to perform its initial sync with the server: it also aborts, because the number of files is too large.

In my last test I created 45,000 files (toucan.jpg) in a Nextcloud-synced directory, and the client successfully uploaded all of them to the server. The sync was pretty slow, at 10-20 Mbit/s and maybe 10-20 files per second, which is not even close to the network and hardware limits, but it succeeded. That is the most important observation for me.
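The test script used in this thread isn't shown here. As a rough, hypothetical stand-in, this Python sketch fills a folder with copies of one sample image (e.g. toucan.jpg), spread evenly across numbered subfolders so every folder ends up the same size. The function name, the folder naming and the default of 100 files per folder are my own assumptions. Point it at a test account's sync folder, not at real data.

```python
# Hypothetical test-data generator (not the script referenced in the thread):
# copies one sample file many times, spread evenly over numbered subfolders.
import shutil
from pathlib import Path


def make_test_tree(sample: Path, target: Path, total_files: int,
                   files_per_folder: int = 100) -> int:
    """Copy `sample` into `target` `total_files` times, `files_per_folder` per subfolder.

    Returns the number of files created.
    """
    if files_per_folder < 1:
        raise ValueError("files_per_folder must be >= 1")
    created = 0
    for i in range(total_files):
        # Files 0..99 go to folder_00000, 100..199 to folder_00001, and so on.
        folder = target / f"folder_{i // files_per_folder:05d}"
        folder.mkdir(parents=True, exist_ok=True)
        # Keep the original extension so the copies are still recognized as images.
        shutil.copyfile(sample, folder / f"{sample.stem}_{i:06d}{sample.suffix}")
        created += 1
    return created
```

For example, `make_test_tree(Path("toucan.jpg"), Path.home() / "Nextcloud" / "synctest", 45000)` would create a tree of the size used above (the target path is only an example).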

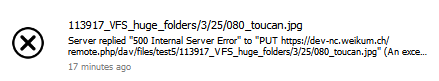

There was an error in the middle, but apparently it had no impact: I can see all the files on the server…

My system: NC 24.0.2 running as a Docker container on an old desktop with an i3 CPU, Windows client 3.5.1.

I will do more tests with VFS, also perform a fresh server-to-client sync, and keep you updated…

So I ran another test where NC runs on a VM in the cloud. Using your script, I created ~100,000 files. The file stored in every folder is a 3 KB PNG. The upload starts but is very slow, even though my upload line is 50 Mbit/s.

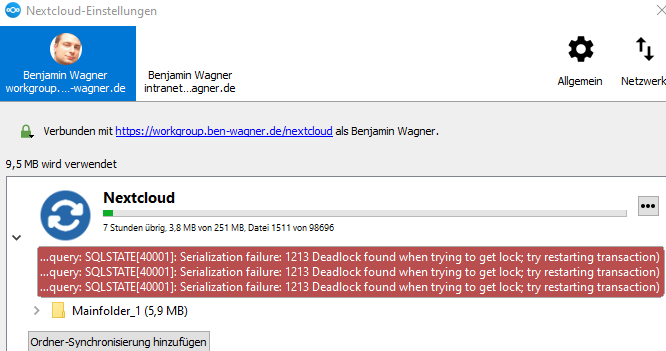

So my guess is that it is a combination of the NC code and the MySQL database, which is not optimized for bulk loads. I would say the problem is not the size of the data but the database operations. Would you agree?
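A quick back-of-the-envelope check supports the idea that per-file overhead, not bandwidth, is the bottleneck. This sketch assumes 3 KB = 3,000 bytes and borrows the 10-20 files/s rate from the earlier 45,000-file test; the helper names are illustrative only.

```python
# Back-of-the-envelope comparison (illustrative helpers, assumed numbers):
# raw transfer time vs. time dominated by a fixed per-file cost.

def transfer_seconds(n_files: int, file_bytes: int, link_mbit_s: float) -> float:
    """Time to push the raw bytes over the link, ignoring all per-file overhead."""
    total_bits = n_files * file_bytes * 8
    return total_bits / (link_mbit_s * 1_000_000)


def per_file_seconds(n_files: int, files_per_s: float) -> float:
    """Time if every file costs a fixed amount of work (requests, DB writes, ...)."""
    return n_files / files_per_s
```

With the numbers above, `transfer_seconds(100_000, 3_000, 50)` gives 48 s of raw transfer, whereas `per_file_seconds(100_000, 10)` gives 10,000 s (about 2.8 hours). Even at 20 files/s it is 5,000 s, so the per-file cost outweighs the transfer time roughly 100 to 200 times.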

I fully agree the issue must be related to the database and its operations (I'm not even sure whether the error comes from the client's local SQLite DB or from the server backend)… But now it seems to work well enough: in my tests I saw these error messages as well but didn't notice any issues, and the file uploads completed successfully.

My conclusion: there are issues, but in the majority of cases I would expect it to work. If you need to upload huge data sets, I recommend adding them in portions and waiting until the client completes its uploads before adding more files.
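The "add in portions" advice can be automated with a small script. This is a hypothetical sketch: the function names are my own, and since I know of no simple, documented way to ask the desktop client whether it has finished, the `wait` callback is left to you (for example, a prompt where you press Enter once the client reports it is up to date).

```python
# Hypothetical helper: move files from a staging folder into the synced folder
# in fixed-size portions, pausing after each portion via a caller-supplied wait().
import shutil
from pathlib import Path
from typing import Callable, Iterable, Iterator, List, TypeVar

T = TypeVar("T")


def batches(items: Iterable[T], size: int) -> Iterator[List[T]]:
    """Yield consecutive chunks of at most `size` items."""
    if size < 1:
        raise ValueError("size must be >= 1")
    batch: List[T] = []
    for item in items:
        batch.append(item)
        if len(batch) == size:
            yield batch
            batch = []
    if batch:
        yield batch


def feed_in_portions(staging: Path, synced: Path, size: int,
                     wait: Callable[[int], None]) -> int:
    """Move files from `staging` to `synced` (keeping relative paths), `size` at a
    time, calling `wait(batch_number)` after each batch. Returns the batch count."""
    # Collect the file list up front so moving files doesn't disturb the walk.
    files = sorted(p for p in staging.rglob("*") if p.is_file())
    count = 0
    for count, batch in enumerate(batches(files, size), start=1):
        for src in batch:
            dst = synced / src.relative_to(staging)
            dst.parent.mkdir(parents=True, exist_ok=True)
            shutil.move(str(src), str(dst))
        wait(count)  # e.g. block until the user confirms the client is idle
    return count
```

For example: `feed_in_portions(Path("staging"), Path.home() / "Nextcloud" / "Photos", 5000, lambda n: input(f"Batch {n} moved. Press Enter once the client shows everything is synced..."))`. The batch size of 5,000 is an arbitrary example.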

So I have now run some more tests:

On one client, switching from disabled to enabled VFS took a few hours, after which it was completely synced. On another local VM the same client aborts with "Server replied an error while reading directory xxx". So it is better than a year ago, but not stable. I also looked into how to improve the local SQLite DB, but I think I should keep my fingers away from that.

So, do you think there will be some improvements in the future?

No idea if it is the same error I was facing. My NC (from 24.x.x to 25.0.1) cancelled the sync because the server had an error reading big folders, mostly smartphone pictures, of 70 GB and 133 GB.

I raised the timeout to `Timeout 1200` in /etc/apache2/apache2.conf and also added `ProxyTimeout 1200`. Additionally, I added these lines to /etc/apache2/mods-enabled/fcgid.conf:

`FcgidIdleTimeout 1200`

`FcgidProcessLifeTime 1200`

`FcgidConnectTimeout 1200`

`FcgidIOTimeout 1200`

My system is fairly low-powered, so I guessed the Windows desktop client might be running into timeouts. I also set `max_execution_time` and `max_input_time` to 5600 in my php.ini.

After restarting php-fpm and apache2, the sync went through without any errors. Every new picture from the smartphone now arrives on my Windows machine promptly, and there are no more errors in the client. Maybe give this a try?

Did not work. The client starts to sync; there are two huge folders (Audio and Video), and in both folders the scan gets to about 50% to 70%, then the clients abort. I have now tried it on two Windows systems, with no chance of getting the sync done.

We're in a similar situation: a huge file list, and VFS never finishes the initial sync, while it works fine for users with restricted file access.

We didn't debug it in depth, but from our initial check we see no performance issues on the server.

Did you file a bug for it? From what I've read, there are no improvements or solutions so far. Thanks

To me it seems the problem is the initial file scan. It doesn't matter whether you use VFS or the traditional file system: if the number of files is too large, it will crash.

I had the same issue without VFS when creating a test scenario with 500 GB of data in ~250,000 files. When I upload the files, it aborts. So maybe the client has some settings that are not optimized for scenarios this large.

On the server side I cannot see any impact.

Thanks for the update.

On our side, we didn't have problems without VFS: the client managed to complete the sync process, though perhaps with some folders unchecked, but this shouldn't affect the scan.

I'm looking through the existing issues but can't find anything related.

I think you are right. We have a directory tree of approx. 100,000 files in 20,000 folders (250 GB in total). After enabling VFS (which includes processing the whole tree), things got really ugly for us: all locally added or modified files are stuck in a "sync pending" state. Before enabling VFS we synced only parts of the tree, and things went just fine. From my point of view, this also seems to be a client-related issue. As a consequence, odd things happen, such as unresponsive Explorer windows and "stuck" applications.

Quite a few users seem to be affected by this issue. Has it been filed as a bug already?

Bug opened

Not from me. Who can open this bug?

In my case, we have already synchronized a group folder with 20,000+ top-level folders, about 220,000 subfolders and 3M+ files, about 5 TB in total, to the server.

When we connect PC clients with virtual files enabled, they seem to sync forever. The client estimates 20-30 days to synchronize, but a cursory look at different folders suggests the folder structure has already been synchronized.
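For scale, if the client's 20-30 day estimate is taken at face value, it implies a rate of only about one to two files per second for roughly 3 million files:

$$
\frac{3\times10^{6}\ \text{files}}{30 \times 86\,400\ \text{s}} \approx 1.2\ \text{files/s},
\qquad
\frac{3\times10^{6}\ \text{files}}{20 \times 86\,400\ \text{s}} \approx 1.7\ \text{files/s}
$$

That is well below the 10-20 files/s seen in the 45,000-file test earlier in the thread, although the setups differ, so the comparison is only indicative.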

So I am not sure whether the virtual file system actually works, and our users are asking the same question.

Can anyone advise on what is actually happening, or whether virtual files actually work with this number of files?

Thank you

Jim

So what is the best way to continue here? My NC instance keeps growing, and I need a way to use VFS because I cannot download all files to the clients.

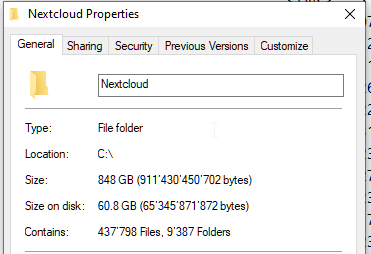

I can't compare with your numbers, but I can say my installation works fine with 437k files and 850 GB in total.

Everybody can open a bug.

Go ahead and contribute!

An update on the situation.

The client works fine AFTER the initial sync. The sad fact is that the initial sync takes more than a week.

This looks quite bad for every new device that connects.

I would say that if virtual files are enabled, the client should first download just the TOP-level folders and show its status as ready, then continue syncing the next level of folders in the background, and once the second level is synced, continue with the third, etc.

And if the user clicks on any folder, the client should fetch that folder's entries right away. That would give a better user experience than the current one: with the current client version, a folder just appears empty while the initial sync is still running, which gives the impression that the client is not working.
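The level-by-level idea with on-demand folders can be sketched as a breadth-first queue where a folder the user opens jumps ahead. This is a hypothetical illustration, not how the Nextcloud desktop client is implemented: `LazyTreeSync` and the `list_dir` callback (standing in for one directory listing request to the server, e.g. a WebDAV PROPFIND with Depth: 1) are my own assumptions.

```python
# Hypothetical sketch of breadth-first folder discovery for a virtual-file client,
# where folders the user opens are listed immediately instead of waiting their turn.
from collections import deque
from typing import Callable, Deque, Dict, List, Optional


class LazyTreeSync:
    def __init__(self, list_dir: Callable[[str], List[str]], root: str = "") -> None:
        # list_dir(path) is an assumed callback returning subfolder names of `path`.
        self.list_dir = list_dir
        self.root = root
        self.known: Dict[str, List[str]] = {}      # folder -> child paths, once listed
        self.queue: Deque[str] = deque([root])     # folders still to list, BFS order

    def _expand(self, path: str) -> None:
        if path in self.known:
            return
        children = [f"{path}/{name}" if path else name for name in self.list_dir(path)]
        self.known[path] = children
        self.queue.extend(children)

    def top_level_ready(self) -> bool:
        """True once the top level is listed, i.e. the client could report 'ready'."""
        return self.root in self.known

    def step(self) -> Optional[str]:
        """Background work: list the next pending folder; return None when done."""
        while self.queue:
            path = self.queue.popleft()
            if path not in self.known:  # skip folders already opened by the user
                self._expand(path)
                return path
        return None

    def open_folder(self, path: str) -> List[str]:
        """User clicked a folder: list it right away and return its subfolders."""
        self._expand(path)
        return self.known[path]
```

In this design the background loop only ever does one listing per `step()`, so a click on a folder is served after at most one in-flight listing rather than after the whole tree has been scanned.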

Just my 2 cents.

Jim

I totally agree. We use OneDrive at work, and it behaves the way you describe. It would be nice if NC also synced only when needed.