My server error logfile shows:

[Mon Apr 19 07:19:21.377363 2021] [proxy_fcgi:error] [pid 12740:tid 140270704207616] (70007)The timeout specified has expired: [client 192.168.10.125:64823] AH01075: Error dispatching request to : (polling)

[Mon Apr 19 07:19:23.402128 2021] [proxy_fcgi:error] [pid 12740:tid 140270720993024] (70007)The timeout specified has expired: [client 192.168.10.125:64816] AH01075: Error dispatching request to : (polling)

[Mon Apr 19 07:19:24.374095 2021] [proxy_fcgi:error] [pid 12740:tid 140270695814912] (70007)The timeout specified has expired: [client 192.168.10.125:64825] AH01075: Error dispatching request to : (polling)

[Mon Apr 19 07:19:29.714338 2021] [proxy_fcgi:error] [pid 12740:tid 140270729385728] (70007)The timeout specified has expired: [client 192.168.10.125:64827] AH01075: Error dispatching request to : (polling)
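To see how often these timeouts occur and which clients they affect, the entries can be counted with a short script. This is only a rough sketch: the regular expression is written against the four lines quoted above, `count_timeouts` is a made-up helper name, and the sample list stands in for the real error log (its location, for example `/var/log/apache2/error.log`, is an assumption about a Debian-style install).

```python
import re
from collections import Counter

# Matches mod_proxy_fcgi timeout entries shaped like the ones quoted above.
LINE_RE = re.compile(
    r"^\[(?P<timestamp>[^\]]+)\] \[proxy_fcgi:error\] "
    r"\[pid (?P<pid>\d+):tid (?P<tid>\d+)\] "
    r"\(70007\)The timeout specified has expired: "
    r"\[client (?P<client>[^\]]+)\] AH01075"
)


def count_timeouts(lines):
    """Count AH01075 timeout entries per client IP (source port stripped)."""
    counts = Counter()
    for line in lines:
        match = LINE_RE.match(line.strip())
        if match:
            ip = match.group("client").rsplit(":", 1)[0]
            counts[ip] += 1
    return counts


# Two of the log lines from this post, used as sample input.
sample = [
    "[Mon Apr 19 07:19:21.377363 2021] [proxy_fcgi:error] [pid 12740:tid 140270704207616] "
    "(70007)The timeout specified has expired: [client 192.168.10.125:64823] "
    "AH01075: Error dispatching request to : (polling)",
    "[Mon Apr 19 07:19:23.402128 2021] [proxy_fcgi:error] [pid 12740:tid 140270720993024] "
    "(70007)The timeout specified has expired: [client 192.168.10.125:64816] "
    "AH01075: Error dispatching request to : (polling)",
]

print(count_timeouts(sample))
```

To run it against a real log, pass an open file object instead of `sample`.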

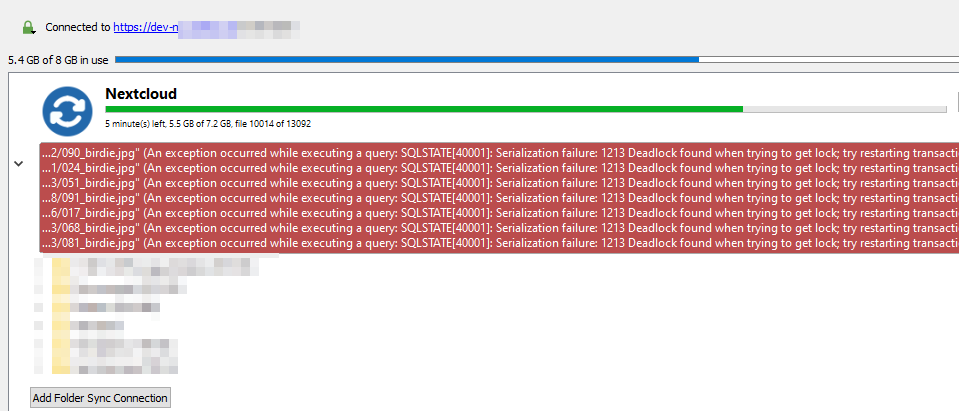

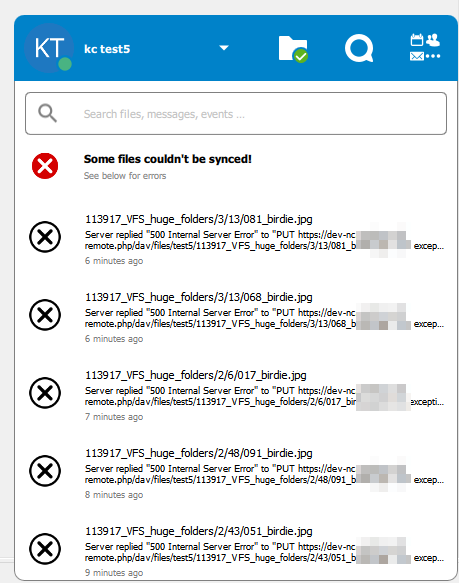

Is there a known bug where a folder with 60,000 files in several subfolders causes Nextcloud to abort the sync? The client seems to be running into a timeout, because the client log file contains:

2021-04-19 14:44:40:382 [ debug nextcloud.sync.database.sql ] [ OCC::SqlQuery::exec ]: SQL exec "SELECT lastTryEtag, lastTryModtime, retrycount, errorstring, lastTryTime, ignoreDuration, renameTarget, errorCategory, requestId FROM blacklist WHERE path=?1 COLLATE NOCASE"

2021-04-19 14:44:40:382 [ info sync.discovery ]: STARTING "FOTOS/2019/2019_08_09_Silas_Jonas" OCC::ProcessDirectoryJob::NormalQuery "FOTOS/2019/2019_08_09_Silas_Jonas" OCC::ProcessDirectoryJob::ParentDontExist

2021-04-19 14:44:40:382 [ info nextcloud.sync.accessmanager ]: 6 "PROPFIND" "https://servername/nextcloud/remote.php/dav/files/testuser/FOTOS/2019/2019_08_09_Silas_Jonas" has X-Request-ID "9a4f07cb-7f19-43df-9b09-784741fca4da"

2021-04-19 14:44:40:382 [ debug nextcloud.sync.cookiejar ] [ OCC::CookieJar::cookiesForUrl ]: QUrl("https://servername/nextcloud/remote.php/dav/files/testuser/FOTOS/2019/2019_08_09_Silas_Jonas") requests: (QNetworkCookie("nc_sameSiteCookielax=true; secure; HttpOnly; expires=Fri, 31-Dec-2100 23:59:59 GMT; domain=servername; path=/nextcloud"), QNetworkCookie("nc_sameSiteCookiestrict=true; secure; HttpOnly; expires=Fri, 31-Dec-2100 23:59:59 GMT; domain=servername; path=/nextcloud"), QNetworkCookie("oc_sessionPassphrase=K%2BWJoJAlcDrs1EUnolQCSTHYaSjx33OPBH9vC7YjFaGaVXSmSsq5gCnHbxdNQZYl9dCdjx5Qei6IVRM5i11ff7Okkb2QirI4TS1XXtjq%2Fz8QZeiF79fodEbMCyji9YHw; secure; HttpOnly; domain=servername; path=/nextcloud"), QNetworkCookie("ocut4lyy62j6=uu3tcdulfj1l82smb5k8qa0fgp; secure; HttpOnly; domain=servername; path=/nextcloud"))

2021-04-19 14:44:40:382 [ info nextcloud.sync.networkjob ]: OCC::LsColJob created for "https://servername/nextcloud" + "/FOTOS/2019/2019_08_09_Silas_Jonas" "OCC::DiscoverySingleDirectoryJob"

2021-04-19 14:44:40:616 [ warning nextcloud.sync.networkjob ]: Network job timeout QUrl("https://servername/nextcloud/remote.php/dav/files/testuser/FOTOS/100MEDIA")

2021-04-19 14:44:40:616 [ info nextcloud.sync.credentials.webflow ]: request finished

2021-04-19 14:44:40:616 [ warning nextcloud.sync.networkjob ]: QNetworkReply::OperationCanceledError "Connection timed out" QVariant(Invalid)

2021-04-19 14:44:40:616 [ warning nextcloud.sync.credentials.webflow ]: QNetworkReply::OperationCanceledError

2021-04-19 14:44:40:616 [ warning nextcloud.sync.credentials.webflow ]: "Operation canceled"
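Note that the timeout in this excerpt is reported for `FOTOS/100MEDIA`, not for the folder whose PROPFIND was started just before it. To list every folder that timed out, the client log can be scanned for the "Network job timeout" lines. The sketch below assumes the log format shown above; `timed_out_paths` and the `dav_prefix` parameter are illustrative names, not part of Nextcloud.

```python
import re
from urllib.parse import unquote, urlparse

# Matches the warning line the desktop client writes when a network job times out.
TIMEOUT_RE = re.compile(r'Network job timeout QUrl\("(?P<url>[^"]+)"\)')


def timed_out_paths(lines, dav_prefix="/remote.php/dav/files/"):
    """Return the folder paths (relative to the user's root) that timed out."""
    paths = []
    for line in lines:
        match = TIMEOUT_RE.search(line)
        if not match:
            continue
        path = unquote(urlparse(match.group("url")).path)
        # Drop everything up to and including ".../remote.php/dav/files/<user>/".
        _, sep, rest = path.partition(dav_prefix)
        if sep:
            _user, _, folder = rest.partition("/")
            paths.append(folder)
        else:
            paths.append(path)
    return paths


# One line from the client log quoted in this post.
sample = [
    '2021-04-19 14:44:40:616 [ warning nextcloud.sync.networkjob ]: Network job timeout '
    'QUrl("https://servername/nextcloud/remote.php/dav/files/testuser/FOTOS/100MEDIA")',
]

print(timed_out_paths(sample))
```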

I changed the following parameters, which sometimes seems to help a little. Could someone else please test them too?

Add the following lines to %appdata%\Nextcloud\nextcloud.cfg:

chunkSize=268435456

timeout=600
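For reference, 268435456 bytes is 256 MiB (256 × 1024 × 1024), and the timeout value is in seconds. A quick way to check that the client config really contains both values is sketched below. It assumes the settings belong in the `[General]` section of nextcloud.cfg, which is my understanding of the client documentation; `sync_settings` is a hypothetical helper, and the sample text stands in for the real file.

```python
import configparser


def sync_settings(cfg_text):
    """Read chunkSize and timeout from the [General] section of the given text."""
    parser = configparser.ConfigParser(interpolation=None, strict=False)
    parser.optionxform = str  # keep key case, e.g. "chunkSize"
    parser.read_string(cfg_text)
    general = parser["General"] if parser.has_section("General") else {}
    chunk = general.get("chunkSize")
    timeout = general.get("timeout")
    return {
        "chunk_mib": int(chunk) / (1024 * 1024) if chunk else None,
        "timeout_s": int(timeout) if timeout else None,
    }


# Stand-in for the contents of nextcloud.cfg (section placement is an assumption).
sample_cfg = """[General]
chunkSize=268435456
timeout=600
"""

print(sync_settings(sample_cfg))  # {'chunk_mib': 256.0, 'timeout_s': 600}
```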

On the Nextcloud server, modify the following parameters:

/etc/php/7.4/fpm/php.ini

post_max_size = 40M

upload_max_filesize = 40M

max_execution_time = 300

max_input_time = 600

/etc/php/7.4/apache2/php.ini

post_max_size = 40M

upload_max_filesize = 40M

max_execution_time = 300

max_input_time = 600

/etc/apache2/apache2.conf

Timeout 600

ProxyTimeout 600
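With these values, the limits along the request path are not all equal. The snippet below is just a sanity check that lists the values from this post and picks the lowest one. It treats all of them as comparable numbers of seconds, which is a simplification: the settings measure different things (for example, script execution time versus how long Apache waits for the backend), so the lowest number is only a hint about where a request might be cut off first.

```python
# Values copied from the settings listed in this post (all in seconds).
timeouts = {
    "client timeout (nextcloud.cfg)": 600,
    "PHP max_execution_time": 300,
    "PHP max_input_time": 600,
    "Apache Timeout": 600,
    "Apache ProxyTimeout": 600,
}


def tightest(limits):
    """Return the name and value of the lowest limit."""
    name = min(limits, key=limits.get)
    return name, limits[name]


name, seconds = tightest(timeouts)
print(f"Lowest limit: {name} = {seconds}s")  # Lowest limit: PHP max_execution_time = 300s
```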

Restart the Apache web server and the PHP service, then restart the Nextcloud desktop client and check whether the timeout is gone.

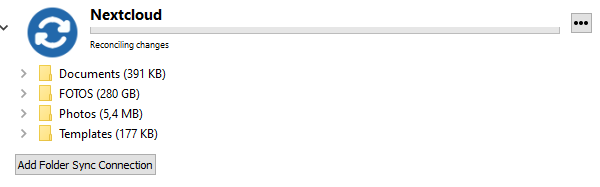

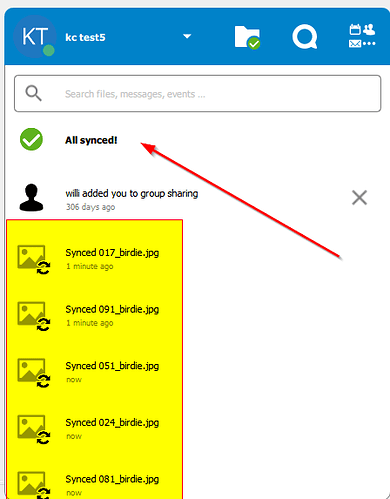

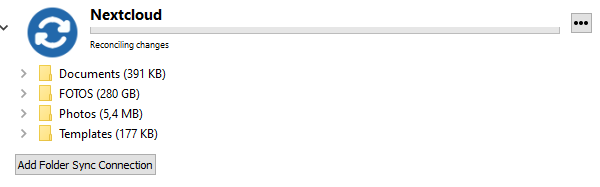

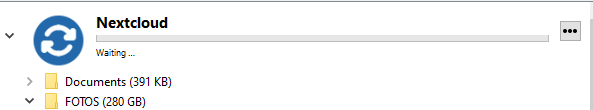

In my case the timeout no longer occurs, but the client now gets stuck at “Reconciling changes” for hours:

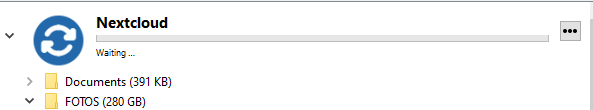

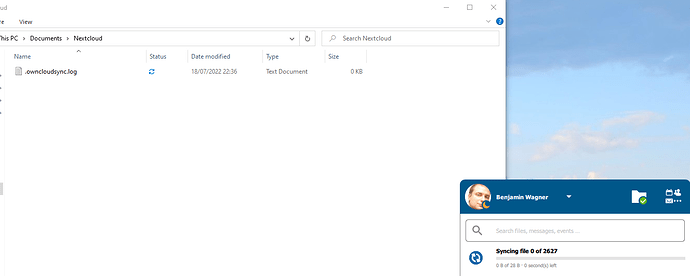

12 hours later it is stuck at:

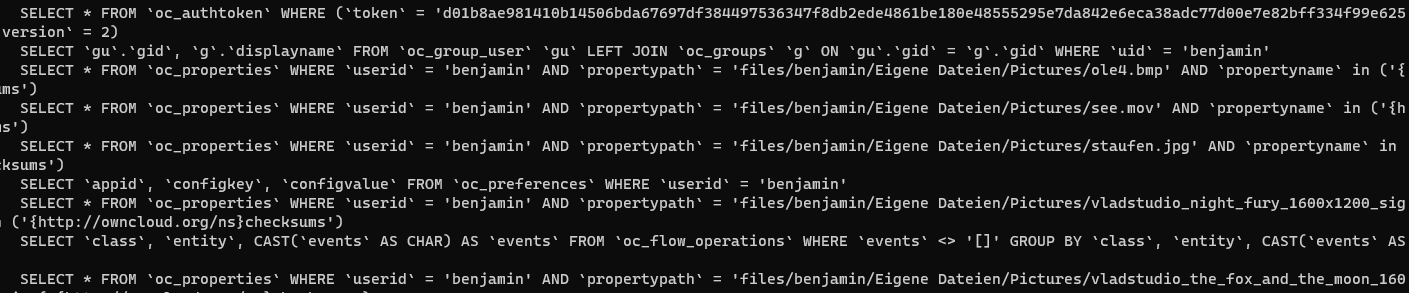

The local log file does not show any error message.