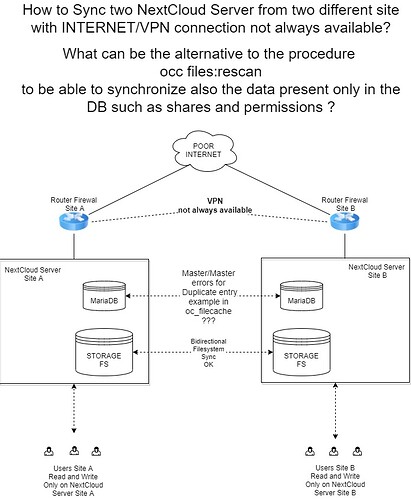

SCENARIO: two Nextcloud servers at two different sites, connected by a VPN over an internet connection that is not always available. Users read and write only on the server at their own site.

What alternative is there to the occ files:rescan procedure that would also synchronize the data stored only in the DB, such as shares and permissions?

I searched the forum and the internet, but the only successful configurations discussed are clusters (MariaDB Galera and Syncthing) and load balancers that require installation in the same datacenter. In my case the problem to solve is that the two servers are not always connected, because of various internet line problems (upload congestion, outages, line derating, etc.).

For the replication of the MariaDB database I followed this guide:

and until errors such as these appear:

Last_SQL_Errno: 1062

Last_SQL_Error: Could not execute Write_rows_v1 event on table mndrive.oc_filecache; Duplicate entry '1004' for key 'PRIMARY', Error_code: 1062; handler error HA_ERR_FOUND_DUPP_KEY; the event's master log mysqld-bin.000001, end_log_pos 261338

everything works perfectly… (a pity it doesn't last long)

The real problem is resynchronizing the two systems right after they have been offline from each other: each server kept working normally at its own site, so the two DBs have diverged.
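To make the misalignment concrete, here is a toy model in plain Python of how I understand the failure. It is only a sketch under assumptions: that the duplicate-key error comes from both servers handing out the same auto-increment id in oc_filecache while the link is down, and that replication simply replays the peer's inserts once the link returns. The `Server` class, the starting id and the file paths are hypothetical and are not Nextcloud or MariaDB code.

```python
class Server:
    """Toy stand-in for one database node with an auto-increment primary key."""

    def __init__(self, name, next_id):
        self.name = name
        self.next_id = next_id   # next primary key this node will hand out
        self.rows = {}           # primary key -> file path
        self.binlog = []         # local inserts not yet sent to the peer

    def local_insert(self, path):
        """Insert a row locally, as happens when a user uploads a file."""
        fileid = self.next_id
        self.next_id += 1
        self.rows[fileid] = path
        self.binlog.append((fileid, path))
        return fileid

    def apply_from_peer(self, events):
        """Replay the peer's inserts; return the first conflicting event, or None."""
        for fileid, path in events:
            if fileid in self.rows:
                # Same situation as "Duplicate entry ... for key 'PRIMARY'".
                return (fileid, path)
            self.rows[fileid] = path
            self.next_id = max(self.next_id, fileid + 1)
        return None


def simulate_outage():
    # Both sites start in sync, so both will hand out id 1004 next.
    site_a = Server("site-a", next_id=1004)
    site_b = Server("site-b", next_id=1004)

    # The VPN is down: each site keeps accepting uploads on its own.
    site_a.local_insert("files/report-a.odt")
    site_b.local_insert("files/report-b.odt")

    # The VPN comes back: site B replays what site A wrote in the meantime.
    return site_b.apply_from_peer(site_a.binlog)


conflict = simulate_outage()
print(conflict)
```

In this model the replay stops at the first insert whose id was already used locally, which is the same shape as the error 1062 I see on the replica.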

Best regards

GMR