I recently synced a folder with many files (11 GB in ~300,000 files) to my server (version 18.0.1, running the official Apache Docker container behind an nginx reverse proxy) using one client (Version 2.6.3stable-Win64 (build 20200217) on Windows 10 1909).

I then copied the files to another computer running the same client version and operating system.

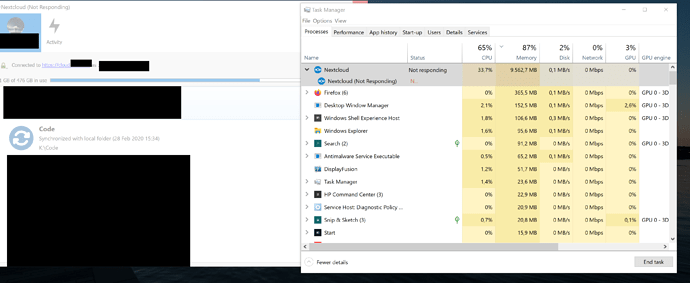

When I add the folder on this other computer, the following happens:

1. The client iterates through all files (“Checking for changes in remote …”).

2. The client displays “Synchronized with local folder”, but immediately becomes unresponsive (“Not Responding” in Windows).

3. Memory consumption of the unresponsive client slowly grows from ~300 MB during step 1 to more than 10 GB, until the client terminates (or is terminated by Windows?).

Syncing folders with fewer files works perfectly with the same client and server.

I have tried downgrading the desktop client to version 2.6.2, with the same result.

Unfortunately, neither the server nor the client logs seem to contain any errors.

Is there any way to simply prevent the client from “discovering”/checking the folder?

Since the folder is a one-to-one copy from another computer that is synced with the server, I already know that all files should be in sync.
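To back up the “one-to-one copy” claim before relying on it, one option is to build a manifest of relative paths and file sizes on each computer and compare the two. This is a minimal sketch, assuming Python 3 is available on both machines. The function names `tree_manifest` and `compare_manifests` are hypothetical, and matching sizes do not prove identical content.

```python
import os
from pathlib import Path


def tree_manifest(root):
    """Map each file's path (relative to root, with forward slashes) to its size in bytes."""
    root = Path(root)
    manifest = {}
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            full = Path(dirpath) / name
            rel = full.relative_to(root).as_posix()
            manifest[rel] = full.stat().st_size
    return manifest


def compare_manifests(a, b):
    """Return (only_in_a, only_in_b, size_mismatch) as sorted lists of relative paths."""
    only_a = sorted(a.keys() - b.keys())
    only_b = sorted(b.keys() - a.keys())
    # Paths present on both sides whose sizes differ.
    mismatch = sorted(p for p in a.keys() & b.keys() if a[p] != b[p])
    return only_a, only_b, mismatch
```

You could save each machine's manifest with `json.dump` and compare both files on one computer. The sync client's own hidden database files in the folder are expected to differ between the two machines and should be ignored when reading the result.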

Can the internal database of the client somehow be copied from one computer to the other?